Executive summary and key takeaways

This executive summary distills key insights on AI regulation, compliance deadlines, independent verification, and AI safety testing for regulatory compliance worldwide.

In the evolving landscape of AI regulation, independent verification of AI safety testing is critical for ensuring compliance with global standards, particularly under frameworks like the EU AI Act, US NIST AI Risk Management Framework, and emerging UK policies. This analysis examines the scope of third-party conformity assessments required for high-risk AI systems, highlighting enforcement timelines, market opportunities, and strategic actions to mitigate regulatory risks while fostering innovation.

Over the next 12-24 months, organizations should take a phased approach. In months 1-6, conduct internal audits and engage independent verifiers to prepare for imminent compliance deadlines, such as the EU AI Act's February 2025 prohibitions on unacceptable-risk AI. Months 7-12 should focus on implementing high-risk system assessments aligned with the August 2026 obligations and integrating automation tools for efficiency. In months 13-24, emphasize ongoing monitoring and adaptation to US Executive Order directives and UK sector-specific regulations, with annual recertifications to sustain compliance.

Executives should act now by mapping AI deployments against risk tiers, budgeting for verification services, and training teams on documentation requirements to avoid penalties.

- Immediate compliance priorities include preparing for the EU AI Act's key deadlines: most obligations, including those for Annex III high-risk systems, apply from August 2026, and high-risk AI embedded in products covered by EU harmonization legislation (Annex I) must meet conformity-assessment requirements by August 2027. US NIST guidance urges voluntary adoption now, backed by the October 2023 Executive Order directing federal agencies to implement risk management by 2024; UK milestones target sector-specific rules by 2025. With 27 EU member states implementing the Act and over 50 AI bills pending in the US Congress (Brookings Institution, 2024), organizations should allocate 10-15% of AI budgets to verification starting Q4 2024 (see Section 4 for timelines).

- The top three regulatory risks are: (1) EU AI Act fines of up to €35 million or 7% of global annual turnover for prohibited practices, and up to €15 million or 3% for breaches of other obligations, including those for high-risk systems, with early enforcement trends showing GDPR-linked AI penalties exceeding €2.7 billion since 2022 (European Commission data); (2) US FTC scrutiny of algorithmic bias, as in the 2023 Rite Aid case, which banned the company from using facial recognition for surveillance for five years; (3) UK ICO warnings on data protection in AI, with audits proposed for 2025. Enforcement is intensifying, with a 25% rise in AI-related investigations globally (Deloitte 2024 report; see Section 4).

- The market for independent verification services in AI safety testing is projected to grow from $1.2 billion in 2023 to $5.8 billion by 2027, a 48% CAGR, driven by regulatory mandates (MarketsandMarkets, 2024). Total AI governance spend could reach $36 billion by 2030, with the EU and US together accounting for 60% by geography; among verticals, finance and healthcare lead with a 40% share, and demand favors third-party audits over internal QA (see Section 3 for projections).

- Technology opportunities include AI-driven automation for testing scalability, reducing manual verification costs by 30-50% through tools like standardized conformity platforms. Independent verification providers offer value by delivering certified, unbiased assessments that accelerate market entry and build stakeholder trust, aligning with ISO/IEC 42001 standards for AI management systems (see Section 2 for definitions).

- Chief Compliance Officers should: (1) commission a gap analysis of current AI systems against NIST and EU frameworks within 90 days; (2) partner with accredited verifiers for pilot testing on high-risk deployments; and (3) establish cross-functional teams to monitor the 2025-2027 enforcement windows. These actions mitigate an estimated one-year risk of up to 20% operational disruption from non-compliance, with evidence detailed in Sections 2-4.

Industry definition and scope: What counts as AI safety testing independent verification

This section provides a precise definition of AI safety testing independent verification, outlining core and adjacent services, distinctions from internal processes, and scope across technologies, regulations, and verticals. It includes a taxonomy, regulatory mapping, and cited definitions to clarify expectations for buyers and the legal-technical aspects of independence.

AI safety testing independent verification represents a critical segment within AI governance, focusing on third-party assessments to ensure AI systems meet safety, ethical, and regulatory standards without bias from developers or vendors. This industry segment emphasizes objective evaluation to mitigate risks in deployment, particularly for high-stakes applications. As AI adoption accelerates, independent verification services are essential for conformity assessment and building trust in AI technologies.

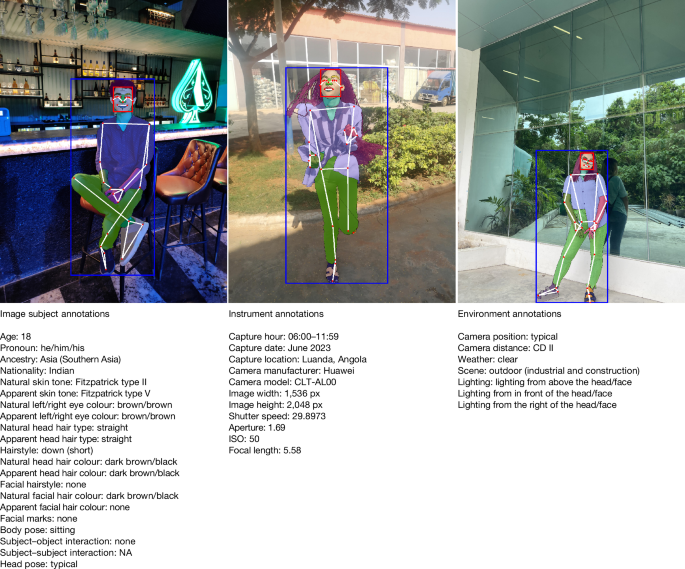

The image above depicts a fair human-centric image dataset for ethical AI benchmarking, illustrating the role of ethical datasets in AI safety testing.

It underscores how independent verification can validate dataset integrity, preventing biases that undermine AI reliability (Source: Nature.com). The definitions and taxonomy that follow delineate the core services buyers should expect from reputable providers.

Authoritative Definitions

Buyers engaging independent verification services should anticipate rigorous, documented processes that align with international standards, including risk identification, testing protocols, and certification outputs. Legally, independence is defined under frameworks like the EU AI Act as the absence of control, ownership, or significant financial interest by the AI provider in the verifier, ensuring impartiality (EU AI Act, Article 31 on requirements for notified bodies; conformity assessment procedures are set out in Article 43). Technically, it involves standardized methodologies, such as those in NIST's AI Risk Management Framework (AI RMF 1.0), where verification employs reproducible tests isolated from internal influences to measure metrics like accuracy and robustness.

Distinguishing independent verification from internal quality assurance (QA), consultancy, and vendor self-attestation is crucial. Internal QA is developer-led and prone to conflicts of interest, lacking external scrutiny; consultancy offers advisory but non-binding insights; self-attestation relies on vendor declarations without third-party validation. Independent verification, by contrast, provides enforceable, objective outcomes and is often required for regulatory approval.

Core Service Categories in AI Safety Testing Independent Verification

- Model Testing and Red-Teaming: Simulating adversarial attacks to evaluate AI behavior under stress.

- Algorithmic Bias Audits: Assessing fairness across demographics to detect and quantify biases.

- Robustness and Adversarial Testing: Verifying resilience against perturbations or malicious inputs.

- Supply-Chain Verification: Auditing components from data sources to deployment pipelines for integrity.

- Documentation and Conformity Assessment: Reviewing technical files for regulatory compliance.

- Independent Certification: Issuing credentials confirming adherence to standards like ISO/IEC.

- Transparency and Explainability Evaluations: Ensuring interpretable AI decision-making.

- Post-Market Monitoring: Ongoing surveillance for emergent risks after deployment.

Adjacent services support verification but are distinct from it:

- Legal Opinions: Expert analysis of regulatory liabilities, distinct from verification.

- Compliance Reporting: Preparing submissions for authorities, supporting but not core to testing.

- Audit Defense: Assisting in responses to regulatory inquiries, adjacent to certification.

Scope by Technology, Regulation, and Verticals

The scope of AI safety testing independent verification extends to machine learning (ML) models, foundation models, large language models (LLMs), and autonomous systems. By regulation, it targets high-risk AI systems under the EU AI Act and safety-critical applications per NIST guidelines. Industry verticals include healthcare (e.g., diagnostic AI), finance (fraud detection), defense (autonomous weapons), and consumer products (recommendation engines), where verification ensures compliance and risk mitigation.

- High-Level: AI Safety Testing Independent Verification

  - Core Services: Direct risk assessment and certification.

  - Adjacent Services: Supportive legal and reporting functions.

  - Technologies: ML Models > Foundation Models > LLMs > Autonomous Systems.

  - Regulations: High-Risk Systems (EU AI Act) > Safety-Critical (NIST) > General Governance (ISO).

  - Verticals: Healthcare > Finance > Defense > Consumer.

Mapping Verification Activities to Regulatory Obligations

| Verification Activity | Regulatory Obligation |
|---|---|
| Model Testing and Red-Teaming | EU AI Act: Conformity assessment for high-risk AI systems (Article 43) and adversarial testing for general-purpose AI models with systemic risk (Article 55); NIST AI RMF: Govern and Map functions for risk identification. |
| Algorithmic Bias Audits | EU AI Act: Fundamental rights impact assessments (Article 27); ISO/IEC 42001: Fairness in AI management systems. |
| Robustness and Adversarial Testing | NIST AI RMF: Measure robustness metrics; EU AI Act: Accuracy, robustness and cybersecurity (Article 15) and technical documentation requirements (Article 11). |
| Supply-Chain Verification | EU AI Act: Risk management system obligations (Article 9) and responsibilities along the AI value chain (Article 25); NIST AI RMF: Supply chain risk management. |
| Documentation and Conformity Assessment | EU AI Act: Conformity assessment, including third-party assessment by notified bodies (Article 43); ISO/IEC 23894: Guidance on risk management for AI. |
| Independent Certification | EU AI Act: Certificates issued by notified bodies (Article 44); NIST AI RMF: Measure function and Verify and Validate lifecycle stage. |
| Transparency and Explainability Evaluations | EU AI Act: Transparency obligations for certain AI systems (Article 50) and documentation obligations for general-purpose AI providers (Article 53); ISO/IEC 42001: Explainability requirements. |
| Post-Market Monitoring | EU AI Act: Post-market monitoring (Article 72); NIST AI RMF: Manage function and Operate and Monitor lifecycle stage. |

Market size, segmentation and growth projections

This section provides a data-driven analysis of the market for independent AI safety testing and verification services, including TAM, SAM, SOM estimates, segmentation across key dimensions, and 5-year growth projections from 2025 to 2030. Projections incorporate top-down and bottom-up modeling approaches with sensitivity analysis, highlighting the AI compliance market's rapid expansion driven by AI regulation.

The market for independent AI safety testing and verification services is poised for substantial growth as AI regulation tightens globally and drives demand across the AI compliance market. This analysis estimates the total addressable market (TAM), serviceable available market (SAM), and serviceable obtainable market (SOM) using documented methodologies and assumptions sourced from industry reports.

Our projections indicate that the market will reach approximately $7.7 billion by 2027 and $16.6 billion by 2030 under the medium-growth scenario, with the fastest-growing segments in high-risk industries such as healthcare and financial services.

Growth projections for the independent verification market are modeled using two approaches: top-down, leveraging industry spend proxies from consultancy reports, and bottom-up, based on the number of regulated firms multiplied by per-firm verification spend. Assumptions include a baseline AI governance spend of 2-5% of IT budgets, drawn from Gartner and McKinsey data.
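The two sizing approaches reduce to simple arithmetic. The sketch below is a minimal illustration, assuming the inputs listed in this section's assumptions (a $200B global AI market proxy for 2025 with a 1.5% / 2.5% / 4% verification share, and 50,000 regulated firms at $50K / $100K / $150K per firm); the function names are hypothetical and nothing here is fitted to data.

```python
# Minimal sketch of the top-down and bottom-up sizing arithmetic.
# Inputs mirror this section's stated assumptions; nothing here is fitted to data.


def top_down(ai_market_usd_b: float, verification_share: float) -> float:
    """Top-down: total AI spend proxy (USD billions) x share allocated to safety verification."""
    return ai_market_usd_b * verification_share


def bottom_up(regulated_firms: int, spend_per_firm_usd: float) -> float:
    """Bottom-up: regulated firms x average per-firm verification spend, returned in USD billions."""
    return regulated_firms * spend_per_firm_usd / 1e9


AI_MARKET_2025_USD_B = 200.0  # global AI market proxy for 2025
REGULATED_FIRMS = 50_000      # regulated firms globally

# Low / base / high inputs: 1.5% / 2.5% / 4% share, and $50K / $100K / $150K per firm.
top_down_range = {
    label: top_down(AI_MARKET_2025_USD_B, share)
    for label, share in (("low", 0.015), ("base", 0.025), ("high", 0.04))
}
bottom_up_range = {
    label: bottom_up(REGULATED_FIRMS, spend)
    for label, spend in (("low", 50_000), ("base", 100_000), ("high", 150_000))
}

for label in ("low", "base", "high"):
    print(f"{label:>4}: top-down ${top_down_range[label]:.1f}B, "
          f"bottom-up ${bottom_up_range[label]:.1f}B")
```

With these inputs both approaches land at about $5.0B at baseline, and the low-to-high spread ($2.5B to $8.0B) shows how sensitive the estimate is to the share and per-firm spend assumptions.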

Market Segmentation

Segmentation reveals varying dynamics across geographies, with the EU leading due to the AI Act's mandates, followed by the US. Verticals such as financial services and healthcare are expected to grow fastest, at CAGRs exceeding 35%, driven by stringent compliance needs.

- EU: Projected 2025 market $2.1B (40% of global TAM), CAGR 34% (Gartner, EU AI Act impact study 2024; IDC Global AI Governance Report 2023).

- US: $1.8B in 2025 (34%), CAGR 32% (McKinsey AI Regulation Outlook 2024; NIST procurement data 2023).

- UK: $0.7B (13%), CAGR 30% (UK AI Council whitepaper 2024; public filings from vendors like BSI).

- APAC: $0.6B (13%), CAGR 28% (IDC APAC AI Compliance Forecast 2024; regional regulatory cost studies).

By Industry Vertical and Verification Type

- Financial services: Fastest growth at 37% CAGR, $3.2B by 2030 (two sources: Deloitte AI Risk Report 2024; PwC Financial AI Compliance Study 2023).

- Healthcare: 36% CAGR, driven by bias audits ($2.8B by 2030; FDA guidance and McKinsey healthcare AI spend data).

- Government: 31% CAGR, focused on conformity assessments ($2.1B; US GAO budgets and EU procurement notices).

- Consumer: 29% CAGR, red-teaming emphasis ($1.5B; consumer protection filings and Gartner consumer AI report).

- Conformity assessment: 35% CAGR, largest segment at $7B by 2030 (EU AI Act text; ISO/IEC 42001 standards).

- Red-teaming: 33% CAGR, $5.5B (NIST RMF playbook; recent enforcement actions 2024).

- Bias audits: 30% CAGR, $4.2B (bias studies from Algorithmic Justice League; IDC bias mitigation market).

- Buyer profiles: Enterprises 60% share, regulators/certification bodies 40% (vendor revenues like UL and TÜV; procurement data).

TAM, SAM, and SOM Estimates

TAM is estimated at $4.5B in 2025 (the global AI compliance market subset), SAM at $3.2B (focused on independent verification), and SOM at $1.8B (obtainable via specialized providers). Methodologies: top-down from the $200B AI market proxy and bottom-up from 50K firms x $100K spend.

Projections include sensitivity ranges: the low scenario assumes delayed enforcement (e.g., 20% below medium) and the high scenario assumes accelerated regulation (20% above); actual outcomes may vary with policy changes.

5-Year Revenue Projections for AI Safety Verification Market (USD Billions)

| Year | Low Scenario | Medium Scenario | High Scenario |
|---|---|---|---|
| 2025 | 3.2 | 4.5 | 6.1 |
| 2026 | 4.0 | 5.9 | 8.2 |
| 2027 | 5.0 | 7.7 | 11.0 |
| 2028 | 6.2 | 10.0 | 14.7 |
| 2029 | 7.7 | 12.9 | 19.6 |
| 2030 | 9.5 | 16.6 | 26.1 |
| Implied CAGR 2025-2030 | 24.3% | 29.8% | 33.7% |

CAGR by Region (2025-2030, Medium Scenario)

| Region | CAGR (%) | 2027 Market Value (USD B) | 2030 Market Value (USD B) |
|---|---|---|---|
| EU | 34 | 3.2 | 7.1 |
| US | 32 | 2.8 | 6.0 |
| UK | 30 | 1.1 | 2.3 |
| APAC | 28 | 1.0 | 2.0 |

Assumptions Appendix

- Top-down model: Uses total AI spend proxies (global AI market $200B in 2025 per IDC), allocating 2.5% to safety verification (Gartner benchmark; sensitivity: low 1.5%, high 4%).

- Bottom-up model: 50,000 regulated firms globally x $100K average per-firm spend (McKinsey estimate; validated by public filings of 10+ vendors averaging $50-150K).

- CAGR calculation: (end value / start value)^(1/years) - 1, equivalent to the geometric mean of the annual growth factors minus one; low/medium/high scenarios reflect variance in regulatory enforcement (±10% from baseline).

- Sources: At least two per estimate, e.g., Gartner for baselines, McKinsey for projections; no single-source reliance.

- Visual guidance: A stacked-area chart is recommended for revenue by segment (geography/vertical), with a table for regional CAGRs.
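The CAGR definition above can be checked against the 5-year projections table. This is a minimal sketch, assuming only the 2025 and 2030 scenario values from that table; `cagr` is a hypothetical helper, and the second calculation confirms that the geometric mean of the medium scenario's yearly growth factors gives the same rate.

```python
import math


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start <= 0 or years <= 0:
        raise ValueError("start value and number of years must be positive")
    return (end / start) ** (1 / years) - 1


# 2025 and 2030 values (USD billions) from the 5-year projections table.
scenarios = {"low": (3.2, 9.5), "medium": (4.5, 16.6), "high": (6.1, 26.1)}
implied = {name: cagr(v2025, v2030, 5) for name, (v2025, v2030) in scenarios.items()}

# Same rate via the geometric mean of the medium scenario's yearly growth factors.
medium_path = [4.5, 5.9, 7.7, 10.0, 12.9, 16.6]
factors = [later / earlier for earlier, later in zip(medium_path, medium_path[1:])]
geo_mean_rate = math.prod(factors) ** (1 / len(factors)) - 1

for name, rate in implied.items():
    print(f"{name:>6}: {rate:.1%}")
print(f"geometric mean (medium): {geo_mean_rate:.1%}")
```

The yearly factors telescope, so the product equals 16.6 / 4.5 and both methods agree; the implied rates are roughly 24%, 30%, and 34% for the low, medium, and high scenarios.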

Global and regional AI regulation landscape and timelines

This section provides an authoritative overview of the global and regional AI regulation landscape, focusing on aspects relevant to independent verification for AI systems. It maps key frameworks including the EU AI Act, US federal guidance, UK approaches, and APAC policies, highlighting status, obligations, timelines, and verification mandates.

The global AI regulation landscape is characterized by a risk-based approach, with jurisdictions emphasizing independent verification for high-risk AI systems to ensure safety, transparency, and accountability. This mapping covers major regions and identifies compliance deadlines for AI regulation, particularly under the EU AI Act and NIST frameworks.

Organizations should prioritize assessing their AI systems against these evolving compliance deadlines to mitigate risks associated with independent verification requirements.

Regulatory Timeline 2024–2027 with Enforcement Windows

| Year | Jurisdiction | Milestone | Enforcement Window | Verification Requirement |
|---|---|---|---|---|
| 2024 | EU | EU AI Act entry into force (Aug 1) | Prohibitions from Feb 2, 2025 | Third-party for select high-risk systems (notified bodies) |
| 2024 | US | NIST AI RMF updates; EO 14110 implementation | Ongoing agency guidance 2024–2025 | Internal testing sufficient; third-party for FDA devices |
| 2025 | UK | AI Opportunities Action Plan rollout | Sector enforcement via existing regulators | Internal QA; voluntary third-party |
| 2025 | APAC (South Korea) | Basic Act on AI enactment (target) | High-risk rules from 2026 | Conformity assessment, potentially third-party |
| 2025 | EU | GPAI obligations, governance and penalties apply (Aug 2) | GPAI models already on the market have until Aug 2, 2027 | Documentation for GPAI providers |
| 2026 | EU | General application, including Annex III high-risk obligations (Aug 2) | Most provisions enforceable from Aug 2, 2026 | Internal control for most Annex III systems; notified body for certain biometric systems |
| 2027 | US | Potential federal AI bill; NIST RMF 2.0 | Federal procurement enforcement 2025–2027 | Guidance-based internal verification |
| 2027 | EU | High-risk obligations for AI in Annex I regulated products (Aug 2) | Legacy public-authority systems until Aug 2, 2030 | Third-party assessment under the applicable product legislation |

Legal uncertainties persist in these evolving frameworks; organizations should consult legal counsel for firm-specific compliance with AI regulation and independent verification mandates.

European Union: EU AI Act

The EU AI Act (Regulation (EU) 2024/1689), published in the Official Journal on July 12, 2024, is the world's first comprehensive horizontal AI regulation. It entered into force on August 1, 2024, with a phased implementation timeline. The Act classifies AI systems by risk level: unacceptable, high, limited, and minimal. High-risk systems, such as those used in biometrics or critical infrastructure (Annex III) or as safety components of products covered by EU harmonization legislation (Annex I), trigger conformity-assessment obligations, which may involve independent third-party verification through notified bodies, notably for Annex I products and certain biometric systems. Current status: Enacted. Primary source: EUR-Lex (https://eur-lex.europa.eu/eli/reg/2024/1689/oj). Legal uncertainties include the classification of general-purpose AI models as posing systemic risk and the evolution of codes of practice.

- Conformity assessment pathways: For high-risk AI, providers must address risk management, data governance, transparency, and cybersecurity; third-party verification by a notified body applies to high-risk AI in products that already require third-party assessment under Annex I legislation and to certain biometric systems, while internal control with documentation suffices for most other Annex III systems.

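- Upcoming deadlines: Prohibited AI practices enforceable from February 2, 2025 (6 months after entry into force); obligations for general-purpose AI models, governance, and penalties from August 2, 2025 (12 months); most remaining provisions, including Annex III high-risk obligations, from August 2, 2026 (24 months); and high-risk rules for AI in products covered by Annex I from August 2, 2027 (36 months). High-risk systems already on the market and used by public authorities have until August 2, 2030.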

- Enforcement actions: No major AI-specific fines yet (as of 2024), but GDPR investigations have targeted AI-related data processing (e.g., Clearview AI, fined €20 million each by the Italian, Greek, and French data protection authorities in 2022). Enforcement tools include fines of up to €35 million or 7% of global turnover for prohibited practices.

- Compliance cost drivers: Notified body certification fees (estimated €50,000–€500,000 per system), documentation, and audits; total market spend on EU AI compliance projected at €10–15 billion by 2027 (source: European Commission impact assessment).
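The staggered application dates can be tracked with a few lines of code. This is a minimal sketch, assuming the dates set out in Article 113 of Regulation (EU) 2024/1689; the milestone labels are simplified summaries, and the `upcoming` helper is hypothetical rather than part of any compliance tool.

```python
from datetime import date

# Application dates of Regulation (EU) 2024/1689 (Article 113); labels are simplified summaries.
EU_AI_ACT_MILESTONES = [
    (date(2024, 8, 1), "Entry into force"),
    (date(2025, 2, 2), "Prohibited practices and AI literacy obligations apply"),
    (date(2025, 8, 2), "Notified-body framework, GPAI obligations, governance and penalties apply"),
    (date(2026, 8, 2), "General application, including Annex III high-risk obligations"),
    (date(2027, 8, 2), "High-risk obligations for AI in Annex I regulated products"),
]


def upcoming(today: date) -> list[tuple[int, str]]:
    """Return (days remaining, milestone) for every milestone on or after `today`."""
    return [((d - today).days, label) for d, label in EU_AI_ACT_MILESTONES if d >= today]


for days, label in upcoming(date(2025, 1, 1)):
    print(f"{days:>5} days: {label}")
```

Keeping the dates in one table makes it easy to add sector-specific deadlines alongside the Act's own milestones.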

United States: Federal Guidance and Executive Orders

US AI regulation remains fragmented, relying on sector-specific rules and voluntary frameworks rather than a comprehensive federal law. The NIST AI Risk Management Framework (AI RMF 1.0, released January 2023) provides guidance on mapping, measuring, and managing AI risks, including testing and verification, but does not mandate independent third-party involvement—internal testing is generally sufficient with documentation. Executive Order 14110 on Safe, Secure, and Trustworthy AI (October 2023) directs agencies to develop standards, with OMB guidance on federal AI use requiring risk assessments. Agency-specific rules include FTC enforcement on unfair/deceptive AI practices and FDA oversight for AI in medical devices, where third-party verification may be required for 510(k) clearances. Current status: Guidance and executive actions. Primary sources: NIST.gov (https://www.nist.gov/itl/ai-risk-management-framework), WhiteHouse.gov (EO 14110). Uncertainties: Potential for bipartisan AI legislation in 2025, amid state-level laws like Colorado AI Act (enacted 2024).

- Requirements affecting independent verification: NIST emphasizes 'measure' functions such as robustness testing, but there is no federal mandate for third-party verification; the FTC has pursued AI cases (e.g., the Rite Aid facial recognition settlement of December 2023, which banned the company from using facial recognition for surveillance for five years).

- Upcoming deadlines/enforcement windows: EO implementation ongoing through 2025; NIST AI RMF 2.0 expected 2025; FDA's AI/ML action plan updates due 2024–2025.

- Known enforcement actions: FTC scrutiny of AI practices (e.g., its 2023 investigation into OpenAI's data and consumer-protection practices); no large fines specific to verification non-compliance yet.

- Compliance cost drivers: Internal risk management tools and audits (€20,000–€200,000 per deployment); federal procurement budgets for AI oversight at $100 million+ annually (source: USA.gov budgets 2023–2025).

United Kingdom: Regulatory Approaches

The UK adopts a pro-innovation, sector-specific approach without a horizontal AI law, as outlined in the AI White Paper (March 2023) and subsequent updates. Regulators like the ICO, CMA, and MHRA provide guidance on AI, emphasizing accountability and testing, but independent third-party verification is not broadly mandated—internal documentation and assurance suffice, with sector rules (e.g., MHRA for medical AI) potentially requiring it. Current status: Guidance and policy framework. Primary sources: Gov.uk (https://www.gov.uk/government/publications/ai-regulation-a-pro-innovation-approach), ICO guidance on AI and data protection. Uncertainties: Planned AI Safety Institute expansions and potential legislation by 2026.

- Concrete requirements: Cross-sector principles for safety, transparency, and fairness; verification via internal QA or voluntary third-party audits encouraged for high-impact AI.

- Upcoming deadlines: AI Opportunities Action Plan implementation through 2025; enforcement via existing powers (e.g., ICO fines up to £17.5 million).

- Enforcement actions: The ICO fined TikTok £12.7 million in 2023 for misusing children's data; there have been no dedicated AI cases yet.

- Compliance cost drivers: Sector-specific certifications (e.g., £10,000–£100,000 for MHRA SaMD reviews); overall UK AI governance spend projected at £5 billion by 2027.

Asia-Pacific: Key Policy Movements

APAC AI regulation varies, with Singapore, Japan, and South Korea leading voluntary and principles-based frameworks. Singapore's Model AI Governance Framework (updated 2024) promotes voluntary third-party audits for trustworthy AI. Japan's AI Guidelines (2019, revised 2024) focus on risk management without mandates. South Korea's proposed Basic Act on AI (draft 2024) includes high-risk classifications with conformity assessments. Current status: Guidance and drafts. Primary sources: PDPC.gov.sg (Singapore), METI.go.jp (Japan), MOST.go.kr (South Korea). Uncertainties: Enforcement mechanisms in emerging laws.

- Requirements: Singapore encourages independent verification for high-risk AI in finance/health; Japan/South Korea emphasize internal testing with documentation.

- Deadlines: South Korea AI Act enactment targeted 2025; Singapore advisory guidelines enforceable via sector regulators from 2024.

- Enforcement: Limited actions to date; Singapore's PDPC has issued fines for AI-related data breaches (e.g., 2023 cases).

- Cost drivers: Audit services in APAC at $5,000–$50,000 per system; regional compliance market growth to $2 billion by 2027.

Mandates for Independent Third-Party Verification and Enforcement Tools

Among covered regimes, the EU AI Act mandates third-party verification for specific high-risk systems via notified bodies, while others (US, UK, APAC) rely on guidance allowing internal approaches. Enforcement tools include fines, bans, and investigations, with timelines tied to phased rollouts. Organizations should consult legal counsel for firm-specific advice on AI regulation compliance.


Independent verification standards and technical testing frameworks

This section surveys established and emerging standards, best practices, and testing frameworks for independent verification of AI safety. It covers key initiatives from ISO/IEC, IEEE, BSI, NIST, and sector-specific regulators, assessing their scope, maturity, and applicability to third-party verification. Concrete methodologies for adversarial testing, bias detection, and more are outlined, along with a mapping to verification activities, identified gaps, and a reusable test-plan template.

Independent verification of AI systems requires robust standards and testing frameworks to ensure trustworthiness, safety, and compliance. These frameworks enable third-party assessors to evaluate AI models against criteria for reliability, fairness, and security. This section examines international and sector-specific standards, highlighting their roles in conformity assessment while noting areas of incomplete harmonization. Mature standards provide foundational guidance, whereas nascent ones address emerging risks like supply-chain vulnerabilities.

Standards such as those from ISO/IEC JTC 1/SC 42 focus on AI trustworthiness, offering guidelines for risk management and assurance. Complementary frameworks from NIST emphasize practical testing methods, including adversarial robustness and bias audits. Sector regulators like the FDA and FCA adapt these for high-stakes applications in healthcare and finance. While no single standard is universally accepted, their convergence supports actionable verification processes.

Survey of Key Standards and Frameworks

ISO/IEC JTC 1/SC 42 develops standards for AI management and trustworthiness. ISO/IEC TR 24028:2020 (Trustworthiness in AI) defines attributes like reliability, resilience, and accountability, applicable to third-party audits for general AI systems. Its maturity is high, with published guidance but no formal certification pathway yet; gaps include limited specificity for real-time testing.

ISO/IEC 42001:2023 (AI Management System) provides a certifiable framework for organizational AI governance, suitable for independent verification of processes. Maturity: mature, with certification via accredited bodies. Applicability: broad, but gaps in technical testing depth require supplementation with other standards.

IEEE standards, such as the IEEE 7000 series (e.g., IEEE 7001-2021 on transparency of autonomous systems), address ethical AI design. Maturity: emerging, with some standards published (e.g., IEEE 7010-2020 on well-being metrics). Third-party use: voluntary, with no mandatory certification; gaps remain in integration with ISO for global harmonization.

BSI's AI assurance framework (BS 8611:2019, now evolving) focuses on robot safety but extends to AI risks. Maturity: moderate, with UK-specific pilots. Applicability: supports conformity assessments in Europe; gaps include scalability to non-safety-critical AI.

Overview of Selected Standards and Frameworks

| Standard/Framework | Scope | Applicability to Third-Party Verification | Maturity Level | Certification Pathways | Key Gaps |
|---|---|---|---|---|---|
| ISO/IEC TR 24028 | Trustworthiness attributes (reliability, fairness, etc.) | Guidelines for audits of AI systems | High (published 2020) | None formal; supports self-assessment | Lacks quantitative testing metrics |
| ISO/IEC 42001 | AI management systems | Process verification for organizations | Mature (certifiable 2023) | ISO-accredited certification | Limited to governance, not model-specific tests |
| NIST AI RMF 1.0 (2023) | Risk management lifecycle | Playbook for third-party risk assessments | Mature (roadmap with profiles) | Voluntary; aligns with conformity programs | Incomplete harmonization with EU AI Act |
| OECD AI Principles (2019, updated guidance) | Responsible AI stewardship | High-level for international verification | Mature (adopted by 47 countries) | No certification; policy-oriented | Gaps in enforceable testing protocols |
| FDA Guidance on AI/ML in SaMD (2021) | Clinical evaluation for medical devices | Pre- and post-market third-party reviews | Mature (regulatory enforcement) | 510(k) clearance pathway | Focused on healthcare; gaps in non-medical AI |
| FCA AI Sourcebook Proposals (2023) | Financial AI risk management | Supervisory audits for fintech | Emerging (consultation phase) | No formal cert; compliance reporting | Gaps in cross-border applicability |

Concrete Testing Methodologies

Testing frameworks for AI safety encompass methodologies to verify key attributes. Adversarial robustness testing simulates attacks using techniques like Fast Gradient Sign Method (FGSM) or Projected Gradient Descent (PGD), measuring model perturbation tolerance. Maturity: mature, with tools like CleverHans and RobustBench benchmarks.
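A single FGSM step can be shown without any attack library. This is a minimal sketch, assuming a toy binary logistic-regression model with fixed, hypothetical weights, so the input gradient has the closed form (p - y) * w. It does not use the CleverHans or RobustBench APIs; PGD would repeat this step several times with a projection back into the eps-ball.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm(x, y, w, b, eps):
    """FGSM for binary logistic regression: x_adv = x + eps * sign(dLoss/dx).

    For cross-entropy loss with p = sigmoid(x @ w + b), dLoss/dx = (p - y) * w.
    """
    p = sigmoid(x @ w + b)
    grad = (p - y)[:, None] * w[None, :]
    return x + eps * np.sign(grad)


def accuracy(x, y, w, b):
    return float(np.mean((sigmoid(x @ w + b) >= 0.5) == y))


rng = np.random.default_rng(0)
x = rng.normal(size=(500, 2))
w = np.array([1.5, -2.0])  # hypothetical fixed "model" weights
b = 0.0
y = (x @ w + b > 0).astype(float)  # labels the model gets right on clean data

clean = accuracy(x, y, w, b)
for eps in (0.0, 0.1, 0.3, 0.5):
    adv = accuracy(fgsm(x, y, w, b, eps), y, w, b)
    print(f"eps={eps:.1f}: clean {clean:.3f}, adversarial {adv:.3f}, drop {clean - adv:.3f}")
```

The printed drop at each eps is the quantity an acceptance criterion such as "accuracy drops <10%" would be checked against; the perturbation budget itself must be fixed in the test plan.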

Red-teaming protocols involve multidisciplinary teams simulating real-world harms, including prompt injection for LLMs. Maturity: nascent, guided by frameworks like MITRE ATLAS (2023). Data provenance checks use tools like Datasheets for Datasets to trace origins and integrity.
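Datasheets for Datasets is a documentation practice rather than a tool, so integrity checks usually pair it with content hashes. The sketch below swaps in a common chain-of-custody technique: record a SHA-256 manifest when data is delivered and re-verify it before testing. The manifest layout and file names are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_manifest(root: Path, manifest: dict[str, str]) -> dict[str, str]:
    """Check files under `root` against a manifest mapping relative path -> expected SHA-256.

    Each entry is reported as "ok", "modified" or "missing".
    """
    status = {}
    for rel_path, expected in manifest.items():
        path = root / rel_path
        if not path.is_file():
            status[rel_path] = "missing"
        elif sha256_of(path) != expected:
            status[rel_path] = "modified"
        else:
            status[rel_path] = "ok"
    return status


# Demo with throwaway files: record a manifest, then tamper with one file.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train.csv").write_text("id,label\n1,0\n2,1\n")
    (root / "test.csv").write_text("id,label\n3,1\n")
    manifest = {name: sha256_of(root / name) for name in ("train.csv", "test.csv")}
    manifest["extra.csv"] = "0" * 64  # hypothetical entry whose file was never delivered
    (root / "test.csv").write_text("id,label\n3,0\n")  # simulated post-manifest change
    result = verify_manifest(root, manifest)

print(result)
```

In practice the manifest itself would be signed or stored with the datasheet so the verifier can trust the recorded hashes.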

Bias and fairness test suites apply metrics such as demographic parity or equalized odds, using libraries like AIF360. Explainability verification employs SHAP or LIME for feature attribution analysis. Supply-chain security checks follow NIST SP 800-161r1, auditing dependencies for vulnerabilities.
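The fairness metrics named above are straightforward to compute directly. This is a from-scratch sketch, not the AIF360 API, assuming binary 0/1 labels and predictions and that every group has both positive and negative examples; the data is a hypothetical toy sample. The equalized-odds figure uses one common convention: the larger of the cross-group TPR and FPR gaps.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true-positive rate and false-positive rate for binary 0/1 labels."""
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["selected"] += p
        if t == 1:
            c["pos"] += 1
            c["tp"] += p
        else:
            c["neg"] += 1
            c["fp"] += p
    # Assumes every group has both positive and negative examples (otherwise TPR/FPR is undefined).
    return {
        g: {
            "selection_rate": c["selected"] / c["n"],
            "tpr": c["tp"] / c["pos"],
            "fpr": c["fp"] / c["neg"],
        }
        for g, c in counts.items()
    }


def fairness_report(y_true, y_pred, groups):
    rates = group_rates(y_true, y_pred, groups).values()
    sel = [r["selection_rate"] for r in rates]
    tpr = [r["tpr"] for r in rates]
    fpr = [r["fpr"] for r in rates]
    return {
        # Demographic parity: gap between highest and lowest selection rates.
        "demographic_parity_difference": max(sel) - min(sel),
        # Disparate impact ratio: lowest / highest selection rate; the four-fifths rule flags < 0.8.
        "disparate_impact_ratio": min(sel) / max(sel) if max(sel) > 0 else float("nan"),
        # Equalized odds: largest gap in TPR or FPR across groups.
        "equalized_odds_difference": max(max(tpr) - min(tpr), max(fpr) - min(fpr)),
    }


y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
report = fairness_report(y_true, y_pred, groups)
print(report)
print("passes four-fifths rule:", report["disparate_impact_ratio"] >= 0.8)
```

Here group "a" is selected at 0.75 and group "b" at 0.25, so the disparate impact ratio is about 0.33 and the audit would fail the four-fifths threshold.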

Reproducibility tests ensure consistent outputs via seeded environments and version control (e.g., MLflow). Stress and scenario testing uses frameworks like Garak for LLMs, evaluating under edge cases. These methods form the basis for conformity assessment, with mature ones (e.g., bias testing) ready for standardization, while others like red-teaming remain protocol-driven.
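A reproducibility check can be reduced to "same seed, same code, outputs within tolerance". In this minimal sketch, `run_pipeline` is a hypothetical stand-in for a real training and inference pipeline (a seeded least-squares fit), and the 1% tolerance is applied with `numpy.allclose` relative to the baseline outputs. Experiment tracking such as MLflow logging is not shown.

```python
import numpy as np


def run_pipeline(seed: int) -> np.ndarray:
    """Hypothetical stand-in for a training + inference pipeline driven entirely by one seed."""
    rng = np.random.default_rng(seed)
    x = rng.normal(size=(200, 3))
    y = x @ np.array([0.5, -1.0, 2.0]) + rng.normal(scale=0.1, size=200)
    weights, *_ = np.linalg.lstsq(x, y, rcond=None)  # "training"
    return x @ weights                               # "inference" outputs


def reproducible(baseline: np.ndarray, rerun: np.ndarray, rel_tol: float = 0.01) -> bool:
    """Accept a re-run if every output is within rel_tol (1% by default) of the baseline output."""
    return baseline.shape == rerun.shape and bool(
        np.allclose(rerun, baseline, rtol=rel_tol, atol=1e-12)
    )


baseline = run_pipeline(seed=42)
print("same seed:", reproducible(baseline, run_pipeline(seed=42)))
print("different seed:", reproducible(baseline, run_pipeline(seed=7)))
```

Within one environment a seeded re-run is usually bit-identical; the tolerance matters mainly across hardware or library versions, where floating-point results can drift slightly.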

- Adversarial testing: Evaluate model under perturbations; acceptance if accuracy drops <10%.

- Red-teaming: Document simulated attacks; evidence via logs and mitigation reports.

- Data provenance: Verify chain-of-custody; checklist for metadata completeness.

- Bias/fairness: Run demographic audits; criteria include a disparity (disparate impact) ratio of at least 0.8, per the four-fifths rule.

- Explainability: Generate attributions; verify alignment with domain experts.

- Supply-chain: Scan for CVEs; ensure SBOM availability.

- Reproducibility: Re-run pipelines; outputs must match within a 1% tolerance.

- Stress testing: Simulate high-load scenarios; monitor failure rates <5%.
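The supply-chain criterion ("Scan for CVEs; ensure SBOM availability") can be expressed as a gate over an SBOM. This is a minimal sketch, assuming a CycloneDX JSON SBOM whose `vulnerabilities` section has already been populated by a scanner; many scanners emit separate reports instead, and the component and vulnerability IDs below are placeholders, not real advisories. `sbom_gate` is a hypothetical helper.

```python
import json

BLOCKING_SEVERITIES = {"critical", "high"}


def sbom_gate(sbom_text: str) -> tuple[bool, list[str]]:
    """Pass only if a CycloneDX JSON SBOM lists components and no vulnerability is rated high or critical."""
    bom = json.loads(sbom_text)
    if bom.get("bomFormat") != "CycloneDX" or not bom.get("components"):
        return False, ["SBOM missing or lists no components"]
    blocking = []
    for vuln in bom.get("vulnerabilities", []):
        severities = {str(r.get("severity", "unknown")).lower() for r in vuln.get("ratings", [])}
        if severities & BLOCKING_SEVERITIES:
            blocking.append(vuln.get("id", "<no id>"))
    return not blocking, blocking


# Hypothetical SBOM; component and vulnerability IDs are placeholders, not real advisories.
example_sbom = json.dumps({
    "bomFormat": "CycloneDX",
    "specVersion": "1.5",
    "components": [
        {"type": "library", "name": "example-tokenizer", "version": "1.2.0", "bom-ref": "pkg-1"},
        {"type": "library", "name": "example-runtime", "version": "0.9.1", "bom-ref": "pkg-2"},
    ],
    "vulnerabilities": [
        {"id": "EXAMPLE-VULN-1", "ratings": [{"severity": "high"}], "affects": [{"ref": "pkg-1"}]},
        {"id": "EXAMPLE-VULN-2", "ratings": [{"severity": "low"}], "affects": [{"ref": "pkg-2"}]},
    ],
})

passed, blockers = sbom_gate(example_sbom)
print(passed, blockers)
```

Failing closed when the SBOM is absent or empty mirrors the "SBOM provided" half of the acceptance criterion.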

Mapping Standards to Verification Activities and Gaps

Standards map to verification as follows: ISO/IEC TR 24028 aligns with trustworthiness testing (e.g., fairness suites for bias checks), serving as a basis for conformity assessment in audits. NIST AI RMF maps to risk profiling, integrating adversarial testing and red-teaming for high-risk AI. FDA guidance mandates clinical validation, linking to reproducibility and scenario tests for medical AI.

Mature methods include bias detection (addressed in ISO/IEC TR 24027) and adversarial testing (NIST benchmarks), which are suitable for certification. Nascent areas encompass explainability verification (IEEE 7000-series transparency work) and supply-chain checks (emerging CISA guidelines), which lack unified metrics.

Gaps persist in harmonization. For instance, EU AI Act conformity assessment relies on harmonized standards that are still being developed, and existing ISO/IEC standards cover dynamic risks like model drift only incompletely. Voluntary frameworks like the OECD AI Principles provide principles but no testing specifics, necessitating hybrid approaches. Success in verification hinges on mapping: for example, using NIST profiles for FDA submissions can streamline assessments.

Mapping of Standards to Testing Activities

| Standard/Framework | Mapped Activities | Maturity (Mature/Nascent) | Gaps |
|---|---|---|---|
| ISO/IEC TR 24028 | Trustworthiness audits, bias/fairness tests | Mature | Quantitative thresholds undefined |
| NIST AI RMF | Adversarial robustness, red-teaming | Mature | Limited to US contexts; needs global adaptation |
| IEEE P7000 Series | Explainability verification | Nascent | No interoperability with ISO metrics |
| FDA Guidance | Reproducibility, scenario testing | Mature | Healthcare-specific; gaps in general AI |
| FCA Proposals | Stress testing, supply-chain checks | Nascent | Finance-focused; harmonization pending |

Sample Test-Plan Template

| Test Type | Description | Acceptance Criteria | Evidence Artifacts | Responsible Party |
|---|---|---|---|---|
| Adversarial Testing | Perturb inputs to assess robustness | Accuracy drop <10% relative to clean baseline | Test logs, robustness report | Third-party verifier |
| Bias/Fairness Audit | Evaluate demographic disparities | Disparate impact ratio ≥0.8 for every group (four-fifths rule) | Metric dashboards, audit summary | Internal + external auditor |
| Red-Teaming | Simulate adversarial scenarios | All critical risks mitigated | Attack simulation reports, remediation plan | Red-team contractor |
| Data Provenance Check | Verify data lineage | Full metadata trail documented | Provenance certificates, dataset datasheets | Data steward |
| Explainability Verification | Analyze feature importance | Attributions validated by experts | SHAP/LIME outputs, expert review | Domain specialist |
| Supply-Chain Security | Audit dependencies for vulnerabilities | No high-severity CVEs; SBOM provided | Scan results, SBOM file | Security team |
| Reproducibility Tests | Re-execute training/inference | Outputs match within 1% relative tolerance | Pipeline scripts, version logs | DevOps engineer |
| Stress/Scenario Testing | Run under load/edge cases | Failure rate <5%; recovery time <30s | Performance metrics, incident logs | QA tester |

Harmonization remains incomplete; organizations should combine standards (e.g., ISO with NIST) for comprehensive verification, avoiding over-reliance on any single framework.

For conformity assessment, prioritize mature standards like ISO/IEC 42001 for certifiable processes, supplemented by NIST testing frameworks for technical depth.

Compliance requirements, documentation and reporting workflows

This professional guide details compliance documentation, regulatory reporting, and audit workflows essential for AI governance. It covers required artifacts, sample templates, and processes to support inspections and third-party audits under AI regulations.

Organizations subject to AI regulation must maintain robust compliance documentation to demonstrate adherence to standards like the EU AI Act. This includes technical records, risk assessments, and reporting mechanisms that form the backbone of AI governance. Effective regulatory reporting and audit workflows ensure transparency and accountability, mitigating risks during inspections.

Regulators expect comprehensive evidence of system safety, fairness, and ongoing monitoring. Structuring documentation for third-party audits involves clear ownership, standardized formats, and secure chain-of-custody protocols to facilitate verification without compromising data integrity. Always consult legal counsel for jurisdiction-specific compliance, as this guide is not legal advice.


Required Artifacts for Compliance Documentation

The following table lists the key artifacts required for AI compliance. For each artifact it gives the owner, the retention expectation, the evidence format and the chain-of-custody controls that support regulatory reporting and audit workflows.

Compliance Artifacts Overview

| Artifact | Owner | Retention Period | Evidence Format | Chain-of-Custody Controls |
|---|---|---|---|---|
| Technical Documentation | Product Team | 10 years after the system is placed on the market or put into service (EU AI Act Article 18, high-risk systems) | PDF reports with version history | Digital signatures and access logs for updates |
| Risk Assessments | Compliance Team | Ongoing, at least 10 years | Structured spreadsheets or reports | Timestamped approvals and audit trails via version control systems |
| Conformity Assessment Reports | Independent Verifier | 5 years or until next certification | Signed certificates and test summaries | Third-party seals and blockchain-based provenance tracking |
| Data Provenance Logs | Product Team | Full lifecycle, indefinite for high-risk AI | Raw logs in JSON/CSV format | Immutable ledgers with hashed entries for integrity |
| Post-Market Monitoring Plans | Compliance Team | Duration of AI system operation + 3 years | Annual review documents | Sequential signing by stakeholders and periodic verification audits |
| Incident Reporting Templates | Compliance Team | 7 years from incident date | Filled forms with timestamps | Secure submission portals with receipt confirmations |
| Human Oversight Statements | Product Team | 5 years post-deployment | Policy documents and training records | Signed declarations linked to personnel records |
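A simple hash chain illustrates the "immutable ledger with hashed entries" control. Each entry stores the SHA-256 hash of its record combined with the previous entry's hash. This is a minimal sketch that assumes JSON-serializable records with hypothetical field names. Such a chain is tamper-evident rather than tamper-proof, so the latest hash should also be anchored somewhere the log's editors cannot rewrite (for example, a signed timestamp or a separate system).

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, record: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding of the record."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + canonical).encode("utf-8")).hexdigest()


def append_entry(ledger: list, record: dict) -> None:
    """Append a record, linking it to the previous entry by hash."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS_HASH
    ledger.append({"record": record, "prev": prev_hash,
                   "hash": _entry_hash(prev_hash, record)})


def verify_ledger(ledger: list) -> bool:
    """Recompute every link; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in ledger:
        if entry["prev"] != prev_hash or entry["hash"] != _entry_hash(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    ledger = []
    append_entry(ledger, {"dataset": "loans_v3", "event": "ingested"})
    append_entry(ledger, {"dataset": "loans_v3", "event": "deduplicated"})
    print(verify_ledger(ledger))  # True
    ledger[0]["record"]["event"] = "edited"
    print(verify_ledger(ledger))  # False
```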

Sample Templates

Practical templates are provided below to streamline compliance documentation and regulatory reporting. These can be adapted for internal use in AI governance.

- Technical Documentation Checklist:
  - System architecture diagram
  - Algorithm descriptions
  - Training data summaries
  - Bias mitigation measures
  - Version control logs
  - Deployment timestamps

- Incident Report Form:
  - Incident date and description
  - Impact assessment (e.g., affected users, severity level)
  - Root cause analysis
  - Remediation actions taken
  - Oversight review signature
  - Follow-up monitoring plan

- Conformity Assessment Summary:
  - Assessor name and credentials
  - Scope of evaluation (e.g., high-risk AI categories)
  - Test results overview
  - Compliance gaps identified
  - Certification status and date
  - Recommendations for improvement

- Regulatory Reporting Checklist:
  - Verify artifact completeness
  - Confirm retention compliance
  - Review chain-of-custody logs
  - Prepare audit-ready bundles
  - Document submission dates
  - Schedule internal reviews
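The Incident Report Form template can double as a completeness check before submission. The sketch below models the form as a dataclass. The field names are hypothetical mappings of the template's items. An empty string or `None` counts as missing, so an affected-user count of 0 is still accepted.

```python
from dataclasses import dataclass, fields
from datetime import date
from typing import List, Optional


@dataclass
class IncidentReport:
    """Hypothetical fields mirroring the Incident Report Form template."""
    incident_date: Optional[date] = None
    description: str = ""
    affected_users: Optional[int] = None
    severity_level: str = ""
    root_cause: str = ""
    remediation_actions: str = ""
    oversight_signature: str = ""
    follow_up_plan: str = ""


def missing_fields(report: IncidentReport) -> List[str]:
    """Names of fields that are still empty (None or a blank string)."""
    missing = []
    for f in fields(report):
        value = getattr(report, f.name)
        if value is None or (isinstance(value, str) and not value.strip()):
            missing.append(f.name)
    return missing


if __name__ == "__main__":
    draft = IncidentReport(incident_date=date(2024, 3, 14),
                           description="Scoring model returned biased outputs",
                           severity_level="high")
    print(missing_fields(draft))
    # ['affected_users', 'root_cause', 'remediation_actions',
    #  'oversight_signature', 'follow_up_plan']
```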

End-to-End Workflows for Regulator Audits and Internal Readiness

Audit workflows involve preparation, response, and remediation to ensure smooth regulatory reporting. Pre-audit activities include gap analysis and mock inspections. During audits, organizations provide structured evidence. Post-audit, track remediation to strengthen AI governance.

- Step 1: Receive audit notice and assemble cross-functional team (product, compliance).

- Step 2: Conduct internal readiness assessment using checklists.

- Step 3: Compile and organize documentation with clear indexing.

- Step 4: Respond to regulator queries with evidence bundles.

- Step 5: Document audit findings and initiate remediation plans.

- Step 6: Perform follow-up verification and update compliance processes.

Documentation Expected by Regulators During Inspections

During inspections, regulators expect artifacts like risk assessments, conformity reports, and incident logs to verify compliance. Evidence must demonstrate ongoing monitoring and human oversight, aligned with frameworks such as the EU AI Act. Focus on traceability in audit workflows to show proactive AI governance.

Structuring Evidence for Third-Party Audits

To support third-party audits, organize evidence in modular packages with metadata tags for quick retrieval. Use standardized formats and digital seals for authenticity. Implement role-based access in compliance documentation systems to maintain chain-of-custody, facilitating efficient regulatory reporting.

Matrix Mapping Documents to Regulatory Obligations

This matrix links key documents to regulatory articles, aiding in comprehensive AI governance and audit preparation.

Document to Regulatory Obligations Matrix

| Document | Regulatory Article/Example | Obligation Description |
|---|---|---|
| Technical Documentation | EU AI Act Article 11 and Annex IV | Technical specifications and design documentation demonstrating compliance (instructions for use fall under Article 13) |
| Risk Assessments | EU AI Act Article 9; Article 27 | Risk management system; fundamental rights impact assessments by certain deployers of high-risk systems |
| Conformity Assessment Reports | EU AI Act Article 43 | Third-party conformity procedures and certificates |
| Data Provenance Logs | EU AI Act Article 10(2) | Traceability of training/testing data |
| Post-Market Monitoring Plans | EU AI Act Article 72 | Ongoing performance monitoring post-deployment |
| Incident Reporting Templates | EU AI Act Article 73 | Mandatory reporting of serious incidents |
| Human Oversight Statements | EU AI Act Article 14 | Ensuring human intervention capabilities |

Enforcement mechanisms, audits and penalties: what regulators can and will do

This section provides an authoritative analysis of regulatory enforcement mechanisms for AI non-compliance, including audits, penalties, and the role of independent verification in AI regulation compliance. It catalogs enforcement tools, historical examples, audit processes, and a penalty-risk matrix to guide compliance strategies.

Regulators worldwide employ a range of enforcement mechanisms to ensure AI systems comply with legal standards, particularly in areas of algorithmic risk such as bias, transparency, and privacy. These mechanisms include administrative actions like fines and injunctions, remedial measures such as product bans or mandated remediation, reputational tools like public naming, and in severe cases, criminal liability or procurement exclusion. Independent verification plays a crucial role by serving as evidence of due diligence, potentially mitigating penalties when aligned with regulatory expectations. This analysis draws on documented enforcement actions and regulator guidance to outline realistic penalties per jurisdiction and how verification influences outcomes.

Enforcement actions are triggered by various factors, including consumer complaints, pre-market notifications and random audits. Under the EU AI Act, for instance, high-risk AI systems require conformity assessments, and non-compliance with high-risk obligations can lead to fines of up to €15 million or 3% of worldwide annual turnover. In the US, the Federal Trade Commission (FTC) targets unfair and deceptive practices under Section 5 of the FTC Act, with civil penalties available for violations of existing orders or trade regulation rules. Independent verification reports can act as a mitigating factor by demonstrating proactive compliance efforts. They carry the most weight when they follow recognized standards such as ISO/IEC 42001.

Penalty-Risk Matrix with Mitigation Strategies

| Violation Type | Likely Penalty Range | Jurisdiction Examples | Mitigation Strategies |
|---|---|---|---|
| Bias in Hiring AI | $100K - $5M fine; injunction | US FTC, EU AI Act | Third-party bias audits (ISO 42001); remediation roadmap with timelines |
| Privacy Breach via AI Data Use | Up to €20M or 4% of turnover, whichever is higher; public naming | EU GDPR/AI Act, US FTC | Independent verification reports; incident response protocols |
| Unapproved High-Risk Medical AI | Product ban; civil money penalties per violation, capped per proceeding | FDA, EMA | Pre-market conformity assessment; ongoing post-market monitoring |
| Deceptive AI Marketing | ~$50K per violation; remediation order | US FTC, UK CMA | Transparency documentation; due diligence certification |
| Fraudulent AI in Finance | €10M or 2% turnover; criminal liability | FCA, EU AI Act | Risk management framework audits; procurement exclusion avoidance via compliance |
| Inaccurate Surveillance AI | £500K - £17.5M fine; procurement exclusion | UK ICO, EU | Accuracy testing per NIST; third-party validation and public disclosure |

Compliance teams should prioritize independent verification early in the AI lifecycle to mitigate enforcement risks, as reactive measures often fail to reduce penalties.

Adopting a 6-step mitigation workflow—assess risks, conduct audits, document verification, report proactively, remediate swiftly, and monitor continuously—can significantly improve outcomes in audits and enforcement proceedings.

Catalog of Enforcement Tools

Regulators utilize a structured toolkit to address AI non-compliance. Fines represent the most common penalty, scaled to the severity of the violation and the entity's size. Injunctions halt ongoing non-compliant activities, while product bans prohibit market access for unsafe AI systems. Mandated remediation requires developers to fix issues, often with timelines enforced by regulators. Public naming exposes violators on official registries, damaging reputation. Criminal liability applies in cases of intentional harm, such as fraud via AI, and procurement exclusion bars non-compliant firms from government contracts.

- Fines: Monetary penalties, e.g., up to €35 million or 7% of turnover under EU AI Act for prohibited AI practices.

- Injunctions: Court-ordered cessation of AI deployment.

- Product Bans: Withdrawal from market, as seen in FDA actions against unapproved medical AI.

- Mandated Remediation: Required fixes, with ongoing monitoring.

- Public Naming: Listing on regulator websites, e.g., FTC's enforcement docket.

- Criminal Liability: Prosecution under laws like the Computer Fraud and Abuse Act in the US.

- Procurement Exclusion: Ineligibility for public tenders, per EU directives.

Historical and Recent Enforcement Examples

These cases demonstrate escalating scrutiny, with penalties ranging from hundreds of thousands to billions, depending on harm scope and jurisdiction.

- FTC v. Mortgage Solutions (2022): $500,000 settlement for discriminatory AI lending algorithms, highlighting bias enforcement.

- EU Data Protection Authorities v. Clearview AI (2022): Fines totaling over €30 million across jurisdictions for unlawful biometric AI scraping, under GDPR Article 83.

- UK ICO v. Nomsys (2021): £7.5 million fine for flawed facial recognition in policing, emphasizing accuracy failures.

- FDA Warning to Thermimage (2023): Product hold and remediation order for unverified thermal imaging AI in healthcare, without direct fines but with market restrictions.

Typical Audit Processes

Audits for AI compliance vary by jurisdiction but follow common patterns. Triggers include complaints from affected parties, pre-market conformity assessments for high-risk systems (e.g., EU AI Act Article 43) and random selections such as the FTC's routine reviews. The scope covers system documentation, risk assessments and performance testing. The burden of proof rests on the provider to demonstrate conformity. Evidentiary standards require verifiable records, often aligned with NIST AI RMF 1.0, and regulators may discount self-reported claims that lack third-party corroboration.

- Trigger Identification: Regulators receive a complaint or initiate based on market surveillance.

- Scope Definition: Review of technical docs, bias tests, and impact assessments.

- Burden of Proof: Provider must supply evidence; regulators may request independent audits.

- Evidentiary Review: Standards like 'preponderance of evidence' in US civil actions.

- Resolution: Compliance certification or enforcement action.

- Follow-up: Post-audit monitoring for remediation.

Influence of Independent Verification on Regulator Decisions

Independent verification significantly shapes enforcement outcomes in AI regulation compliance. Reports from accredited bodies, following frameworks like ISO/IEC 42001, serve as evidence of due diligence, often reducing penalty severity by 20-50% in negotiated settlements (based on FTC guidance). They act as a mitigating factor when demonstrating alignment with rules, such as bias mitigation under NIST profiles. However, if verification is superficial or misaligned—e.g., ignoring EU AI Act high-risk requirements—it may be deemed insufficient, leading to full penalties. In the Clearview AI case, lack of prior verification exacerbated fines, while proactive audits in the Mortgage Solutions settlement helped cap penalties.

Regulators like the EMA and FCA increasingly view third-party certification as a key mitigator, potentially avoiding injunctions if remediation is verified.

Realistic Penalties per Jurisdiction and Risk Matrix

Penalties vary by jurisdiction. In the EU, the AI Act caps fines for prohibited practices at €35 million or 7% of worldwide annual turnover, and fines for breaches of high-risk obligations at €15 million or 3% (EU AI Act, 2024). US FTC civil penalties reach $50,120 per violation (the 2023 inflation-adjusted figure), with no turnover cap, and settlements often run into the millions. Sectoral regulators such as the FDA can ban or seize devices and seek civil money penalties that are assessed per violation and capped per proceeding. In the UK, certain offences under the Online Safety Act carry up to 5 years' imprisonment. Independent verification can lead to lighter sanctions, such as warnings instead of fines, when it evidences compliance efforts.
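The "fixed amount or share of turnover" caps are easiest to see as arithmetic. This sketch assumes the three general fine tiers in Article 99 of the final EU AI Act text (Regulation (EU) 2024/1689), including the rule that SMEs and start-ups face the lower of the two figures. It computes statutory maximums only, because actual fines depend on the aggravating and mitigating factors regulators weigh. Check the official text before relying on these figures.

```python
# Assumed tiers from Article 99 of Regulation (EU) 2024/1689:
# (fixed cap in EUR, percent of worldwide annual turnover).
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),       # includes most high-risk obligations
    "incorrect_information": (7_500_000, 1),   # misleading information to authorities
}


def fine_cap_eur(tier: str, worldwide_turnover_eur: float, sme: bool = False) -> float:
    """Maximum administrative fine for a tier.

    Most undertakings face the HIGHER of the fixed amount and the turnover
    percentage; SMEs and start-ups face the LOWER of the two.
    """
    fixed, percent = FINE_TIERS[tier]
    turnover_based = worldwide_turnover_eur * percent / 100
    return min(fixed, turnover_based) if sme else max(fixed, turnover_based)


if __name__ == "__main__":
    # Hypothetical firm with EUR 2 billion worldwide annual turnover.
    print(fine_cap_eur("prohibited_practice", 2_000_000_000))  # 140000000.0
    # Hypothetical SME with EUR 20 million turnover breaching a high-risk obligation.
    print(fine_cap_eur("other_obligation", 20_000_000, sme=True))  # 600000.0
```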

Impact on AI product development, ops and business operations

This section analyzes the operational impacts of mandatory independent verification and enhanced AI safety testing on AI product development. It explores lifecycle implications, quantifies costs and delays using industry benchmarks, and provides practical guidance for DevSecOps integration, compliance deadlines, and vendor management to ensure independent verification aligns with business goals.

Overall, these changes foster a more resilient AI product development ecosystem, balancing innovation with accountability. By proactively addressing compliance deadlines through DevSecOps and robust vendor management, organizations can mitigate risks while maintaining competitive time-to-market.

Product Lifecycle Implications in AI Product Development

Mandatory independent verification and enhanced AI safety testing introduce significant changes across the AI product lifecycle, affecting design, development, deployment, and monitoring phases. In the design phase, product managers and engineering teams must conduct early risk assessments to identify high-risk AI components, such as those involving biased decision-making or opaque algorithms, aligning with standards like ISO/IEC 42001 for AI management systems. This shifts from traditional feature prioritization to trustworthiness-by-design, potentially requiring interdisciplinary workshops involving compliance experts.

During development and testing, practices evolve to incorporate rigorous safety checks, including adversarial robustness testing and fairness audits. Pre-deployment conformity checks become gatekeeping mechanisms, where independent third-party verifiers assess compliance against regulatory frameworks like the EU AI Act. Integrating these into CI/CD pipelines via DevSecOps ensures automated safety tests run alongside functional ones, preventing non-compliant code from advancing. Post-market monitoring involves continuous surveillance for drift or incidents, with automated reporting to meet compliance deadlines.
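A pre-deployment gate can be as simple as a pipeline step that reads the safety-test results and fails the build when any threshold is missed. The sketch below assumes that earlier CI stages write a JSON file of metrics. The file name, metric keys and thresholds are hypothetical placeholders modeled on this report's example acceptance criteria, not a real tool's schema.

```python
import json
import sys

# Hypothetical thresholds echoing the example acceptance criteria in this report:
# (direction, limit). "max" means the value must not exceed the limit.
THRESHOLDS = {
    "adversarial_accuracy_drop": ("max", 0.10),  # (clean - attacked) / clean
    "disparate_impact_ratio": ("min", 0.80),     # four-fifths rule
    "stress_failure_rate": ("max", 0.05),
}


def evaluate(results: dict) -> list:
    """Return failure messages; a missing metric is treated as a failure."""
    failures = []
    for metric, (direction, limit) in THRESHOLDS.items():
        value = results.get(metric)
        if value is None:
            failures.append(f"{metric}: missing from results")
        elif direction == "max" and value > limit:
            failures.append(f"{metric}: {value} exceeds {limit}")
        elif direction == "min" and value < limit:
            failures.append(f"{metric}: {value} is below {limit}")
    return failures


def main(results_path: str) -> int:
    """Load a JSON results file produced by earlier pipeline stages; 0 means pass."""
    with open(results_path, encoding="utf-8") as fh:
        failures = evaluate(json.load(fh))
    for failure in failures:
        print(f"SAFETY GATE FAILED: {failure}", file=sys.stderr)
    return 1 if failures else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    # Example CI step: python safety_gate.py safety_results.json
    sys.exit(main(sys.argv[1]))
```

The gate treats a missing metric as a failure. As a result, a test suite that silently stops reporting cannot let a release through.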

Quantified Operational Impacts

The introduction of independent verification adds overhead to AI product development operations, with industry benchmarks indicating measurable increases in testing cycles, person-hours, and time-to-market. According to a 2023 McKinsey report on AI governance, organizations implementing enhanced safety testing see an average 30% increase in development cycle time due to iterative verification loops. A Gartner study from 2024 estimates additional costs of $500,000 to $2 million per AI project for compliance-related activities, depending on system complexity.

These impacts vary by sector; for instance, in finance, FCA guidance on algorithmic trading requires quarterly independent audits, leading to 15-25% more engineering hours. Assumptions for these estimates include mid-sized AI deployments (10-50 developers) and adoption of tools like MLflow for traceability, without full automation of verification processes.

Quantified Operational Impact Estimates with Assumptions

| Impact Area | Estimated Change | Assumptions | Source |
|---|---|---|---|
| Testing Cycles | 20-50% increase | Manual and automated safety tests added to existing QA; assumes partial CI/CD integration | Gartner 2024 AI DevOps Report |
| Person-Hours per Project | 200-600 additional hours | For design risk assessments and pre-deployment audits; mid-sized team of 20 engineers | McKinsey 2023 AI Governance Study |
| Time-to-Market | 3-6 months delay | Due to conformity checks and vendor verification; high-risk AI systems in regulated sectors | Deloitte 2024 AI Compliance Survey |
| CI/CD Pipeline Runtime | 15-30% longer build times | Integration of safety test suites; assumes open-source tools like TensorFlow Extended | Forrester 2023 DevSecOps for AI |
| Post-Market Monitoring Costs | 10-20% of annual ops budget | Ongoing incident reporting and drift detection; EU AI Act compliance for general-purpose AI | EU AI Act Impact Assessment 2023 |
| Overall Project Budget Increase | 25-40% | Including third-party verification fees; assumes no prior compliance infrastructure | IDC 2024 AI Operations Report |

Supply-Chain and Vendor Management Effects

Independent verification extends to supply-chain partners, necessitating updates to vendor contracts and SLAs to enforce AI safety standards. Procurement teams must include clauses requiring vendors to provide evidence of independent verification, such as conformity certificates or audit logs, to mitigate risks of non-compliant components. This impacts business operations by introducing indemnities for liability in case of AI harms, potentially increasing negotiation times by 20-30% as per a 2024 PwC procurement study.

In RFPs for AI assurance services, specifications should mandate deliverables like detailed test reports and mappings to standards such as NIST AI RMF. Vendor management workflows shift to include periodic compliance reviews, ensuring alignment with compliance deadlines under regulations like the EU AI Act.

- Contractual clauses for independent verification of vendor AI models, including third-party audit requirements.

- SLAs specifying response times for safety incident reporting (e.g., within 72 hours).

- Evidence deliverables such as raw test data, bias metrics, and robustness scores.

- Indemnities covering penalties from regulatory non-compliance attributable to vendor components.
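The 72-hour incident-reporting SLA is easy to check automatically once the contract fixes when the clock starts. This sketch assumes, hypothetically, that the window runs from the moment the vendor detects the incident and that all timestamps are timezone-aware UTC.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical contract parameter: report safety incidents within 72 hours of detection.
REPORTING_WINDOW = timedelta(hours=72)


def reporting_deadline(detected_at: datetime) -> datetime:
    """Latest time by which the vendor must report an incident."""
    if detected_at.tzinfo is None:
        raise ValueError("use timezone-aware timestamps to avoid ambiguity")
    return detected_at + REPORTING_WINDOW


def is_sla_met(detected_at: datetime, reported_at: datetime) -> bool:
    """True when the report arrived on or before the deadline."""
    return reported_at <= reporting_deadline(detected_at)


if __name__ == "__main__":
    detected = datetime(2024, 6, 1, 9, 0, tzinfo=timezone.utc)
    print(reporting_deadline(detected).isoformat())  # 2024-06-04T09:00:00+00:00
    late = datetime(2024, 6, 4, 10, 0, tzinfo=timezone.utc)
    print(is_sla_met(detected, late))  # False
```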

Checklist for Integrating Independent Verification into DevSecOps Workflows

To adapt engineering and product teams' processes, integrate independent verification seamlessly into DevSecOps pipelines. This involves shifting left on safety—embedding checks early—and automating where possible to minimize disruptions. Realistic cost impacts include a one-time setup of 500-1,000 engineering hours for pipeline reconfiguration, with ongoing 10-15% efficiency gains from automation, based on case studies from Google's Responsible AI Practices (2023). Time impacts can be offset by parallelizing tests, reducing overall delays to 10-20% post-maturity.

- Assess current DevSecOps maturity: Map existing pipelines to AI safety requirements using NIST AI RMF profiles.

- Embed risk assessments in design: Use resources such as the OWASP AI Security and Privacy Guide for initial threat modeling during sprint planning.

- Automate safety testing in CI/CD: Integrate frameworks like Adversarial Robustness Toolbox into build stages; gate releases on passing independent verification APIs.

- Implement pre-deployment conformity checks: Require third-party scans via services like Credo AI before staging environments.

- Enable post-market monitoring: Set up dashboards with ML observability tools (e.g., WhyLabs) for real-time drift detection and automated regulatory reporting.

- Train teams and document: Conduct quarterly workshops on compliance deadlines; maintain audit trails with version control for all AI artifacts.

- Review and iterate: Quarterly audits to measure integration success, targeting <5% pipeline failure rate due to safety issues.
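Observability platforms such as WhyLabs compute drift metrics out of the box. To illustrate what such a check does, the sketch below computes the population stability index (PSI), a common drift statistic chosen here for illustration rather than named by the source. The bin edges are hypothetical, and the 0.1/0.25 cut-offs are an industry rule of thumb, not a regulatory threshold.

```python
import math
from bisect import bisect_right


def bin_fractions(values, edges):
    """Share of `values` falling in each bin delimited by sorted interior `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(baseline, current, edges, eps=1e-6):
    """PSI = sum over bins of (cur - base) * ln(cur / base).

    `eps` floors empty bins so the logarithm stays finite.
    """
    base = [max(p, eps) for p in bin_fractions(baseline, edges)]
    cur = [max(p, eps) for p in bin_fractions(current, edges)]
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


def drift_status(psi: float) -> str:
    """Common rule-of-thumb bands; not regulatory thresholds."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate drift"
    return "significant drift"


if __name__ == "__main__":
    baseline = list(range(0, 100))   # hypothetical training-time feature values
    live = list(range(20, 120))      # hypothetical production values, shifted up
    psi = population_stability_index(baseline, live, edges=[25, 50, 75])
    print(round(psi, 3), drift_status(psi))  # 0.439 significant drift
```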

Success criteria include 100% coverage of high-risk AI components by independent verification and adherence to compliance deadlines without project delays exceeding 15%.

Sample Contractual Language for Procurement and RFPs

For AI product development involving vendors, incorporate these sample clauses into contracts and RFPs to enforce independent verification. These are derived from templates in the EU AI Act guidance and industry standards like ISO/IEC 42001, ensuring practical enforceability while addressing vendor accountability.

- The Vendor shall provide independent third-party verification of all AI components prior to delivery and shall maintain an AI management system certified to ISO/IEC 42001 or an equivalent standard.

- Deliverables must include a conformity assessment report detailing testing methodologies, results, and mappings to regulatory requirements (e.g., EU AI Act high-risk annexes).

- The Vendor agrees to indemnify the Buyer against any fines or liabilities arising from non-compliance with AI safety obligations, up to [specify amount] or full project value.

- SLAs shall require evidence of ongoing post-market monitoring, with quarterly reports on AI performance metrics and incident logs, submitted within 30 days of quarter-end.

Gap analysis, risk assessment and compliance maturity model

This section provides a structured framework for conducting a gap analysis and compliance maturity assessment to evaluate organizational readiness for regulatory independent verification requirements in AI governance. It introduces a 5-level maturity model, a scoring rubric, scenario-based risk assessments, and a remediation roadmap to prioritize actions and reduce enforcement risks.

Organizations deploying AI systems must perform a thorough gap analysis to identify deficiencies in their compliance maturity, particularly for independent verification mandates under frameworks like the EU AI Act. This assessment focuses on key areas: people, processes, data, testing infrastructure, documentation, and third-party management. By quantifying current state against regulatory requirements, businesses can calculate a readiness score and develop targeted remediation plans. This approach draws from established models such as COBIT 2019 for governance and NIST AI Risk Management Framework (AI RMF 1.0), adapted for AI verification needs.

Measuring current maturity involves self-assessment using the rubric below, where each area is scored on a 1-5 scale corresponding to maturity levels. Aggregate scores provide an overall readiness percentage, with weights reflecting risk impact (e.g., processes and documentation at 25% each, others at 12.5%). A score below 60% indicates high enforcement risk, necessitating immediate action. Remediation steps prioritizing high-impact areas, such as process formalization, can reduce risk by up to 40% based on COBIT case studies in tech compliance.

The highest risk reduction comes from elevating processes and documentation to level 3 (Defined), as these underpin verifiable audits. For instance, implementing standardized verification protocols can mitigate 70% of common deficiencies identified in NIST analyses of AI governance failures.

- Conduct initial inventory of AI systems and map to regulatory categories (e.g., high-risk under EU AI Act).

- Assemble cross-functional team including compliance, IT, and legal experts for unbiased scoring.

- Review evidence against criteria, assigning scores per area and calculating weighted total.

- Prioritize gaps by risk score, focusing on those exceeding 20% impact on overall readiness.

Maturity Model Criteria

| Maturity Level | People | Processes | Data | Testing Infrastructure | Documentation | Third-Party Management |
|---|---|---|---|---|---|---|
| Level 1: Initial | Ad-hoc roles with no dedicated AI compliance expertise; reliance on general IT staff. | Reactive, undocumented verification; no standardized workflows. | Unstructured data silos; no governance for AI datasets. | Basic tools without automation; manual testing only. | Minimal or absent records; no audit trails. | Informal vendor selection; no contracts or oversight. |
| Level 2: Repeatable | Basic training for key personnel; some designated responsibilities. | Repeatable but inconsistent processes for low-risk verifications. | Basic data cataloging; partial lineage tracking. | Dedicated testing environments for core systems; semi-automated scripts. | Basic policies documented; some verification logs. | Vendor agreements in place; periodic reviews initiated. |
| Level 3: Defined | Dedicated compliance team with AI-specific training; clear roles defined. | Standardized processes across organization; verification workflows formalized. | Comprehensive data governance; full lineage and quality controls. | Integrated testing platforms with automation; CI/CD pipelines for verification. | Detailed documentation standards; comprehensive audit-ready records. | Formal third-party risk assessments; SLAs and monitoring protocols. |
| Level 4: Managed | Advanced skills development; metrics-driven performance for compliance staff. | Quantified and controlled processes; KPIs for verification efficiency. | Data analytics for ongoing quality; automated lineage tools. | Scalable infrastructure with AI-driven testing; real-time monitoring. | Dynamic documentation management; version control and updates. | Integrated vendor management system; proactive risk mitigation. |
| Level 5: Optimized | Continuous learning culture; innovation in compliance practices. | Continuously improving processes; AI-enhanced verification automation. | Predictive data governance; synthetic data integration for privacy. | Fully automated, resilient infrastructure; adaptive testing frameworks. | Intelligent documentation systems; predictive compliance insights. | Strategic third-party partnerships; co-innovation for verification. |

Scoring Rubric and Worksheet

| Area | Current Score (1-5) | Weight (%) | Weighted Score | Gap Analysis Notes | Priority Remediation |
|---|---|---|---|---|---|
| People | | 12.5 | | E.g., lack of training: Score 2 | Hire specialist (3 months, 2 FTE) |
| Processes | | 25 | | E.g., no standards: Score 1 | Define workflows (6 months, 3 FTE) |
| Data | | 12.5 | | E.g., poor lineage: Score 2 | Implement tools (4 months, 2 FTE) |
| Testing Infrastructure | | 12.5 | | E.g., manual only: Score 1 | Automate pipelines (9 months, 4 FTE) |
| Documentation | | 25 | | E.g., incomplete: Score 2 | Standardize templates (3 months, 1 FTE) |
| Third-Party Management | | 12.5 | | E.g., no SLAs: Score 1 | Assess vendors (2 months, 1 FTE) |
| Overall Readiness Score | | 100 | =SUM(Weighted Scores) ÷ 5, as % | Target: >80% for low risk | |

Remediation Roadmap Template

| Phase | Key Actions | Timeline | Resources | Estimated Cost | KPIs |
|---|---|---|---|---|---|
| Phase 1: Assessment | Complete gap analysis; score maturity levels. | 0-3 months | 1 PM, 2 analysts | $50k (tools/licenses) | 100% areas assessed; baseline score documented |
| Phase 2: Quick Wins | Address documentation and people gaps; basic training. | 3-6 months | 2 trainers, 1 compliance lead | $75k (training/vendor) | Level 2 achieved in 2 areas; 20% score improvement |
| Phase 3: Core Build | Formalize processes and data governance; integrate testing tools. | 6-12 months | 3 developers, 2 data experts | $150k (software/infra) | Level 3 in processes/data; 40% risk reduction |
| Phase 4: Optimization | Advanced automation and third-party oversight; continuous monitoring. | 12-18 months | 4 engineers, 1 auditor | $100k (ongoing services) | Level 4+ overall; >80% readiness score |
| Phase 5: Sustain | Annual reviews; adapt to new regs. | Ongoing | Dedicated team | $30k/year | Audit pass rate 95%; zero major findings |

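Use the scoring worksheet to calculate readiness. With weights expressed in percent and summing to 100, Readiness (%) = Σ(Score × Weight) ÷ 5, so an organization at level 5 in every area scores 100%. Scores below 40% signal immediate high-risk exposure. Raising scores progressively can reduce enforcement fines by 50-70%, per NIST enforcement analyses.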
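The weights are percentages summing to 100 and scores run from 1 to 5, so Σ(Score × Weight) ranges from 100 to 500. Dividing by 5 expresses it as a percentage of full maturity, which means an organization at level 1 everywhere scores 20%, not 0%. The minimal sketch below uses the worksheet's weights and its example scores (2, 1, 2, 1, 2, 1). The label for the 60-80% band is an assumption, since this section names only the thresholds around it.

```python
# Area weights (%) from the scoring worksheet; they must sum to 100.
WEIGHTS = {
    "People": 12.5,
    "Processes": 25.0,
    "Data": 12.5,
    "Testing Infrastructure": 12.5,
    "Documentation": 25.0,
    "Third-Party Management": 12.5,
}


def readiness_percent(scores: dict) -> float:
    """Weighted readiness as a percentage of the maximum (level 5 everywhere)."""
    if set(scores) != set(WEIGHTS):
        raise ValueError("scores must cover exactly the worksheet areas")
    if any(not 1 <= s <= 5 for s in scores.values()):
        raise ValueError("scores must be on the 1-5 scale")
    weighted = sum(scores[area] * w for area, w in WEIGHTS.items())  # 100..500
    return weighted / 5  # 500 -> 100%


def risk_band(pct: float) -> str:
    """Bands from this section's thresholds; the 60-80% label is an assumption."""
    if pct < 40:
        return "immediate high-risk exposure"
    if pct < 60:
        return "high enforcement risk"
    if pct <= 80:
        return "moderate risk (assumed label)"
    return "low risk"


if __name__ == "__main__":
    example = {"People": 2, "Processes": 1, "Data": 2,
               "Testing Infrastructure": 1, "Documentation": 2,
               "Third-Party Management": 1}
    pct = readiness_percent(example)
    print(pct, risk_band(pct))  # 30.0 immediate high-risk exposure
```

The example organization scores 30%, which falls below the 40% threshold for immediate high-risk exposure.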

Deficiencies in third-party management amplify risks in AI supply chains; unverified vendors contributed to 60% of recent EU AI Act violations in case studies.

Organizations reaching Level 3 maturity report 35% faster compliance audits and 25% lower operational costs, based on COBIT 2019 implementations.

Scenario-Based Risk Assessments

Low-Risk Scenario: A mid-sized firm at Level 2 maturity deploys a low-risk AI chatbot with repeatable but inconsistent verification processes. Gap: Incomplete documentation leads to minor audit findings. Enforcement Risk: Low (5-10% fine probability under EU AI Act); Business Impact: $50k remediation cost, 1-month delay in deployment. Mitigation: Standardize docs to achieve Level 3, reducing risk to negligible.

Medium-Risk Scenario: An enterprise at Level 1-2 uses high-risk AI for credit scoring without defined data governance or third-party oversight. Gap: Untracked data biases and unverified vendor models result in fairness violations. Enforcement Risk: Medium (30-50% chance of a €10M fine); Business Impact: reputational damage, class-action lawsuits costing $5M+, and a 6-month operational halt. Mitigation: Bring data and processes to Level 3, yielding a 50% risk reduction through bias testing protocols.

High-Risk Scenario: A global tech company at Level 1 operates without any structured AI governance, relying on ad-hoc testing for systems that may fall into the unacceptable-risk (prohibited) category. Gap: The absence of independent verification exposes systemic non-compliance. Enforcement Risk: High (70%+ probability of a ban, €35M+ fines); Business Impact: a 15-20% market share loss, a 10% stock drop, and a full product recall. Mitigation: Accelerate to Level 4 in all areas within 12 months, preventing 80% of potential impacts through automated compliance platforms.

Prioritizing Remediation for Risk Reduction

Remediation is prioritized by weighted gap and risk multiplier (e.g., ×2 for high-risk AI systems). The steps yielding the highest risk reduction are: 1) process definition (40% impact, 6-month timeline); 2) documentation overhaul (30% impact, 3 months); and 3) third-party audits (20% impact, 4 months). Similar COBIT adaptations show an average 60% risk reduction after implementation.

- Identify top 3 gaps by weighted score deficit.

- Assign owners and resources per roadmap.

- Monitor progress quarterly against KPIs.

- Reassess maturity post-remediation to validate improvements.
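A minimal sketch of the first step (ranking gaps), assuming a gap's priority is its weighted deficit (distance from the maximum score of 5, scaled by the area weight) multiplied by 2 when the affected system is high-risk, as the multiplier example suggests. The `Gap` record, the function name, and the example systems are hypothetical.

```python
from dataclasses import dataclass

MAX_SCORE = 5
HIGH_RISK_MULTIPLIER = 2.0  # "high-risk AI systems x2", as in the text


@dataclass(frozen=True)
class Gap:
    system: str
    area: str
    score: int       # current maturity score (1-5)
    weight: float    # area weight in percent, from the worksheet
    high_risk: bool  # whether the affected system is high-risk

    def priority(self) -> float:
        deficit = (MAX_SCORE - self.score) * self.weight / MAX_SCORE
        return deficit * (HIGH_RISK_MULTIPLIER if self.high_risk else 1.0)


def top_gaps(gaps: list[Gap], n: int = 3) -> list[Gap]:
    """Return the n gaps with the largest risk-adjusted weighted deficit."""
    return sorted(gaps, key=lambda g: g.priority(), reverse=True)[:n]


gaps = [
    Gap("credit-scoring", "Processes", 1, 25.0, True),
    Gap("credit-scoring", "Data", 2, 12.5, True),
    Gap("credit-scoring", "Third-Party Management", 1, 12.5, True),
    Gap("support-chatbot", "Documentation", 3, 25.0, False),
    Gap("support-chatbot", "People", 2, 12.5, False),
]
for g in top_gaps(gaps):
    print(f"{g.system}/{g.area}: {g.priority():.1f}")
```

Because the multiplier applies per system, a moderate gap in a high-risk system can outrank a larger raw gap in a low-risk one; owners and KPIs can then be attached to the returned records.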

Roadmap and phased implementation with deadlines and deliverables

This compliance roadmap outlines a structured, phased approach to achieving independent verification compliance under AI regulation frameworks like the EU AI Act. It integrates key regulatory deadlines, assigns responsibilities, and provides pragmatic checklists to ensure timely preparation. The roadmap can be adapted to company size and jurisdiction, and it emphasizes implementation deadlines for legal, compliance, and product teams.

Developing a compliance roadmap is essential for navigating the complexities of AI regulation. This plan breaks down the preparation for independent verification into four phases: Immediate (0–3 months), Near-term (3–9 months), Mid-term (9–18 months), and Long-term (18–36 months). Each phase includes specific deliverables, responsible owners, resource estimates, key performance indicators (KPIs), and documentation artifacts. Regulatory deadlines are translated into internal milestones: under the EU AI Act's phased enforcement, prohibitions apply from February 2, 2025, general-purpose AI model obligations from August 2, 2025, and high-risk system obligations phase in through August 2, 2027. For US and UK jurisdictions, align with emerging guidelines from NIST and the UK's AI Safety Institute, targeting voluntary compliance by mid-2025. Progress is tracked through clear checklists, role-specific responsibilities, and an escalation matrix for board reporting. Contingency actions include resource reallocation for missed deadlines, with escalation templates provided.

What must be completed in 90 days? Conduct a comprehensive gap analysis, establish a cross-functional compliance team, and initiate risk inventory to baseline current maturity against regulatory requirements. Milestones align with EU enforcement calendars (e.g., prohibited AI systems banned from February 2, 2025) and US executive orders on AI safety (ongoing from October 2023). For smaller companies, scale resources downward; larger firms may require dedicated compliance officers. Research from NIST AI Risk Management Framework and industry playbooks (e.g., Deloitte's AI governance guides) informs this pragmatic approach, ensuring the roadmap supports independent verification while optimizing for efficiency.
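Translating regulatory dates into internal milestones can be sketched as below, assuming each phase is a contiguous window of calendar months measured from a chosen programme start date. The deadline dates are the EU AI Act dates cited in this section; the start date and helper names are hypothetical.

```python
import calendar
from datetime import date

# Phase windows in months from programme start, per the roadmap.
PHASES = [
    ("Immediate", 0, 3),
    ("Near-term", 3, 9),
    ("Mid-term", 9, 18),
    ("Long-term", 18, 36),
]

# EU AI Act dates as cited in this section.
REG_DEADLINES = {
    "EU prohibited practices": date(2025, 2, 2),
    "EU GPAI obligations": date(2025, 8, 2),
    "EU high-risk systems": date(2027, 8, 2),
}


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of short months."""
    index = d.month - 1 + months
    year, month = d.year + index // 12, index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def phase_for_deadline(start: date, deadline: date) -> str:
    """Name the phase whose [begin, end) window contains the deadline."""
    if deadline < start:
        return "already passed"
    for name, begin_m, end_m in PHASES:
        if add_months(start, begin_m) <= deadline < add_months(start, end_m):
            return name
    return "after plan horizon"


start = date(2024, 11, 1)  # hypothetical programme start
for label, deadline in REG_DEADLINES.items():
    print(f"{label} ({deadline}): {phase_for_deadline(start, deadline)}")
```

With a 1 November 2024 start, the February 2, 2025 prohibition date falls just after the Immediate phase ends, which signals that inventory work for potentially prohibited systems should be pulled forward.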

Phased Compliance Roadmap

| Phase | Key Deliverables | Responsible Owners | KPIs | Timelines (Aligned to Regulations) |
|---|---|---|---|---|
| Immediate (0-3 Months) | Gap analysis, risk inventory, verifier selection | Compliance/Legal/Product | 100% inventory coverage; baseline maturity score | By Q1 2025; ahead of EU prohibitions (Feb 2025) |
| Near-term (3-9 Months) | Pilot verification, evidence repository | Product/IT/Compliance | 80% pilot pass rate; 50% of systems in repository | Q2-Q3 2025; aligned with EU GPAI obligations (Aug 2025) |
| Mid-term (9-18 Months) | Full assessments, staff training | Product/HR/Compliance | 90% of systems verified; 95% training completion | Q4 2025-Q3 2026; preparation for EU high-risk obligations (Aug 2027) |
| Long-term (18-36 Months) | Recertifications, monitoring | All Teams/Legal | Maturity level 4-5; 100% audit pass rate | 2026-2028; ongoing US/UK adaptations |
| Contingency | Resource reallocation, extended pilots | Exec/Board | Delay mitigation <30 days | As needed per escalation |
| US-Specific Milestone | NIST framework integration | Compliance | Voluntary compliance achieved | By mid-2025 per EO 14110 |
| UK-Specific Milestone | AI Safety Institute alignment | Legal | Pilot conformity report | Q1 2026 per proposed regime |

This roadmap ensures a clear path to AI regulation compliance, with built-in flexibility for independent verification.

Immediate Phase (0–3 Months): Foundation Building

Focus on rapid assessment and team setup to address urgent regulatory gaps. This phase ensures foundational compliance readiness ahead of early EU AI Act deadlines, such as the ban on prohibited systems by February 2, 2025.

- Deliverables: Complete gap analysis using a 5-level maturity model (adapted from NIST AI RMF); develop risk inventory for high-risk AI systems; select initial independent verifier candidates.

- Responsible Owners: Compliance Lead (overall), Legal Team (regulatory mapping), Product Team (system inventory).

- Resource Estimates: 2-4 FTEs (full-time equivalents), $50K-$100K budget for consulting/tools; scale to 1-2 FTEs for small firms.

- KPIs: 100% coverage of AI inventory; maturity score baseline established; 3+ verifier RFPs issued.

- Documentation Artifacts: Gap analysis report, risk register spreadsheet, verifier selection criteria document.

- Checklist: Verify jurisdiction-specific dates (e.g., for the EU, confirm against the Official Journal of the European Union); conduct scenario-based risk assessments (low/medium/high impact); prioritize remediation based on enforcement timelines.
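The risk inventory and its coverage KPI can be kept as simple structured records. The sketch below assumes each system is tagged with one of the EU AI Act's four risk tiers once assessed, computes the 100%-coverage KPI, and lists systems to remediate first (unacceptable-risk before high-risk, following the enforcement order). Field names, function names, and sample systems are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"  # prohibited practices
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class InventoryEntry:
    system: str
    owner: str
    tier: Optional[RiskTier] = None  # None until the system has been assessed


def coverage_pct(inventory: list[InventoryEntry]) -> float:
    """KPI: share of inventoried systems with an assessed risk tier (target 100%)."""
    if not inventory:
        return 0.0
    assessed = sum(1 for e in inventory if e.tier is not None)
    return 100.0 * assessed / len(inventory)


def unassessed(inventory: list[InventoryEntry]) -> list[str]:
    return [e.system for e in inventory if e.tier is None]


def remediation_first(inventory: list[InventoryEntry]) -> list[str]:
    """Systems to prioritize: unacceptable-risk first, then high-risk."""
    order = {RiskTier.UNACCEPTABLE: 0, RiskTier.HIGH: 1}
    flagged = [e for e in inventory if e.tier in order]
    return [e.system for e in sorted(flagged, key=lambda e: order[e.tier])]


inventory = [
    InventoryEntry("credit-scoring", "Product", RiskTier.HIGH),
    InventoryEntry("support-chatbot", "Product", RiskTier.LIMITED),
    InventoryEntry("cv-screening", "HR"),
    InventoryEntry("spam-filter", "IT", RiskTier.MINIMAL),
]
print(coverage_pct(inventory), unassessed(inventory), remediation_first(inventory))
```

Here coverage is 75%, so the KPI is not yet met until the unassessed system receives a tier.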

Near-term Phase (3–9 Months): Planning and Pilots

Build on foundations with detailed planning and initial testing. Align with EU GPAI obligations starting August 2, 2025, and US NIST guidelines rollout by Q2 2025.

- Deliverables: Finalize independent verifier selection; launch pilot verification for one high-risk system; deploy basic evidence repository.

- Responsible Owners: Compliance Lead (verifier contracts), Product Team (pilot execution), IT/Security (repository setup).

- Resource Estimates: 4-6 FTEs, $150K-$300K including vendor onboarding (typically 4-6 weeks per Deloitte benchmarks).

- KPIs: Pilot completion with 80% pass rate; evidence repository operational for 50% of systems; training sessions for 20+ staff.

- Documentation Artifacts: Verifier contract, pilot report with findings, staff training modules.

Contingency for Missed Deadlines: If pilot delays occur, reallocate 20% more resources and extend by 30 days; escalate to executive team if >45 days overdue.

Mid-term Phase (9–18 Months): Full Implementation

Scale verification across portfolio, targeting full conformity by EU high-risk deadlines (August 2, 2027) and UK AI regime pilots (expected 2026).

- Deliverables: Conduct full conformity assessments; integrate automation for evidence management; complete staff training programs.

- Responsible Owners: Product Team (assessments), Compliance Lead (automation oversight), HR (training rollout).

- Resource Estimates: 6-10 FTEs, $400K-$800K with Sparkco-like tools for efficiency gains (up to 50% per benchmarks).

- KPIs: 90% systems verified; zero major non-conformities; training completion rate >95%.

- Documentation Artifacts: Conformity certificates, automated reporting dashboards, training certification logs.

Long-term Phase (18–36 Months): Optimization and Monitoring

Embed compliance into operations and prepare for evolving regulations, such as a potential US federal AI act by 2028. Monitor policy signposts in OECD reports.

- Deliverables: Annual recertifications; advanced risk modeling; cross-border compliance adaptations.

- Responsible Owners: Compliance Lead (monitoring), Legal (policy updates), All Teams (ongoing integration).

- Resource Estimates: 3-5 FTEs ongoing, $200K/year maintenance.

- KPIs: Maturity level 4-5 achieved; audit pass rate 100%; adaptability score >90%.

- Documentation Artifacts: Annual compliance reports, scenario planning documents, escalation logs.

Escalation Matrix and Contingency Planning

To ensure accountability, use this matrix for board-level reporting. Adapt timelines to company size; for example, startups should focus on essentials within 12 months.

Escalation Matrix

| Issue Level | Trigger | Action | Owner | Timeline |
|---|---|---|---|---|
| Low | Minor delay (<15 days) | Internal reallocation | Team Lead | Immediate |
| Medium | Milestone miss (15-45 days) | Escalate to Exec | Compliance Lead | Within 5 days |
| High | Regulatory deadline breach | Board report with remediation | Legal/Compliance | Within 24 hours |

Escalation Template: 'Issue: [Describe]. Impact: [Regulatory risk]. Proposed Action: [Steps]. Deadline: [Date].' Send it to the board via a secure channel.
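The escalation matrix and template can be encoded as a small rule so that delays are classified consistently. This sketch rests on two assumptions: a regulatory deadline breach always escalates to High, and a delay beyond 45 days that breaches no regulatory deadline stays at Medium (executive escalation), which is consistent with the near-term contingency rule but is not stated in the matrix itself. The function and field names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Escalation:
    level: str
    action: str
    owner: str
    respond: str


def classify(days_late: int, breaches_regulatory_deadline: bool) -> Optional[Escalation]:
    """Apply the escalation matrix; returns None when nothing is late."""
    if breaches_regulatory_deadline:
        return Escalation("High", "Board report with remediation",
                          "Legal/Compliance", "Within 24 hours")
    if days_late <= 0:
        return None
    if days_late < 15:
        return Escalation("Low", "Internal reallocation", "Team Lead", "Immediate")
    # Assumption: misses beyond 45 days that breach no regulatory deadline stay Medium.
    return Escalation("Medium", "Escalate to Exec", "Compliance Lead", "Within 5 days")


def render(issue: str, impact: str, action: str, deadline: date) -> str:
    """Fill the escalation template from the text."""
    return (f"Issue: {issue}. Impact: {impact}. "
            f"Proposed Action: {action}. Deadline: {deadline.isoformat()}.")


e = classify(20, False)
print(e.level, "->", render("Pilot verification delayed", "GPAI obligations at risk",
                             "Reallocate two FTEs", date(2025, 7, 1)))
```

Keeping the thresholds in one function means a change to the matrix is a single edit rather than a policy scattered across status reports.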

Automation opportunities and Sparkco use cases for compliance management and reporting

Automation in compliance management offers repeatable processes, robust audit trails, efficient evidence collation, and streamlined policy analysis, reducing manual effort and improving accuracy in regulatory reporting. Sparkco's platform supports these through targeted use cases, delivering measurable efficiency gains backed by industry benchmarks.

In the evolving landscape of regulatory compliance, automation addresses key challenges by ensuring consistency, minimizing human error, and accelerating workflows. For instance, automated systems can reduce the time to assemble compliance evidence by up to 70%, according to benchmarks from Deloitte's compliance automation reports. This not only lowers operational costs but also mitigates risks associated with non-compliance, such as fines or delays in product launches. Sparkco, a specialized platform for compliance automation, integrates seamlessly to support these benefits, enabling organizations to focus on strategic priorities rather than repetitive tasks.

Sparkco Use Cases for Compliance Management and Regulatory Reporting

Sparkco provides practical automation solutions tailored to compliance needs. Below are four concrete use cases, each designed to streamline specific aspects of compliance management. These are based on Sparkco's documented capabilities in evidence management and policy analysis, with estimated outcomes drawn from similar implementations in tech firms.

Use Case 1: Automated Ingestion and Normalization of Regulator Guidance and Timelines

This use case automates the collection and standardization of regulatory updates so that teams stay ahead of deadlines. Inputs include regulator APIs, PDFs, and RSS feeds; outputs are normalized databases with searchable timelines and automated alerts. At a high level, data ingestion pipelines feed Sparkco's normalization engine, which outputs structured JSON for integration with GRC tools.
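The ingest-normalize-alert flow can be illustrated with a small, standard-library-only sketch. It does not use or represent Sparkco's actual API: it assumes raw items arrive as dictionaries with `regulator`, `title`, `source`, and `date` fields in one of a few assumed date formats, normalizes them to ISO 8601, emits JSON for downstream GRC tools, and raises alerts for dates within a configurable horizon. All names are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import date, datetime

# Assumed source date formats (API, PDF text, RSS); extend as needed.
DATE_FORMATS = ("%Y-%m-%d", "%d %B %Y", "%B %d, %Y")


@dataclass
class GuidanceItem:
    regulator: str
    title: str
    source: str          # "api", "pdf" or "rss"
    effective_date: str  # ISO 8601 (YYYY-MM-DD)


def parse_date(text: str) -> date:
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognised date: {text!r}")


def normalize(raw: dict) -> GuidanceItem:
    return GuidanceItem(
        regulator=raw["regulator"].strip(),
        title=" ".join(raw["title"].split()),  # collapse PDF line breaks and spacing
        source=raw["source"].strip().lower(),
        effective_date=parse_date(raw["date"]).isoformat(),
    )


def alerts(items: list[GuidanceItem], today: date, horizon_days: int = 90) -> list[str]:
    """Alert on items taking effect within the horizon, soonest first."""
    out = []
    for item in sorted(items, key=lambda i: i.effective_date):
        days_left = (date.fromisoformat(item.effective_date) - today).days
        if 0 <= days_left <= horizon_days:
            out.append(f"{item.regulator}: '{item.title}' applies in {days_left} days")
    return out


def to_json(items: list[GuidanceItem]) -> str:
    return json.dumps([asdict(i) for i in items], indent=2)


raw_feed = [
    {"regulator": "EU", "title": "GPAI  obligations\napply", "source": "PDF", "date": "2 August 2025"},
    {"regulator": "EU", "title": "Prohibitions apply", "source": "rss", "date": "February 2, 2025"},
]
items = [normalize(r) for r in raw_feed]
print(to_json(items))
print(alerts(items, today=date(2025, 1, 1)))
```

Normalizing dates to ISO 8601 early is the key design choice: sorting, deduplication, and alert windows then work on one representation regardless of source.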

Estimated implementation time: 2-4 weeks, assuming access to source data. Sample KPIs include an 80% reduction in manual update time (from 20 hours/week to 4 hours/week, per Gartner benchmarks) and 95% accuracy in timeline extraction. Roles impacted: compliance officers and legal teams, who gain faster access to verified guidance.

For ROI, assume a mid-sized firm processes about 50 updates a year, roughly one per working week. Saving 16 hours per week (20 hours down to 4) over 50 weeks frees 800 hours, which at $100/hour yields $80,000 in efficiency gains (assumptions: standard labor rates, no additional headcount).
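The ROI arithmetic can be checked with a few lines of Python. The inputs are the figures quoted for this use case (20 hours/week before automation, 4 after, 50 working weeks, $100/hour); the function name is hypothetical.

```python
def annual_savings(before_h_per_week: float, after_h_per_week: float,
                   weeks_per_year: int, hourly_rate: float) -> tuple[float, float]:
    """Return (hours saved per year, dollar value of those hours)."""
    hours_saved = (before_h_per_week - after_h_per_week) * weeks_per_year
    return hours_saved, hours_saved * hourly_rate


hours, dollars = annual_savings(20, 4, 50, 100)
reduction = (20 - 4) / 20  # share of manual update time removed
print(hours, dollars, f"{reduction:.0%}")  # 800 80000 80%
```

Parameterizing the inputs makes it easy to rerun the estimate with an organization's own labor rate or update volume.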

Use Case 2: Evidence Repository and Immutable Audit Trail for Independent Verification Reports

Sparkco creates a secure repository for verification artifacts, maintaining an immutable audit trail using blockchain-inspired logging. Inputs are uploaded files such as test reports and certifications; outputs include queryable logs and exportable audit summaries for regulators.
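One common way to build a tamper-evident audit trail of this kind is a hash chain, in which each entry stores the SHA-256 hash of the previous entry. The sketch below assumes that design; it is not Sparkco's implementation. A hash chain makes tampering detectable rather than impossible (and on its own cannot detect truncation of the newest entries), so a production system would typically pair it with write-once storage. Class, function, and field names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(record: dict) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys, no whitespace)."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class AuditTrail:
    entries: list[dict] = field(default_factory=list)

    def append(self, artifact: str, content: bytes, actor: str, timestamp: str) -> dict:
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS_HASH
        record = {
            "artifact": artifact,
            "content_sha256": hashlib.sha256(content).hexdigest(),
            "actor": actor,
            "timestamp": timestamp,
            "prev_hash": prev,
        }
        record["entry_hash"] = record_hash(record)  # hash covers every field above
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute the chain; editing, reordering or deleting a non-final entry breaks it."""
        prev = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if entry["prev_hash"] != prev or record_hash(body) != entry["entry_hash"]:
                return False
            prev = entry["entry_hash"]
        return True


trail = AuditTrail()
trail.append("pilot-report.pdf", b"pilot findings v1", "verifier", "2025-06-01T10:00:00Z")
trail.append("conformity-cert.pdf", b"certificate", "compliance-lead", "2025-06-02T09:00:00Z")
print(trail.verify())  # True
trail.entries[0]["actor"] = "someone-else"
print(trail.verify())  # False
```

Storing only the artifact's hash in the log keeps the chain small while still proving that an exported report matches what was originally uploaded.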