Enterprise Agent Documentation Best Practices 2025

Explore comprehensive agent documentation practices for enterprise AI systems, focusing on security, transparency, and compliance in 2025.

Executive Summary: Agent Documentation Practices

In AI-driven enterprises, agent documentation practices have become crucial for security, transparency, and operational efficiency. This article covers the practices enterprises adopt in 2025 to manage and document agent interactions. The key elements are distinct agent identity, zero-trust security, and transparent governance, each of which addresses the complexity and compliance requirements of modern AI systems.

Overview of Agent Documentation Practices: Effective documentation involves assigning unique identities to each agent, employing stringent governance mechanisms, and utilizing micro-segmentation to ensure zero-trust security. This includes applying least-privilege access, regularly rotating credentials, and employing service accounts over dummy or shared accounts. Such practices provide a secure and trackable environment for agent operations.

Importance of Security and Transparency: With enterprises increasingly relying on AI systems, maintaining security and transparency is paramount. Implementing zero-trust principles ensures that agents are continuously verified and confined strictly to their access permissions. This prevents unauthorized data access and potential breaches, enhancing the overall security posture.

High-level Benefits for Enterprises: Adopting these documentation practices offers numerous benefits, including improved compliance with regulatory standards, reduced risk of data breaches, and streamlined operational workflows. Enterprises can leverage these practices to build trust with stakeholders, ensure accountability, and drive innovation securely.

from langchain.memory import ConversationBufferMemory
from langchain.agents import AgentExecutor

# Keep the full chat history and return it as message objects.
memory = ConversationBufferMemory(
    memory_key="chat_history",
    return_messages=True,
)

# agent and tools are assumed to be built elsewhere. AgentExecutor takes them
# directly; it has no from_lang_chain constructor and no vector_store argument.
agent_executor = AgentExecutor(agent=agent, tools=tools, memory=memory)

The above Python snippet demonstrates memory management and agent orchestration using LangChain's classic agent API. The buffer carries context across the turns of a conversation. A vector store such as Pinecone is not passed to the executor; it can be exposed to the agent through a retrieval tool.

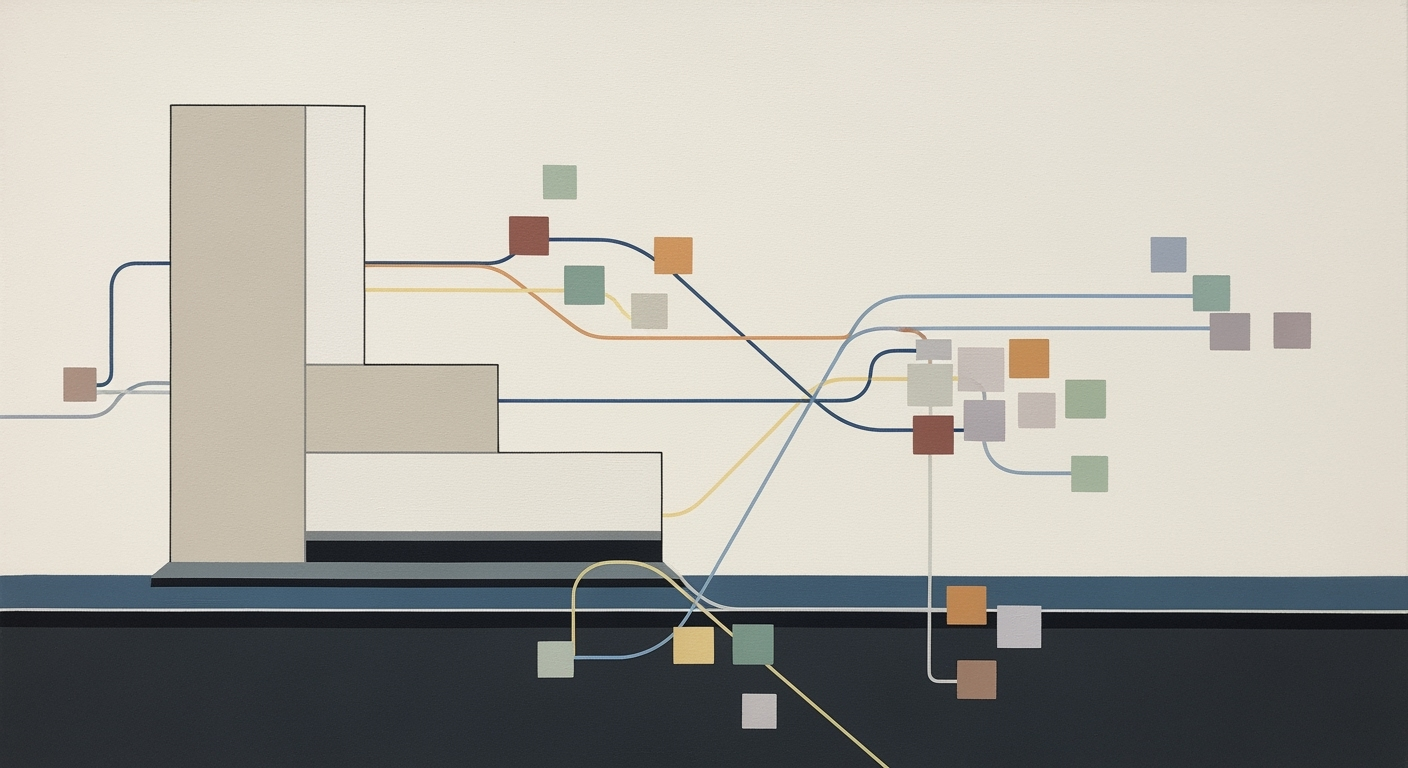

Architecture Diagram: The architecture involves agents interfacing with a vector database (Pinecone) for data retrieval and utilizing conversation buffers for maintaining context across interactions, all orchestrated within a secure, zero-trust framework.

By implementing these practices, enterprises not only fortify their AI systems against potential threats but also enhance the transparency and reliability of their operations. This article provides a detailed exploration of these techniques, complete with code examples, to equip developers with the necessary tools and knowledge to implement robust agent documentation strategies.

Business Context: Agent Documentation Practices

In the rapidly evolving landscape of enterprise AI, robust documentation practices for AI agents are not just beneficial—they are essential. The deployment of AI agents across various business processes introduces new dimensions of complexity, compliance, and risk management, making comprehensive documentation a critical component of successful AI integration.

Need for Robust Documentation in Enterprise AI

Enterprises are increasingly relying on AI to streamline operations, enhance decision-making, and drive innovation. However, the sophisticated nature of AI systems, particularly those employing agents, necessitates detailed documentation to ensure clarity and operational transparency. Effective documentation facilitates:

- Clear understanding of agent functionalities and their integration points within business processes.

- Streamlined onboarding of new developers and seamless knowledge transfer.

- Efficient troubleshooting and maintenance, reducing downtime and improving reliability.

Impact of AI on Business Processes

AI agents are transforming business processes by automating routine tasks, providing real-time insights, and enabling predictive analytics. However, these capabilities come with the responsibility of managing and documenting the interactions and decisions made by AI agents. This documentation includes:

- Agent orchestration patterns that define how agents interact with each other and with external systems.

- Tool calling patterns and schemas to ensure consistent and secure interaction with various APIs and services.

- Memory management strategies to handle multi-turn conversations efficiently.

from langchain.memory import ConversationBufferMemory

# Buffer that keeps the full chat history as message objects
memory = ConversationBufferMemory(
    memory_key="chat_history",
    return_messages=True,
)

Compliance and Risk Management

With the rise of regulations around data privacy and AI ethics, having robust documentation helps in demonstrating compliance and managing risks. Key compliance strategies include:

- Maintaining distinct agent identities and governance structures to ensure accountability.

- Implementing zero-trust security models and micro-segmentation to protect sensitive data.

- Conducting continuous audits and version control to track changes and mitigate risks.

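# Illustrative pseudocode: LangChain.agent and langchain.protocols.MCP are
# placeholder names, not part of LangChain's published API.
from langchain import LangChain
from langchain.protocols import MCP

agent = LangChain.agent(
    name="EnterpriseAI",
    protocols=[
        MCP(schema="mcp-schema", protocol_version="1.0"),
    ],
)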

Vector Database Integration Example

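import { Pinecone } from '@pinecone-database/pinecone';

// Read the key from the environment instead of hardcoding it.
const pinecone = new Pinecone({ apiKey: process.env.PINECONE_API_KEY });

// 'agent-docs' is a placeholder index name.
const index = pinecone.index('agent-docs');

async function queryVectorDatabase(queryVector) {
  // Return the 10 nearest neighbours of the query vector.
  const response = await index.query({ vector: queryVector, topK: 10 });
  return response.matches;
}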

Agent Orchestration Pattern

An architecture diagram (not included here) would typically depict how multiple AI agents collaborate, with communication lines representing tool calling and memory sharing.

By adhering to these best practices, enterprises can leverage AI agents effectively while minimizing risks and ensuring compliance, ultimately driving business value through enhanced AI capabilities.

Technical Architecture

Implementing best practices for agent documentation requires a robust technical architecture that emphasizes secure, efficient, and scalable operations. This section outlines the key components of such an architecture, focusing on distinct agent identity and governance, zero-trust security principles, and the use of micro-segmentation and service meshes.

Distinct Agent Identity and Governance

Enterprise AI systems need a clear governance structure, starting with a distinct identity for each agent. Implement that identity as a unique service account or certificate, and never reuse dummy or human accounts. The Python snippet below is illustrative pseudocode for registering an agent with its own identity; langchain.identity, ServiceAccount, and the identity argument are placeholder names, not a published LangChain API:

# Illustrative pseudocode: placeholder module and class names.
from langchain.agents import Agent
from langchain.identity import ServiceAccount

# One service account per agent, scoped to a single role
agent_identity = ServiceAccount(name="Agent123", role="data_processor")
agent = Agent(identity=agent_identity)

Proper governance also involves assigning roles with the principle of least privilege, regularly rotating credentials, and avoiding hardcoded API keys. Implementing these practices ensures that each agent operates within its defined boundaries and complies with organizational policies.
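As a minimal sketch of two of these rules, the Python below reads a key from the environment and flags credentials that are due for rotation. The variable name AGENT_API_KEY and the 30-day window are assumptions chosen for illustration, not values from any particular platform.

```python
import os
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_CREDENTIAL_AGE = timedelta(days=30)  # assumed rotation window


def load_api_key(env_var: str = "AGENT_API_KEY") -> str:
    """Read the agent's key from the environment instead of source code."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"{env_var} is not set")
    return key


def needs_rotation(issued_at: datetime, now: Optional[datetime] = None) -> bool:
    """Return True once a credential is older than the rotation window."""
    now = now or datetime.now(timezone.utc)
    return now - issued_at > MAX_CREDENTIAL_AGE
```

A scheduled job could call needs_rotation for every service account and open a ticket, or trigger automated rotation, for each one that returns True.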

Zero-Trust Security Principles

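Adopting a zero-trust security model is crucial for safeguarding agent operations. Under this model no entity, inside or outside the network, is trusted by default. Instead, every request an agent makes is checked against its identity and permissions at the time of the request. The TypeScript sketch below illustrates short-lived tokens and IP-aware policies; crewai/security and ZeroTrustAgent are placeholder names (CrewAI itself is a Python framework), and userCredentials and requestContext are assumed to come from the incoming request:

// Illustrative pseudocode: placeholder module and class names.
import { ZeroTrustAgent } from 'crewai/security';

const agent = new ZeroTrustAgent({
  tokenExpiration: '15m',            // tokens expire after 15 minutes
  ipWhitelist: ['192.168.1.0/24'],   // only this subnet may connect
});

agent.verifyAccess(userCredentials, requestContext);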

By integrating continuous verification and contextual access controls, agents can be confined strictly to permitted data and systems, reducing the risk of unauthorized access.
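The same two checks can be sketched with only the Python standard library. This is a simplified illustration under stated assumptions: the shared secret, the token layout (agent_id:expiry:signature), and the function names are hypothetical, and a production system would normally rely on an established token format and a secret manager.

```python
import hashlib
import hmac
import ipaddress
import time

SECRET = b"demo-secret"       # hypothetical; load from a secret manager in practice
TOKEN_TTL_SECONDS = 15 * 60   # 15-minute token lifetime
ALLOWED_NETWORK = ipaddress.ip_network("192.168.1.0/24")


def _sign(payload: str) -> str:
    return hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(agent_id: str, now: float) -> str:
    """Create a token of the form agent_id:expiry:signature."""
    payload = f"{agent_id}:{int(now) + TOKEN_TTL_SECONDS}"
    return f"{payload}:{_sign(payload)}"


def verify_access(token: str, source_ip: str, now: float) -> bool:
    """Accept only an untampered, unexpired token from an allowed address."""
    try:
        agent_id, expiry, signature = token.rsplit(":", 2)
    except ValueError:
        return False
    if not hmac.compare_digest(signature, _sign(f"{agent_id}:{expiry}")):
        return False
    if now >= int(expiry):
        return False
    return ipaddress.ip_address(source_ip) in ALLOWED_NETWORK


token = issue_token("agent-123", now=time.time())
```

Because verify_access runs on every request, a stolen token stops working after at most 15 minutes, and it is rejected immediately when presented from outside the allowed subnet.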

Micro-Segmentation and Service Meshes

Micro-segmentation and service meshes further enhance security and operational efficiency by isolating agent interactions. Tools like Istio can be used to implement a service mesh that manages communication between agents. Here's a conceptual architecture diagram:

Diagram Description: The architecture diagram shows multiple agents, each encapsulated within its micro-segment, communicating through a centralized service mesh. The service mesh enforces policies and monitors traffic between segments, ensuring secure and efficient data flow.

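The Python snippet below sketches the policy model in pseudocode; langchain.network and ServiceMesh are placeholder names, not a published LangChain API. In practice the mesh (for example Istio) enforces these rules at the network layer:

# Illustrative pseudocode: placeholder module and class names.
from langchain.network import ServiceMesh

mesh = ServiceMesh(policy="strict")   # strict mode, assumed to mean default-deny
agent_a = mesh.add_agent("AgentA")
agent_b = mesh.add_agent("AgentB")
mesh.set_policy(agent_a, agent_b, "allow")  # permit AgentA to reach AgentB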
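The rule set behind micro-segmentation can be modelled in a few lines of plain Python. The sketch below is only a model of a default-deny allow-list, with a hypothetical SegmentPolicy class; it does not configure a real service mesh.

```python
class SegmentPolicy:
    """Default-deny communication policy between named agents."""

    def __init__(self):
        # Set of (source, destination) pairs that are explicitly permitted
        self._allowed = set()

    def allow(self, source: str, destination: str) -> None:
        """Permit one direction of traffic; the reverse stays denied."""
        self._allowed.add((source, destination))

    def is_allowed(self, source: str, destination: str) -> bool:
        """Anything not explicitly allowed is denied."""
        return (source, destination) in self._allowed


policy = SegmentPolicy()
policy.allow("AgentA", "AgentB")
```

Keeping rules directional means that allowing AgentA to call AgentB does not silently let AgentB call AgentA, which is the property a documented segmentation policy should make explicit.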

Integration with Vector Databases

Vector databases like Pinecone or Weaviate are integral for managing large-scale data interactions. Here's how you can integrate an agent with Pinecone in Python:

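from langchain_pinecone import PineconeVectorStore

# embeddings is any LangChain embeddings object built elsewhere. The API key is read
# from the PINECONE_API_KEY environment variable; "agent-docs" is a placeholder name.
vector_store = PineconeVectorStore(index_name="agent-docs", embedding=embeddings)

# Expose the store to an agent as a retriever
retriever = vector_store.as_retriever()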

Memory Management and Multi-Turn Conversations

Effective memory management is critical for handling multi-turn conversations. The following Python snippet uses LangChain to manage conversation history:

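from langchain.memory import ConversationBufferMemory
from langchain.agents import AgentExecutor

memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

# agent and tools are assumed to be defined elsewhere; AgentExecutor requires both.
executor = AgentExecutor(agent=agent, tools=tools, memory=memory)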

Agent Orchestration Patterns

Finally, effective agent orchestration ensures seamless operation across multiple agents. A protocol such as the Model Context Protocol (MCP) standardizes how agents reach tools and data, which makes their activities easier to coordinate. The JavaScript below is illustrative pseudocode; the MCP class and its define and execute methods are placeholder names, not a published LangGraph API:

// Illustrative pseudocode: placeholder class and method names.
import { MCP } from 'langgraph';

const mcp = new MCP();

mcp.define('AgentOrchestration', (context) => {
  // Orchestration logic
});

mcp.execute('AgentOrchestration', { agents: ['Agent1', 'Agent2'] });

By following these technical architecture guidelines, developers can ensure that their agent systems are secure, efficient, and scalable, aligning with the best practices of 2025.

Implementation Roadmap for Agent Documentation Practices

This section outlines a step-by-step guide for developers to implement best practices in agent documentation. By leveraging appropriate tools and technologies, enterprises can achieve robust security, operational transparency, and efficient agent management.

Step-by-Step Guide to Implementing Practices

- Define Agent Identity and Governance
  - Assign each AI agent a unique service account.
  - Apply least-privilege access and rotate credentials regularly.
  - Avoid hardcoding API keys; use environment variables instead.

- Integrate Zero-Trust Security
  - Implement continuous verification and contextual access controls.
  - Use short-lived tokens and IP-aware policies for access management.

- Utilize Advanced Tools and Frameworks
  - Adopt frameworks like LangChain or AutoGen for agent orchestration.
  - Integrate vector databases such as Pinecone or Weaviate for efficient data retrieval.

- Implement Memory Management and Multi-Turn Conversation Handling
  - Use conversation memory buffers to manage and retrieve chat history.

Tools and Technologies to Leverage

Leveraging the right tools and technologies is crucial for effective implementation:

- LangChain - A powerful framework for building conversational agents.

- Pinecone - A vector database for fast and scalable data retrieval.

- MCP (Model Context Protocol) - An open protocol that standardizes how agents connect to external tools and data sources.

Timeline and Milestones

A typical implementation timeline might include the following phases:

- Phase 1 (0-2 months) - Initial setup and basic agent identity assignment.

- Phase 2 (3-4 months) - Integration of zero-trust security mechanisms.

- Phase 3 (5-6 months) - Advanced tool integration and agent orchestration.

- Phase 4 (7+ months) - Continuous audits and feedback incorporation.

Implementation Examples

Here are some code snippets and architecture descriptions to assist with implementation:

Memory Management Code Example

from langchain.memory import ConversationBufferMemory

# Buffer that keeps the full chat history as message objects
memory = ConversationBufferMemory(
    memory_key="chat_history",
    return_messages=True,
)

Vector Database Integration Example

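import os
from pinecone import Pinecone, ServerlessSpec

pc = Pinecone(api_key=os.environ["PINECONE_API_KEY"])  # key comes from the environment
# Cloud and region are example values; dimension must match the embedding model.
pc.create_index(name="agent-docs", dimension=128, metric="cosine", spec=ServerlessSpec(cloud="aws", region="us-east-1"))
index = pc.Index("agent-docs")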

Agent Orchestration with LangChain

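from langchain.agents import AgentExecutor

# agent is an agent object and tools a list of tool objects, both built elsewhere;
# plain strings such as "your_agent" or "tool1" are not accepted.
agent_executor = AgentExecutor(agent=agent, tools=tools, memory=memory)

# invoke replaces the deprecated run; the result is a dict with an "output" key.
result = agent_executor.invoke({"input": "Start conversation"})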

MCP Protocol Implementation Snippet

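// Illustrative pseudocode: 'mcp-protocol' is a placeholder module name. The official
// TypeScript SDK is published as @modelcontextprotocol/sdk.
const mcp = require('mcp-protocol');

const server = mcp.createServer((req, res) => {
  if (req.method === 'POST') {
    // Handle request
  }
});

server.listen(8080);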

Architecture Diagram

The architecture involves a central agent management system interfacing with various databases and security protocols. The agent accesses data through micro-segmented channels, ensuring secure and efficient operations.

By following this roadmap, enterprises can establish a robust infrastructure for agent documentation practices, ensuring security, compliance, and operational efficiency.

Change Management in Agent Documentation Practices

Implementing new documentation practices for AI agents requires a strategic approach to change management. This involves careful planning, comprehensive training, and effective strategies to overcome resistance. Below, we outline key strategies and provide technical examples to aid developers in this transition.

Strategies for Organizational Change

Successful change management begins with a clear vision and strategy. Organizations should develop a phased rollout plan for new documentation practices, incorporating feedback from stakeholders at each stage. Using LangChain and vector databases like Pinecone, enterprises can ensure their AI agents are well-documented and compliant with best practices.

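from langchain.agents import AgentExecutor
from langchain_pinecone import PineconeVectorStore

# Vector store for agent documentation. embeddings is built elsewhere and the API key
# is read from the PINECONE_API_KEY environment variable; "agent-docs" is a placeholder.
vector_store = PineconeVectorStore(index_name="agent-docs", embedding=embeddings)

# AgentExecutor has no name, version, or vector_store arguments. Record the name and
# version as run metadata, and expose the store through a retrieval tool in tools.
agent_executor = AgentExecutor(
    agent=agent,
    tools=tools,
    metadata={"agent_name": "documentation-agent", "agent_version": "v1.0"},
)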

Training and Development

Training is crucial for the adoption of new documentation practices. Training programs should focus on technical skills, emphasizing the use of frameworks like LangChain and tools like CrewAI for effective documentation. This not only improves skills but also boosts confidence in handling advanced features such as multi-turn conversation handling.

from langchain.memory import ConversationBufferMemory

memory = ConversationBufferMemory(
    memory_key="chat_history",
    return_messages=True,
)

# Example: multi-turn conversation management.
# agent_executor is assumed to have been created with memory=memory.
def handle_conversation(user_input, agent_executor):
    response = agent_executor.invoke({"input": user_input})
    return response["output"]

Overcoming Resistance

Resistance to change is a common challenge. To address this, organizations can implement robust communication plans that highlight the benefits of the new practices, such as improved security and operational transparency. Additionally, involving team members in tool selection and documentation framework trials can increase buy-in and reduce resistance.

# Illustrative pseudocode: AgentOrchestrator and DocumentationTool are placeholder
# names, not a published LangChain API.
from langchain.agents import AgentOrchestrator
from langchain.documents import DocumentationTool

# Example of tool orchestration
orchestrator = AgentOrchestrator(agent_executor)
documentation_tool = DocumentationTool("tool_name", "description_schema")
orchestrator.add_tool(documentation_tool)
orchestrator.execute_all()

By implementing these strategies, organizations can effectively navigate the complexities of adopting new agent documentation practices, ensuring they remain at the forefront of AI advancements while maintaining compliance and operational efficiency.

ROI Analysis

In the rapidly evolving landscape of agentic AI systems, adopting comprehensive documentation practices presents a compelling return on investment (ROI) for enterprises. This analysis delves into the cost-benefit dynamics, long-term savings, and efficiency improvements, and outlines the value proposition for stakeholders, particularly developers.

Cost-Benefit Analysis

Implementing robust documentation practices may initially appear costly due to the resources required for setup and maintenance. However, these costs are offset by significant benefits. For instance, clear operational transparency and tight version control facilitate faster debugging and iteration cycles, reducing downtime and associated costs. Consider the following Python example using LangChain for memory management, which showcases the importance of well-documented code:

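from langchain.memory import ConversationBufferMemory
from langchain.agents import AgentExecutor

# Store the running chat history under the "chat_history" prompt variable
memory = ConversationBufferMemory(
    memory_key="chat_history",
    return_messages=True,
)

# agent and tools are assumed to be built elsewhere; AgentExecutor requires both
executor = AgentExecutor(agent=agent, tools=tools, memory=memory)

# Comprehensive comments and documentation help in understanding the flow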

This example highlights how a well-documented memory management system enhances agent efficiency and maintainability, ultimately leading to cost savings.

Long-term Savings and Efficiency

Incorporating documentation best practices leads to long-term savings by significantly reducing the time spent on maintenance and updates. For example, integrating vector databases like Weaviate ensures efficient storage and retrieval of conversational data, which is crucial for multi-turn conversation handling:

from weaviate import Client  # weaviate-client v3 API

client = Client("http://localhost:8080")

# Ensure the schema is clearly documented for easy updates and maintenance
data_object = {
    "conversation": "How can I assist you today?",
    "agent_response": "Sure, I can help with that."
}

client.data_object.create(data_object, "ConversationData")

Such integrations, when well-documented, streamline the deployment and scaling process, reducing overhead and increasing efficiency over time.

Value Proposition for Stakeholders

The value proposition for stakeholders lies in the reduced risk and enhanced security that comprehensive documentation provides. By employing zero-trust security models and micro-segmentation, as described in the best practices, stakeholders can ensure data integrity and compliance. A documented Model Context Protocol (MCP) integration also makes it easier to audit how agents reach external systems as threats evolve. The snippet below is illustrative pseudocode; mcp-client, its methods, and the MCP_API_KEY variable are placeholder names:

// Illustrative pseudocode: documenting an MCP client integration
const McpClient = require('mcp-client');

const client = new McpClient({
  protocol: 'https',
  host: 'api.example.com',
  port: 443,
  // Document the security protocols and token lifespans
  credentials: { apiKey: process.env.MCP_API_KEY }  // read from the environment, not hardcoded
});

client.connect()
  .then(() => client.invokeMethod('getAgentData', { id: 'agent123' }))
  .catch(error => console.error('Error connecting to MCP:', error));

Well-documented interfaces and methods not only protect the system but also facilitate easier onboarding of new developers and stakeholders, ensuring continuity and scalability.

In conclusion, the strategic documentation of agent processes, enhanced by best practices and examples like those above, offers substantial ROI. It not only reduces costs and improves efficiency but also fortifies security, thus providing a strong value proposition to all stakeholders involved.

Case Studies

The following section explores several real-world examples of successful agent documentation practices, highlighting lessons learned, best practices, scalability, and adaptability in the implementation of AI agents. These cases provide valuable insights for developers looking to enhance their understanding and application of agent documentation techniques.

1. Enterprise AI Agent System: A Scalability Success Story

One of the leading financial institutions implemented a large-scale AI agent system using LangChain to handle customer interactions. The system effectively managed multi-turn conversations and maintained context across sessions using vector databases like Pinecone for storing conversation history.

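import os
from langchain.agents import AgentExecutor
from langchain.memory import ConversationBufferMemory
from pinecone import Pinecone

memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

# Connect to the index that stores conversation history
index = Pinecone(api_key=os.environ["PINECONE_API_KEY"]).Index("conversation-index")

# agent and tools are built elsewhere. AgentExecutor has no vector_db argument,
# so the index is reached through a retrieval tool included in tools.
executor = AgentExecutor(agent=agent, tools=tools, memory=memory)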

This architecture, described in the accompanying diagram, demonstrated high scalability and adaptability for expanding business needs. By leveraging a distributed vector database, the system dynamically scaled to manage thousands of concurrent interactions without sacrificing response accuracy or speed.

2. A Retail Giant's Transition to Zero-Trust Agent Security

A notable retail company faced challenges in securing its AI agents. The shift to a zero-trust architecture, with the help of protocols like MCP, ensured robust security across their agent deployments. The team implemented strict identity governance and access control, ensuring each agent had a unique, trackable identity.

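# Illustrative pseudocode: langchain.security and MCPProtocol are placeholder names.
from langchain.security import MCPProtocol

mcp = MCPProtocol()
mcp.setup(identity='agent-123', access_policy='least-privilege')

def call_tool(tool_name, data):
    # Check the agent's permission before every tool invocation
    if mcp.verify_access(tool_name):
        # Perform tool-specific operations
        pass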

The architecture diagram illustrates how micro-segmentation was achieved using service accounts, allowing for fine-grained access control and continuous verification of agent actions through short-lived tokens and contextually aware policies.

3. Enhancing AI Agent Efficiency in Tech Support

A tech support company used LangGraph to orchestrate agent workflows, improving efficiency and operational transparency. The system utilized tool calling patterns and schemas to seamlessly integrate various third-party tools, ensuring smooth execution of complex support tasks.

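// Illustrative pseudocode: ToolCaller and LangGraph are placeholder names,
// not exports of the published langgraph package.
import { ToolCaller, LangGraph } from 'langgraph';

const toolCaller = new ToolCaller();
const graph = new LangGraph();

// Each node wraps one third-party tool and returns its result
graph.addNode('diagnosticTool', (data) => {
  return toolCaller.call('diagnosticTool', data);
});

graph.execute({
  initialData: { issue: 'network' },
  onComplete: (result) => console.log('Issue resolved:', result)
});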

The architectural diagram showed how the LangGraph framework enabled modular and flexible agent orchestration, allowing the system to adapt to various support scenarios while maintaining a clear documentation trail for every action and decision made by the agents.

Lessons Learned and Best Practices

These case studies highlight several important lessons and best practices:

- Robust Security: Implement zero-trust principles and micro-segmentation to ensure secure and accountable agent operations.

- Scalability: Use vector databases and scalable frameworks to manage large volumes of data and interactions efficiently.

- Adaptability: Employ flexible architectures that support dynamic changes and integration with diverse tools and systems.

- Clear Documentation: Maintain comprehensive and transparent documentation for all agent interactions and operational procedures.

By adhering to these practices, enterprises can optimize their AI agent implementations for improved performance, security, and compliance in complex environments.

Risk Mitigation in Agent Documentation Practices

Agent documentation in modern enterprises is a critical component in ensuring transparent, secure, and efficient AI operations. This section will explore potential risks associated with agent documentation and provide strategies to mitigate them, emphasizing the role of continuous audits and integrating technical examples.

Identifying Potential Risks

Documentation practices for AI agents can fall victim to several risks, including:

- Security Breaches: Unauthorized access to agent configurations and capabilities.

- Operational Inefficiency: Inadequate documentation leads to poor maintenance and troubleshooting capabilities.

- Compliance Failures: Failure to adhere to regulatory requirements due to incomplete documentation.

Strategies to Mitigate Risks

To mitigate these risks, developers should implement the following strategies:

- Distinct Agent Identity and Governance: Each agent should be uniquely identifiable and governed under strict access controls.

- Zero-Trust Security and Micro-Segmentation: Implement policies that enforce verification before granting access to sensitive data.

- Version Control and Traceability: Use version control systems to track changes and ensure transparency in documentation updates.

- Continuous Audits: Regular audits help in identifying gaps and ensuring documentation aligns with best practices and compliance standards.

Role of Continuous Audits

Continuous audits play a crucial role in the upkeep of effective documentation practices. Regularly scheduled audits identify discrepancies, validate security measures, and ensure regulatory compliance. They are essential for:

- Checking the integrity and accessibility of documentation.

- Ensuring documentation reflects the current state of the agent's deployment and functionality.

- Providing feedback loops to constantly refine and update documentation practices.
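One audit check from the list above, whether documentation still reflects the deployed agent, can be automated. The sketch below is a minimal illustration assuming both the documented and the deployed configuration are available as dictionaries; the function names and example fields are hypothetical.

```python
import hashlib
import json
from typing import List


def config_fingerprint(config: dict) -> str:
    """Hash a configuration so that key order does not affect the result."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode()).hexdigest()


def audit_drift(documented: dict, deployed: dict) -> List[str]:
    """Return the top-level keys whose documented and deployed values differ."""
    keys = sorted(set(documented) | set(deployed))
    return [key for key in keys if documented.get(key) != deployed.get(key)]


documented = {"model": "m1", "tools": ["search"], "version": "v1.0"}
deployed = {"version": "v1.1", "tools": ["search"], "model": "m1"}
drifted = audit_drift(documented, deployed)
```

Comparing fingerprints gives a cheap yes-or-no answer on every deployment, and audit_drift then names the fields that need a documentation update.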

Implementation Examples

Below are some practical code examples and architectural insights for implementing these practices:

Code Snippets

from langchain.memory import ConversationBufferMemory
from langchain.agents import AgentExecutor

memory = ConversationBufferMemory(
    memory_key="chat_history",
    return_messages=True,
)

# agent and tools are assumed to be built elsewhere; AgentExecutor requires both.
executor = AgentExecutor(agent=agent, tools=tools, memory=memory)

Vector Database Integration

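const { Pinecone } = require('@pinecone-database/pinecone');

// The current SDK needs no init() call; the client is ready once constructed.
const client = new Pinecone({ apiKey: process.env.PINECONE_API_KEY });

// Listing indexes is a simple way to confirm the key works.
client.listIndexes().then(() => {
  console.log("Connected to Pinecone");
});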

MCP Protocol Implementation

def implement_mcp_protocol(agent):
    # Define MCP Protocol for agent communication
    mcp_frame = {
        "protocol_version": "1.0",
        "agent_id": agent.id,
        "commands": []
    }
    return mcp_frame
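The dictionary above is a simplified stand-in. The published Model Context Protocol frames its messages as JSON-RPC 2.0, so a tool invocation looks closer to the sketch below. The helper name build_tool_call and the tool name in the usage line are hypothetical.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry a unique id per request


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


message = build_tool_call("fetch_agent_data", {"id": "agent123"})
```

Documenting the exact method names and parameter shapes an agent is allowed to send gives auditors a concrete contract to check traffic against.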

Tool Calling Patterns

interface ToolCall {
  action: string;
  parameters: any;
}

function executeToolCall(toolCall: ToolCall) {
  if (toolCall.action === "fetchData") {
    // Implementation for fetching data
  }
}
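A tool-calling pattern is easier to audit when every call is validated against a documented schema before it runs. The Python sketch below assumes a hypothetical registry, TOOL_SCHEMAS, that maps each tool name to its required parameters and their types; the fetchData parameters shown are invented for illustration.

```python
TOOL_SCHEMAS = {
    # Hypothetical registry: tool name -> required parameter names and types
    "fetchData": {"source": str, "limit": int},
}


def validate_tool_call(action: str, parameters: dict) -> list:
    """Return a list of problems; an empty list means the call is well-formed."""
    schema = TOOL_SCHEMAS.get(action)
    if schema is None:
        return [f"unknown tool: {action}"]
    problems = []
    for name, expected_type in schema.items():
        if name not in parameters:
            problems.append(f"missing parameter: {name}")
        elif not isinstance(parameters[name], expected_type):
            problems.append(f"wrong type for {name}")
    for name in parameters:
        if name not in schema:
            problems.append(f"unexpected parameter: {name}")
    return problems
```

Rejecting unknown tools and unexpected parameters by default keeps the documented schema and the agent's actual behavior from drifting apart.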

By following these strategies and leveraging continuous audits, organizations can significantly reduce the risks associated with agent documentation, ensuring robust security, operational efficiency, and compliance.

Governance of Agent Documentation Practices

In the evolving landscape of AI agent documentation, the role of governance is paramount to ensuring compliance, accountability, and operational transparency. Effective governance frameworks and policies are essential for managing the complexity and risk associated with agentic AI systems. This involves establishing robust security measures, implementing strict version control, and integrating continuous audit and feedback mechanisms.

Governance Frameworks and Policies

A well-defined governance framework is crucial for the seamless documentation of AI agents. This includes assigning unique identities to each agent, utilizing service accounts or certificates rather than generic or human accounts, and enforcing the principle of least privilege. The snippet below sketches an agent with a distinct identity in pseudocode; langchain.security, AgentIdentity, and the identity argument are placeholder names, not a published LangChain API:

# Illustrative pseudocode: placeholder module, class, and argument names.
from langchain.agents import AgentExecutor
from langchain.security import AgentIdentity

agent_identity = AgentIdentity(
    name="customer-support-agent",
    credentials="path/to/credentials.json",
)

agent_executor = AgentExecutor(identity=agent_identity)

Role of Leadership

Leadership plays a pivotal role in fostering a culture of documentation compliance and accountability. Leaders must advocate for the integration of zero-trust security principles, such as continuous verification and contextual access controls. The following pseudocode sketches a micro-segmentation policy; langgraph.security and MicroSegmenter are placeholder names, not a published LangGraph API:

# Illustrative pseudocode: placeholder module and class names.
from langgraph.security import MicroSegmenter

segmenter = MicroSegmenter()

# Restrict the support agent to customer data only
segmenter.add_policy(
    agent_identity="customer-support-agent",
    access_level="restricted",
    data_scope="customer_data",
)

Ensuring Compliance and Accountability

Compliance can be ensured through the establishment of tight version control and audit trails that document agent interactions. Vector databases like Pinecone can be integrated for efficient tracking of versioned data:

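import os
from pinecone import Pinecone

index = Pinecone(api_key=os.environ["PINECONE_API_KEY"]).Index("agent-logs")

# upsert requires a vector for each record; embedding is the log entry's
# embedding, computed elsewhere.
index.upsert(vectors=[{
    "id": "log-123",
    "values": embedding,
    "metadata": {"agent": "customer-support-agent", "version": "v1.0"},
}])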
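An audit trail is more useful for compliance when tampering is detectable. One common technique, sketched here with the standard library only, is to chain entries by hash so that editing or reordering any entry invalidates the ones after it. The field names and helper functions are assumptions for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: list, agent: str, version: str, action: str) -> dict:
    """Append an entry whose hash covers its content and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"agent": agent, "version": version, "action": action, "prev": prev_hash}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

A periodic audit can call verify_chain on the stored log and raise an alert as soon as it returns False.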

Additionally, employing memory management patterns can aid in managing long-term state and history of interactions. Here’s a sample implementation leveraging LangChain’s memory management:

from langchain.memory import ConversationBufferMemory

# Buffer that keeps the full chat history as message objects
memory = ConversationBufferMemory(
    memory_key="chat_history",
    return_messages=True,
)

Conclusion

Implementing comprehensive governance structures facilitates secure, transparent, and compliant documentation practices for AI agents. By leveraging advanced frameworks and adhering to best practices, organizations can manage agentic AI systems with enhanced security and accountability. This ensures that agent documentation not only meets current standards but is also prepared for future challenges.

Metrics and KPIs for Agent Documentation Practices

In the evolving landscape of agent documentation, key performance indicators (KPIs) serve as vital tools for measuring the success and effectiveness of documentation practices. Implementing robust metrics ensures that documentation not only meets current enterprise needs but continues to adapt to emerging challenges and opportunities in agentic AI systems.

Key Performance Indicators for Documentation

Effective documentation practices can be measured using several KPIs. These include:

- Completeness: Ensures all necessary components and processes are documented.

- Clarity: Evaluates how easily developers can understand and implement documentation.

- Version Control: Monitors the frequency and effectiveness of updates to documentation.

- Compliance Adherence: Checks for alignment with industry standards and regulatory requirements.
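A KPI such as completeness becomes trackable once it is defined as a number. The sketch below is one possible definition, assuming each agent's documentation is held as a dictionary and that the checklist in REQUIRED_SECTIONS (a hypothetical list) names the sections an organization requires.

```python
REQUIRED_SECTIONS = ("identity", "permissions", "tools", "memory", "owner")  # assumed checklist


def completeness(doc: dict) -> float:
    """Fraction of required sections that are present and non-empty."""
    filled = sum(1 for section in REQUIRED_SECTIONS if doc.get(section))
    return filled / len(REQUIRED_SECTIONS)


agent_doc = {
    "identity": "customer-support-agent",
    "permissions": ["read:tickets"],
    "tools": ["search"],
    "memory": "conversation buffer",
    "owner": "",  # empty, so it does not count as documented
}
score = completeness(agent_doc)
```

Tracking this score per agent over time shows whether documentation is keeping pace with new deployments.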

Measuring Success and Continuous Improvement

Continuous improvement in documentation can be achieved through structured feedback loops and adaptive strategies. Regular audits and developer feedback sessions help in identifying areas of improvement. Consider integrating feedback mechanisms like surveys or direct input through platforms like GitHub or Jira.

Implementation Example

Below is an example of a Python code snippet illustrating how to manage memory in a LangChain agent, ensuring effective multi-turn conversation handling:

from langchain.memory import ConversationBufferMemory

# Buffer that keeps the full chat history as message objects
memory = ConversationBufferMemory(
    memory_key="chat_history",
    return_messages=True,
)

This code snippet demonstrates how to maintain a coherent conversation history, which is crucial for assessing the quality and completeness of documentation.

Feedback Loops and Adaptive Strategies

Implementing effective feedback loops requires integrating mechanisms that capture user feedback and automatically update documentation based on real-time usage patterns. Using frameworks such as LangGraph or CrewAI can help orchestrate these adaptive strategies.
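
One simple way to start closing the loop is to aggregate feedback per page and flag pages whose average rating falls below a threshold. The sketch below assumes feedback arrives as `(page, rating)` pairs on a 1 to 5 scale; the threshold and minimum vote count are illustrative values, not recommendations, and the function only flags pages for review rather than rewriting them.

```python
from collections import defaultdict


def pages_needing_review(feedback, threshold=3.0, min_votes=2):
    """Return pages whose mean rating is below `threshold`.

    `feedback` is an iterable of (page, rating) pairs. Pages with fewer than
    `min_votes` ratings are skipped so one bad vote does not trigger a review.
    """
    ratings = defaultdict(list)
    for page, rating in feedback:
        ratings[page].append(rating)

    flagged = []
    for page, values in ratings.items():
        if len(values) >= min_votes and sum(values) / len(values) < threshold:
            flagged.append(page)
    return sorted(flagged)


feedback = [
    ("agent-identity", 5), ("agent-identity", 4),
    ("credential-rotation", 2), ("credential-rotation", 3),
    ("tool-schemas", 1),  # only one vote, so it is not flagged yet
]
print(pages_needing_review(feedback))  # ['credential-rotation']
```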

Vector Database Integration

Efficient documentation practices also involve integrating with vector databases like Pinecone for storing and retrieving knowledge. Once documentation is stored as embeddings, agents can retrieve the current revision of a page at query time instead of relying on stale copies:

from pinecone import Pinecone

# Create a client, then open an existing index by name
pc = Pinecone(api_key="YOUR_API_KEY")
index = pc.Index("agent-documentation")

# Add vectorized documentation data; the vector length must match the index dimension
index.upsert(vectors=[{"id": "doc1", "values": [0.1, 0.2, 0.3]}])
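
To show what upserting and querying amount to conceptually, the following sketch implements a tiny in-memory vector index with cosine similarity. It is a conceptual stand-in, not the Pinecone client: `TinyVectorIndex` and its methods are hypothetical, and the three-dimensional vectors are toy values.

```python
import math


class TinyVectorIndex:
    """Hypothetical in-memory index illustrating upsert and nearest-neighbor query."""

    def __init__(self):
        self._vectors = {}  # id -> list of floats

    def upsert(self, vectors):
        """Insert or overwrite records shaped like {'id': ..., 'values': [...]}."""
        for record in vectors:
            self._vectors[record["id"]] = list(record["values"])

    def query(self, vector, top_k=1):
        """Return the ids of the `top_k` stored vectors most similar to `vector`."""
        scored = [(self._cosine(vector, v), doc_id) for doc_id, v in self._vectors.items()]
        scored.sort(reverse=True)
        return [doc_id for _, doc_id in scored[:top_k]]

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0


index = TinyVectorIndex()
index.upsert([
    {"id": "doc1", "values": [0.1, 0.2, 0.3]},
    {"id": "doc2", "values": [0.9, 0.1, 0.0]},
])
print(index.query([0.2, 0.4, 0.6]))  # ['doc1']
```

Because upsert overwrites by id, re-embedding a revised page under the same id replaces the old vector, which is what keeps retrieval aligned with documentation updates.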

Conclusion

By utilizing these metrics and KPIs, organizations can ensure that their agent documentation is not only comprehensive and clear but is continuously improved and aligned with best practices in the field. Implementing these strategies will ultimately lead to enhanced operational transparency and robust security for enterprise-scale deployments.

Vendor Comparison for Agent Documentation Practices

Selecting the right framework can significantly affect how well agent behavior is documented and governed. This section compares four agent frameworks, LangChain, AutoGen, CrewAI, and LangGraph, looking at their pros and cons, integration capabilities, and implementation details.

LangChain

LangChain is a comprehensive framework for building applications with large language models. It offers seamless integration with various vector databases like Pinecone and Weaviate, making it a preferred choice for memory-intensive applications.

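from langchain.memory import ConversationBufferMemory
from langchain.agents import AgentExecutor
from langchain_pinecone import PineconeVectorStore

memory = ConversationBufferMemory(
    memory_key="chat_history",
    return_messages=True
)

# AgentExecutor needs an agent and its tools in addition to memory.
# `agent`, `tools`, and `embeddings` are assumed to be defined elsewhere.
agent_executor = AgentExecutor(agent=agent, tools=tools, memory=memory)

# Wrap an existing Pinecone index as a LangChain vector store
pinecone_db = PineconeVectorStore(index_name="langchain-memory", embedding=embeddings)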

Pros: High integration capabilities, extensive community support, flexible memory management.

Cons: Steeper learning curve for beginners, requires frequent updates to leverage full capabilities.

AutoGen

AutoGen offers robust automation features for documentation tasks, with a focus on agent orchestration and multi-turn conversations. It supports vector database integration through Chroma.

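// Illustrative pseudocode: AutoGen is distributed as a Python package, and
// `MemoryManager` and `memory.connect` are hypothetical names, not a published AutoGen API.
import { MemoryManager } from 'autogen';
import { ChromaClient } from 'chromadb'; // Chroma's JavaScript client is published as `chromadb`

const memory = new MemoryManager({ strategy: 'buffered' });
const chromaClient = new ChromaClient(); // connects to a local Chroma server by default
memory.connect(chromaClient);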

Pros: Powerful orchestration abilities, effective multi-turn conversation handling.

Cons: Limited documentation, requires customization for specific enterprise needs.

CrewAI

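CrewAI excels in environments requiring strict compliance and security protocols. It can connect agents to external tools and data sources through the Model Context Protocol (MCP).

// Illustrative pseudocode: CrewAI is a Python framework, and `MCPManager`
// and its options are hypothetical names, not a published CrewAI API.
const { MCPManager } = require('crewai');

const mcp = new MCPManager();
mcp.initialize({
  policy: 'strict-zero-trust',
  agentID: 'agent-12345'
});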

Pros: Strong security focus, well-suited for enterprise compliance requirements.

Cons: Can be overkill for smaller projects, higher cost of implementation.

LangGraph

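LangGraph models agent workflows as graphs of nodes and edges, which makes the flow of agent interactions explicit and easier to document. It can be paired with Weaviate for vector storage.

# Illustrative pseudocode: `GraphAnalyzer` and `connect` are hypothetical names,
# not part of the published LangGraph API.
from langgraph import GraphAnalyzer
import weaviate

graph_analyzer = GraphAnalyzer()

# Weaviate Python client v4; connects to http://localhost:8080 by default
weaviate_client = weaviate.connect_to_local()
graph_analyzer.connect(weaviate_client)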

Pros: Excellent for complex system analysis, efficient vector storage integration.

Cons: Requires additional setup for optimal performance, less intuitive interface.

Choosing the appropriate tool depends on the specific requirements of the enterprise, particularly regarding security, ease of integration, and the complexity of the documentation tasks. Each tool has distinct advantages that cater to different aspects of agent documentation and operational transparency.

Conclusion

In this exploration of agent documentation practices, we have elucidated the crucial elements that enterprises should consider to maintain robust, secure, and efficient AI systems in 2025. We emphasized the importance of well-defined agent identities and stringent governance structures as foundational aspects. By assigning unique identities and implementing strict access controls, organizations can significantly mitigate risks associated with unauthorized access and data breaches.

Furthermore, the integration of zero-trust security principles ensures that AI agents operate within a secure framework that continuously verifies authenticity and access rights. This approach is complemented by micro-segmentation strategies that reduce the attack surface and limit data exposure.
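
A minimal sketch of the least-privilege idea: each agent identity carries an explicit set of scopes, and every request is checked against that set, with anything unlisted denied by default. The `AgentIdentity` class, the scope strings, and the 90-day rotation window are assumptions for illustration only.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class AgentIdentity:
    """Hypothetical identity record: one per agent, never shared."""
    agent_id: str
    scopes: frozenset        # e.g. frozenset({"tickets:read"})
    credential_issued: date


def is_allowed(agent, scope, today, max_credential_age=timedelta(days=90)):
    """Deny by default: allow only a listed scope on a credential that is still fresh."""
    if today - agent.credential_issued > max_credential_age:
        return False  # stale credential: require rotation before any access
    return scope in agent.scopes


agent = AgentIdentity("agent-12345", frozenset({"tickets:read"}), date(2025, 1, 10))
print(is_allowed(agent, "tickets:read", today=date(2025, 2, 1)))  # True
print(is_allowed(agent, "billing:read", today=date(2025, 2, 1)))  # False
print(is_allowed(agent, "tickets:read", today=date(2025, 6, 1)))  # False
```

Running the check on every request, rather than once at session start, is what distinguishes continuous verification from a perimeter-style login.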

Comprehensive documentation is vital, not only for maintaining operational transparency but also for facilitating audit and compliance checks. It gives developers a clear and accessible knowledge base, so that AI systems are understood and managed efficiently. Below is an example of managing conversation history using the LangChain framework:

from langchain.memory import ConversationBufferMemory

from langchain.agents import AgentExecutor

memory = ConversationBufferMemory(

memory_key="chat_history",

return_messages=True

)

Effective documentation also extends to code management. Consider this example using Pinecone for integrating a vector database to enhance AI agent capabilities:

from pinecone import Pinecone

# Create a client with your API key
pc = Pinecone(api_key="your-api-key")

# Further code to initialize and use the Pinecone index

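For multi-turn conversation handling, the implementation might look like this:

# `agent` and `tools` are assumed to be defined elsewhere; AgentExecutor requires both.
agent_executor = AgentExecutor(agent=agent, tools=tools, memory=memory)

# Because `memory` is attached, the second call can refer back to the first.
agent_executor.invoke({"input": "Which agents can read billing data?"})
agent_executor.invoke({"input": "Which of them had credentials rotated this month?"})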

As a call to action, enterprises must commit to continuous improvement in documentation practices. This involves regular updates, stakeholder feedback integration, and evolving the practices to address new challenges in an ever-changing technological landscape. Prioritizing these aspects will not only streamline operations but also support sustainable innovation, ensuring that AI deployments are both resilient and adaptive.

Appendices

This section provides additional resources to complement the main article on agent documentation practices. It includes code snippets, architecture diagrams, and implementation examples to enhance understanding and facilitate practical application.

Glossary of Terms

- AI Agent: An autonomous program that performs tasks on behalf of a user.

- MCP (Model Context Protocol): An open protocol that standardizes how AI applications connect to external tools and data sources.

- Tool Calling: The process of invoking external tools or services from within an agent.

Code Examples and Framework Usage

Here we provide several code snippets demonstrating best practices for agent documentation:

from langchain.agents import AgentExecutor
from langchain.memory import ConversationBufferMemory

memory = ConversationBufferMemory(
    memory_key="chat_history",
    return_messages=True
)

# `your_agent` and `tools` are placeholders for an agent and the list of
# tools it may call; AgentExecutor requires both.
agent_executor = AgentExecutor(
    agent=your_agent,
    tools=tools,
    memory=memory
)

Vector Database Integration

from pinecone import Pinecone

pinecone_client = Pinecone(api_key="your-api-key")
index = pinecone_client.Index("example-index")

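MCP Implementation Snippet

// Illustrative pseudocode: the `crewai-protocol` package and `MCPManager`
// are hypothetical names used only to show the shape of an initialization step.
import { MCPManager } from 'crewai-protocol';

const mcpManager = new MCPManager();
mcpManager.initializeProtocol();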

References and Resources

For a deeper dive into best practices in agent documentation, refer to the following resources:

- Comprehensive Guide to Agent Documentation Practices [3]

- Enterprise Security Protocols for AI Systems [7]

- Feedback Mechanisms in Multi-Agent Systems [5]

Implementation Examples

Below is an example of tool calling patterns with schemas:

// `agent` is assumed to be an object exposing a callTool(schema, callback) method.
const toolSchema = {
  toolName: "exampleTool",
  params: {
    input: "string",
    config: "object"
  }
};

agent.callTool(toolSchema, (response) => {
  console.log("Tool Response:", response);
});
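
In Python, the same pattern can be sketched as a schema check that runs before the tool is invoked. The schema layout mirrors the example above; `validate_args` and `call_tool` are hypothetical helpers, and the mapping from type names to Python types is an assumption of this sketch.

```python
# Map the schema's type names to Python types (an assumption for this sketch).
TYPE_MAP = {"string": str, "object": dict, "number": (int, float), "boolean": bool}

tool_schema = {
    "toolName": "exampleTool",
    "params": {"input": "string", "config": "object"},
}


def validate_args(schema, args):
    """Return a list of problems; an empty list means the call is well-formed."""
    problems = []
    for name, type_name in schema["params"].items():
        if name not in args:
            problems.append(f"missing parameter: {name}")
        elif not isinstance(args[name], TYPE_MAP[type_name]):
            problems.append(f"{name} should be {type_name}")
    for name in args:
        if name not in schema["params"]:
            problems.append(f"unexpected parameter: {name}")
    return problems


def call_tool(schema, tool_fn, args):
    """Validate first, then invoke; refuse the call if validation fails."""
    problems = validate_args(schema, args)
    if problems:
        raise ValueError("; ".join(problems))
    return tool_fn(**args)


def example_tool(input, config):
    """Toy tool: echoes its input, upper-cased when config asks for it."""
    return input.upper() if config.get("upper") else input


print(call_tool(tool_schema, example_tool, {"input": "hello", "config": {"upper": True}}))  # HELLO
print(validate_args(tool_schema, {"input": 42}))
# ['input should be string', 'missing parameter: config']
```

Rejecting malformed calls before execution also yields a natural audit point: every refused call can be logged with the reason it failed.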

Memory Management and Multi-Turn Conversation Handling

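# `agent_executor` is the AgentExecutor built above with `memory` attached,
# so each call sees the history of earlier turns.
agent_executor.invoke({"input": "Which agents can read billing data?"})
agent_executor.invoke({"input": "Who owns the first one?"})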

Agent Orchestration Patterns

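# Illustrative pseudocode: `AgentOrchestrator` is a hypothetical name, not part of the
# published LangGraph API, which builds workflows from a StateGraph of nodes and edges.
from langgraph import AgentOrchestrator

orchestrator = AgentOrchestrator()
orchestrator.orchestrate_agents()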
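
Independent of any framework, a sequential orchestration pattern can be sketched as follows: each agent is a function that receives the shared state and returns an updated copy, and the orchestrator records an audit entry for every step. All names here are hypothetical and the agents are toy functions.

```python
def run_pipeline(agents, state):
    """Run agents in order, returning the final state and an audit trail.

    `agents` is a list of (agent_id, callable) pairs; each callable takes the
    current state dict and returns a new dict.
    """
    audit = []
    for agent_id, step in agents:
        before = set(state)
        state = step(dict(state))  # pass a copy so a step cannot alter earlier state
        audit.append({"agent": agent_id, "added_keys": sorted(set(state) - before)})
    return state, audit


def classify(state):
    """Toy agent: label the request based on a keyword."""
    state["category"] = "access-request" if "access" in state["text"] else "other"
    return state


def route(state):
    """Toy agent: pick a queue from the category set by the previous step."""
    state["queue"] = "security" if state["category"] == "access-request" else "general"
    return state


final, trail = run_pipeline(
    [("agent-classifier", classify), ("agent-router", route)],
    {"text": "Need access to billing reports"},
)
print(final["queue"])  # security
print(trail)
# [{'agent': 'agent-classifier', 'added_keys': ['category']}, {'agent': 'agent-router', 'added_keys': ['queue']}]
```

Recording which agent added which keys gives a per-step trail that can be attached to the documentation for each workflow.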

These resources provide crucial insights and practical code implementations that support the development and documentation of robust AI agents in enterprise environments.

Frequently Asked Questions about Agent Documentation Practices

What are the best practices for agent documentation?

Best practices include maintaining a distinct identity for each agent, applying zero-trust security, and implementing robust governance protocols. Each agent should have a unique identity, and access should be controlled through least-privilege policies.

How can I integrate vector databases with AI agents?

Integrating vector databases like Pinecone or Weaviate enables efficient storage and retrieval of embeddings. An example in Python with LangChain and Pinecone:

from pinecone import Pinecone
from langchain_pinecone import PineconeVectorStore

pc = Pinecone(api_key="YOUR_API_KEY")
index = pc.Index("agent-documentation")

# `embeddings` is assumed to be a LangChain embeddings object configured elsewhere
vector_store = PineconeVectorStore(index=index, embedding=embeddings, namespace="agent_memory")
retriever = vector_store.as_retriever()

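What is the Model Context Protocol (MCP), and how do I implement it?

The Model Context Protocol (MCP) is an open protocol that standardizes how AI applications connect to external tools and data sources. A minimal server sketch using the official MCP Python SDK, exposing one illustrative tool:

from mcp.server.fastmcp import FastMCP

mcp = FastMCP("agent-context")  # server name is illustrative

@mcp.tool()
def get_session_data(session_id: str) -> dict:
    return {"session_id": session_id, "user_profile": {}}  # placeholder payload

mcp.run()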

How do I manage memory in multi-turn conversations?

Using LangChain's memory management features allows for effective conversation handling:

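from langchain.memory import ConversationBufferMemory
from langchain.agents import AgentExecutor

memory = ConversationBufferMemory(
    memory_key="chat_history",
    return_messages=True
)

# `agent` and `tools` are assumed to be defined elsewhere; AgentExecutor requires both.
agent_executor = AgentExecutor(agent=agent, tools=tools, memory=memory)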

What are some patterns for agent orchestration?

Effective orchestration involves leveraging frameworks like CrewAI or AutoGen for coordinating multiple agent tasks while ensuring security and auditability. Use service accounts and enforce IP-aware policies.

Where can I find additional support?

For more resources, check out the official documentation of LangChain, CrewAI, and Pinecone. Join community forums or repositories on GitHub for peer support and collaboration.

Example Architecture Diagram

Description: The diagram depicts a system with several AI agents connected to a vector database and an orchestration layer that enforces security and compliance, with agents reaching external tools and data through MCP.