Executive Summary and Objectives

Authoritative, audit-ready executive summary on Anthropic Claude, constitutional AI, AI regulation, and compliance deadlines. Thesis: constitutional AI design choices reshape compliance responsibilities and enforcement exposure. Audience: legal, risk, engineering, product, and C‑suite.

Global AI regulation is shifting from principles to enforcement. The EU Artificial Intelligence Act (AI Act) entered into force in 2024 with staggered obligations for prohibited, general-purpose, and high-risk systems (EUR-Lex: AI Act, Art. 5, Arts. 8–15, Annex III, penalties Art. 99, https://eur-lex.europa.eu). NIST’s AI Risk Management Framework (AI RMF 1.0) operationalizes governance and assurance across Govern, Map, Measure, Manage functions (NIST AI 100-1, Jan 2023, https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf). Local and state rules (e.g., NYC Local Law 144 on automated employment decision tools) are already being enforced (NYC DCWP, 6 RCNY 5-300, https://rules.cityofnewyork.us/rule/automated-employment-decision-tools/).

Primary thesis: constitutional AI—exemplified by Anthropic Claude’s principle-grounded training, self-critique, and reinforcement learning from AI feedback (RLAIF)—shifts compliance from post-hoc content filtering to ex ante design controls, documented principles, and auditable evaluation artifacts, thereby changing who is accountable and when (Anthropic, Constitutional AI overview, https://www.anthropic.com/news/constitutional-ai; Anthropic, Constitutional AI: Harmlessness from AI Feedback, arXiv:2212.08073, https://arxiv.org/abs/2212.08073; Anthropic Claude 3 Model Card, Mar 2024, https://www.anthropic.com/research/claude-3-model-card).

This report defines scope around Claude-enabled systems, maps obligations under the EU AI Act and NIST AI RMF, and identifies immediate actions to meet compliance deadlines over the next 12–36 months. It delivers role-specific outputs for compliance officers, legal teams, AI product managers, and C‑suite executives, with milestones, technical gap analysis, and automation opportunities grounded in primary sources (EU AI Act, NIST AI RMF, NYC DCWP rule, Colorado SB24-205, https://leg.colorado.gov/bills/sb24-205).

Objectives and expected outcomes:

- 1) Classify Claude-based use cases by EU AI Act risk category and local triggers (Annex III; Art. 5) with a timeline to compliance by system and market (https://eur-lex.europa.eu).

- 2) Produce a crosswalk of constitutional AI design controls to EU AI Act Articles 8–15 and NIST AI RMF functions, highlighting technical gaps (logging, data governance, human oversight, robustness) and required evidence (https://eur-lex.europa.eu; https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf).

- 3) Build an audit-ready dossier template: constitution version history, RLAIF procedures, safety evals, red-team results, model cards, and data governance artifacts (Anthropic docs; NIST AI RMF, https://www.anthropic.com/news/constitutional-ai; https://www.anthropic.com/research/claude-3-model-card; https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf).

- 4) Define automation opportunities: policy-as-code checks against constitutional principles, automated risk logging, continuous red-teaming, and deployment gates mapped to Article 9 risk management (https://eur-lex.europa.eu).

- 5) Establish compliance KPIs and success criteria (e.g., percentage of high-risk controls with verified evidence, bias metrics within thresholds, audit turnaround time) aligned to NIST measurement guidance (https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf).

- 6) Deliver a 12–36 month roadmap with resource/RACI for legal, risk, engineering, and product, including vendor/Notified Body readiness for EU conformity assessment (EU AI Act, Chapter III, https://eur-lex.europa.eu).
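Objective 5's headline KPI, the percentage of high-risk controls with verified evidence, can be computed mechanically once each control is tracked as a record. The following is a minimal Python sketch, assuming a hypothetical `Control` record that carries an article reference and a list of evidence items marked verified or not; the control IDs and article assignments are illustrative, not a prescribed taxonomy.

```python
from dataclasses import dataclass, field


@dataclass
class Evidence:
    name: str
    verified: bool  # True once a reviewer or auditor has signed off


@dataclass
class Control:
    control_id: str          # hypothetical internal ID
    article: str             # e.g. "Art. 9" (illustrative mapping)
    evidence: list[Evidence] = field(default_factory=list)

    def is_verified(self) -> bool:
        # A control counts only if it has evidence and every item is verified.
        return bool(self.evidence) and all(e.verified for e in self.evidence)


def evidence_coverage(controls: list[Control]) -> float:
    """Percentage of controls whose evidence is complete and verified."""
    if not controls:
        return 0.0
    verified = sum(1 for c in controls if c.is_verified())
    return 100.0 * verified / len(controls)


def gaps_by_article(controls: list[Control]) -> dict[str, list[str]]:
    """Group unverified control IDs by the article they support."""
    gaps: dict[str, list[str]] = {}
    for c in controls:
        if not c.is_verified():
            gaps.setdefault(c.article, []).append(c.control_id)
    return gaps


controls = [
    Control("RM-01", "Art. 9", [Evidence("risk register", True)]),
    Control("DG-01", "Art. 10", [Evidence("data lineage", True), Evidence("bias audit", False)]),
    Control("HO-01", "Art. 14", []),
    Control("RB-01", "Art. 15", [Evidence("robustness eval", True)]),
]
print(f"{evidence_coverage(controls):.1f}%")  # 50.0%
print(gaps_by_article(controls))  # {'Art. 10': ['DG-01'], 'Art. 14': ['HO-01']}
```

Treating a control with no evidence as unverified keeps the KPI conservative; the gap report doubles as the remediation list for the evidence dossier.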

Top regulatory actions and compliance deadlines to track

| Jurisdiction | Regulatory instrument | Affected capability | Deadline (status) | Likely penalty range |
|---|---|---|---|---|
| EU | EU Artificial Intelligence Act (Art. 5 prohibitions; Art. 99 penalties) (EUR-Lex, 2024, https://eur-lex.europa.eu) | Prohibited practices (as defined in Art. 5) | Feb 2025 (enforced) | Up to €35m or 7% of global annual turnover, whichever is higher (Art. 99) |
| EU | EU AI Act – General-purpose AI (Chapter V, GPAI obligations) (EUR-Lex, https://eur-lex.europa.eu) | GPAI providers (e.g., transparency, technical documentation, copyright policy) | Aug 2025 (adopted) | Up to €15m or 3% of global annual turnover (Art. 101) |
| EU | EU AI Act – High-risk systems (Arts. 8–15; Annex III) (EUR-Lex, https://eur-lex.europa.eu) | Risk management, data governance, human oversight, CE marking | Aug 2026 (adopted) | Up to €15m or 3% of global annual turnover (Art. 99) |
| United States (NYC) | NYC Local Law 144 and rules (6 RCNY 5-300) (NYC DCWP, https://rules.cityofnewyork.us/rule/automated-employment-decision-tools/) | Automated employment decision tools (AEDT) | Bias audit no more than one year before use; enforced since Jul 2023 | $500–$1,500 per violation; each day of non-compliant use is a separate violation (LL 144) |
| United States (Colorado) | SB24-205 Consumer Protections for Artificial Intelligence (Colorado General Assembly, https://leg.colorado.gov/bills/sb24-205) | High-risk AI for consequential decisions (developer/deployer duties) | Feb 1, 2026 (adopted) | Civil penalties under Colorado Consumer Protection Act (per-violation) |
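The NYC row turns on bias audits, which report a selection rate and an impact ratio per demographic category; the DCWP rules define the impact ratio as a category's selection rate divided by the selection rate of the most selected category. The following is a minimal Python sketch with made-up counts. It covers one dimension (sex) only, whereas the rules also call for race/ethnicity and intersectional categories. The 0.8 flag threshold is an assumed internal policy borrowed from the EEOC four-fifths rule of thumb; LL 144 itself sets no pass/fail threshold.

```python
def selection_rates(selected: dict[str, int], applicants: dict[str, int]) -> dict[str, float]:
    """Selection rate per category: share of that category's applicants who were selected."""
    return {
        cat: selected.get(cat, 0) / n
        for cat, n in applicants.items()
        if n > 0  # skip empty categories instead of dividing by zero
    }


def impact_ratios(selected: dict[str, int], applicants: dict[str, int]) -> dict[str, float]:
    """Impact ratio: each category's selection rate over the highest selection rate."""
    rates = selection_rates(selected, applicants)
    top = max(rates.values(), default=0.0)
    if top == 0:
        raise ValueError("no selections recorded; impact ratios are undefined")
    return {cat: rate / top for cat, rate in rates.items()}


# Illustrative counts only, not real audit data.
applicants = {"female": 200, "male": 250}
selected = {"female": 40, "male": 75}

ratios = impact_ratios(selected, applicants)  # female: 0.20 / 0.30, male: 1.0
ASSUMED_FLAG_THRESHOLD = 0.8  # internal policy assumption, not an LL 144 requirement
flagged = [cat for cat, r in ratios.items() if r < ASSUMED_FLAG_THRESHOLD]
print({cat: round(r, 3) for cat, r in ratios.items()}, flagged)
```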

Critical milestones next 12–36 months: EU AI Act prohibitions (Feb 2025), GPAI obligations (Aug 2025), high-risk system conformity (Aug 2026). Stakeholders who must act now: compliance, legal, AI product owners, and engineering leaders (EUR-Lex; NIST AI RMF).

How to use this report

- Legal and compliance: focus on the obligations map, the evidence dossier template and artifact checklist, and the deadlines table (EU AI Act Articles; NYC LL 144; Colorado SB24-205; https://eur-lex.europa.eu; https://rules.cityofnewyork.us/rule/automated-employment-decision-tools/).

- Engineering and product: use the constitutional AI-to-control crosswalk and automation opportunities to implement policy-as-code gates, logging, and red-teaming aligned to NIST AI RMF (Anthropic docs; https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf).

- Risk and audit: adopt the KPIs and evaluation plans to sustain continuous assurance; track bias metrics and post-market monitoring for high-risk AI (EU AI Act Arts. 72–73; https://eur-lex.europa.eu).

- Board/C‑suite: review the roadmap and penalty exposure to set resourcing and risk appetite; approve resources for conformity assessment and third-party audits; set timelines and risk thresholds.

At-a-glance: AI regulation compliance deadlines

| Jurisdiction | Regulatory instrument | Affected capability | Deadline (status) | Likely penalty range |
|---|---|---|---|---|
| EU | EU AI Act (Art. 5; Art. 99) (EUR-Lex, https://eur-lex.europa.eu) | Prohibited practices | Feb 2025 (enforced) | Up to 7% global turnover or €35m |
| EU | EU AI Act – GPAI (Chapter V) (EUR-Lex, https://eur-lex.europa.eu) | GPAI model providers (incl. Anthropic for Claude) | Aug 2025 (adopted) | Up to 3% global turnover or €15m (Art. 101) |
| EU | EU AI Act – High-risk (Arts. 8–15; Annex III) (EUR-Lex, https://eur-lex.europa.eu) | High-risk AI systems | Aug 2026 (adopted) | Up to 3% global turnover or €15m |
| United States (NYC) | NYC Local Law 144 (6 RCNY 5-300) (https://rules.cityofnewyork.us/rule/automated-employment-decision-tools/) | Automated hiring tools | Annual, pre-use (enforced) | $500–$1,500 per day/violation |
| United States (Colorado) | SB24-205 (https://leg.colorado.gov/bills/sb24-205) | High-risk consequential decisions | Feb 1, 2026 (adopted) | Civil penalties (AG enforcement) |

Methodology and evidence standards

Sources: primary legal texts and official portals (EUR-Lex for the EU AI Act; NYC DCWP rules for Local Law 144; Colorado General Assembly for SB24-205), Anthropic technical documentation (Constitutional AI overview; Claude 3 Model Card), and NIST AI RMF 1.0. Search date: Nov 9, 2025. Quantitative estimates: penalty ranges reflect statutory caps (EU AI Act Arts. 99 and 101); dates follow the AI Act's entry into force (1 Aug 2024) and its staged application dates (Art. 113); local timelines and fines are taken directly from regulator rules. Claims are linked to primary sources wherever one exists, to support auditability.
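The staged AI Act dates can be tracked mechanically against any reference date. The following is a minimal Python sketch, assuming the application dates in Art. 113 as listed in the comments and using the Nov 9, 2025 search date as the reference point; the milestone keys are illustrative labels, not terms from the Regulation.

```python
from datetime import date

# Application dates under Art. 113 of Regulation (EU) 2024/1689
# (entered into force 1 Aug 2024). Keys are illustrative labels.
AI_ACT_MILESTONES = {
    "prohibitions": date(2025, 2, 2),         # Chapters I and II, incl. Art. 5
    "gpai": date(2025, 8, 2),                 # Chapter V GPAI duties, governance, penalties (except Art. 101)
    "high_risk_annex_iii": date(2026, 8, 2),  # general date of application
    "high_risk_annex_i": date(2027, 8, 2),    # Art. 6(1) product-embedded systems
}


def days_remaining(as_of: date) -> dict[str, int]:
    """Days from `as_of` to each milestone; zero or negative means already applicable."""
    return {name: (d - as_of).days for name, d in AI_ACT_MILESTONES.items()}


for name, days in days_remaining(date(2025, 11, 9)).items():
    status = "applies" if days <= 0 else f"{days} days away"
    print(f"{name}: {status}")
```

Run on a schedule, the same function can drive reminders in the roadmap's RACI tracker.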

Recommended next steps (prioritized)

- P1 (high impact, moderate effort): Establish a Claude-specific constitution governance program with versioning, change control, and legal sign-off; map each principle to EU AI Act Articles 8–15 controls and NIST RMF functions (https://www.anthropic.com/news/constitutional-ai; https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf; https://eur-lex.europa.eu).

- P1 (high impact, low effort): Build an audit-ready evidence dossier template (model card, RLAIF procedures, safety evals, logs) and populate it for each Claude use case targeting EU and NYC deployments (https://www.anthropic.com/research/claude-3-model-card; https://rules.cityofnewyork.us/rule/automated-employment-decision-tools/).

- P2 (high impact, higher effort): Implement policy-as-code deployment gates: Article 9 risk assessment completed; bias metrics within thresholds; human oversight configured; automated red-team and trace logging enabled (https://eur-lex.europa.eu; https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf).

- P2 (moderate impact, moderate effort): Run a portfolio-wide risk classification to identify systems likely to be high-risk under Annex III and create a remediation plan and budget through Aug 2026 (https://eur-lex.europa.eu).

- P3 (targeted impact, low effort): Prepare board and executive briefings on enforcement exposure and resource needs tied to EU AI Act timelines and local rules (EUR-Lex; NYC DCWP).
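The P2 deployment gate can be expressed as policy-as-code that returns blocking findings. The following is a minimal Python sketch, assuming a hypothetical `ReleaseCandidate` record populated by the CI pipeline; the field names and the 0.8 impact-ratio floor are illustrative assumptions, not thresholds set by the EU AI Act or NIST AI RMF.

```python
from dataclasses import dataclass


@dataclass
class ReleaseCandidate:
    # Hypothetical fields; map them to whatever the pipeline actually records.
    art9_risk_assessment_done: bool
    min_impact_ratio: float          # worst-case bias metric across categories
    human_oversight_configured: bool
    red_team_passed: bool
    trace_logging_enabled: bool


def gate(rc: ReleaseCandidate, impact_ratio_floor: float = 0.8) -> list[str]:
    """Return blocking findings; an empty list means the release may proceed."""
    checks = [
        (rc.art9_risk_assessment_done, "Art. 9 risk assessment not completed"),
        (rc.min_impact_ratio >= impact_ratio_floor,
         f"bias metric below threshold ({rc.min_impact_ratio:.2f} < {impact_ratio_floor})"),
        (rc.human_oversight_configured, "human oversight (Art. 14) not configured"),
        (rc.red_team_passed, "automated red-team suite failed"),
        (rc.trace_logging_enabled, "trace logging (Art. 12) disabled"),
    ]
    return [message for passed, message in checks if not passed]


findings = gate(ReleaseCandidate(True, 0.72, True, True, False))
for finding in findings:
    print("BLOCK:", finding)
```

In a CI job, a non-empty `findings` list would fail the build, and the printed findings would be archived with the release notes as evidence that the gate ran.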

Global AI Regulatory Landscape: Snapshot and Trends

A data-rich snapshot of the global AI regulation landscape highlighting the EU AI Act’s phased enforcement, diverse US sectoral enforcement, and principle-led regimes in the UK and Singapore. Quantified trend adoption, compliance deadlines, and penalty scales are compared to surface implications for Anthropic’s Constitutional AI and customer risk planning.

Global map summary: The AI regulation landscape is fragmenting into three blocs—hard-law, risk-based regimes (EU, China); principle-led, regulator-coordinated approaches (UK, Singapore, Australia); and sectoral enforcement anchored in existing statutes (US, Canada pending). Cross-border effects are pronounced due to extraterritoriality, procurement conditions, and supply-chain attestations.

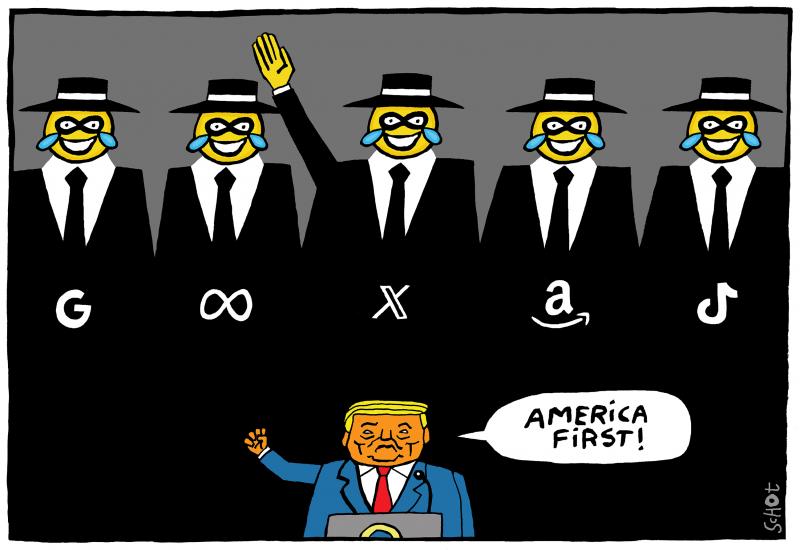

News context image:

The image reflects the political momentum driving compliance deadlines and enforcement posture in Europe, a key vector for global AI rules and customer expectations.

Global AI rules: jurisdiction-by-deadline and scope (compliance deadlines)

| Jurisdiction/Instrument | Legal status (Oct 2024) | Timeline to adoption | Enforcement start / key milestones | Max fines | Applicability thresholds (high-risk) | Conformity assessment | Citations |
|---|---|---|---|---|---|---|---|
| EU AI Act (Reg (EU) 2024/1689) | Enacted; entered into force Aug 2024 | 2018–2024 (proposal Apr 2021; adoption 2024) | Prohibited uses: Feb 2025; GPAI: Aug 2025; High-risk: phased 2026–2027 | Up to €35m or 7% global turnover; €15m or 3% for other breaches | Annex III high-risk uses; systemic-risk GPAI presumed above 10^25 training FLOPs (Art. 51) | QMS, technical documentation, CE marking; notified body for certain high-risk | EUR-Lex 2024/1689; EU Council/Parliament 2024 factsheets |
| US federal (EO 14110; OMB M-24-10; NIST AI RMF; FTC Act) | Guidance/EO; enforcement via existing laws | 2023–2024 | EO 14110 timelines through 2024; OMB agency implementation 2024–2025; FTC enforcement ongoing | No AI-specific cap; penalties under FTC/CFPB/sectoral statutes | Federal procurement and mission AI; sectoral thresholds (FDA, banking, employment) | RMF-based risk controls; red-teaming; sectoral conformity (e.g., FDA premarket) | EO 14110 (Oct 2023); OMB M-24-10 (Mar 2024); NIST AI RMF 1.0 (Jan 2023); FTC AI guidance 2023 |
| US state: Colorado AI Act (SB 205, 2024) | Enacted | 2024 | Effective Feb 1, 2026 (duties for developers/deployers of high-risk AI) | AG enforcement under Colorado Consumer Protection Act | High-risk automated decision systems with significant risk of discrimination | Risk management program; impact assessment; notices; opt-out/appeal mechanisms | Colorado SB 205 (2024); CO AG policy updates 2024 |
| UK: Pro-innovation AI regulation (DSIT White Paper 2023; 2024 Response) | Principles-based; non-statutory; sector-led | 2023–2024 | Ongoing via ICO, CMA, FCA, MHRA; DRCF sandboxes 2024–2025 | Via existing laws (UK GDPR: up to £17.5m or 4% global turnover) | Risk-based application by sector regulators; no single high-risk list | Guidance, sandboxes; audits under sector regimes | DSIT 2023 White Paper; DSIT 2024 Response; ICO AI Guidance 2023; CMA foundation models updates 2023–2024 |
| Canada: AIDA (Bill C-27, Part 3) | Proposed; in committee (2024) | 2022–2024 | TBD post-royal assent and regulations (earliest 2025–2026) | Proposed: up to CAD 10m or 3% admin; up to CAD 25m or 5% criminal | High-impact systems (to be defined by regulation) | Risk management program; records; incident reporting; possible third-party audits | Bill C-27 (AIDA) text; ISED backgrounders 2023–2024 |
| Australia: Safe and Responsible AI (Interim Response 2024) | Policy development; no omnibus AI law | 2023–2024 | TBD; near-term guardrails for high-risk use cases under consideration | Privacy Act penalties (amended 2022): greater of AUD 50m, 3x benefit, or 30% turnover | Targeted mandatory safeguards for high-risk (consultation stage) | Co-regulatory codes; standards alignment (NIST/ISO) | DISR Interim Response (Jan 2024); AGD Privacy Act Review (2023) |
| Singapore: Model AI Governance (GenAI, 2024) + PDPA | Voluntary AI frameworks; binding PDPA | 2019–2024 | PDPA enforcement ongoing; AI Verify program available | PDPA: up to 10% SG turnover (or up to SGD 1m) | No fixed high-risk list; risk-based governance encouraged | AI Verify testing/assurance; PDPC accountability and DPIAs | PDPC decisions 2020–2024; AI Verify (2022–2024); GenAI discussion (2024) |
| China: Generative AI Interim Measures (2023); Algorithmic Recommendation Provisions (2022); PIPL (2021) | Enacted | 2021–2023 | Algorithm filing 2022–; GenAI measures effective Aug 15, 2023 | PIPL: up to 50m yuan or 5% of turnover; other measures add sanctions | Public-facing GenAI and influential algorithms require filing and assessments | Algorithm record-filing; security assessments; content labeling/watermarking | CAC 2022, 2023; PIPL 2021; State Council/MIIT notices 2022–2023 |

Trend adoption heatmap across regions (AI regulation landscape)

| Trend | Enacted (count of 8 regions) | Proposed (count) | Voluntary/Guidance (count) | Regions with enacted | Notes & citations |
|---|---|---|---|---|---|
| Mandatory AI risk assessments/impact assessments | 3 | 2 | 3 | EU, China, US-CO | EU AI Act Arts. 9–10; China GenAI Arts. 7–10; Colorado SB205 Sec. 6; Canada AIDA proposed; UK/Singapore voluntary |
| Explainability/transparency to users | 3 | 2 | 3 | EU, China, NYC AEDT | EU AI Act transparency incl. deepfake labels (Art. 50); China labeling; NYC Local Law 144 notices; Canada/Australia proposals; UK/Singapore guidance |
| Human oversight mandates | 2 | 1 | 5 | EU, China | EU AI Act Art. 14; China GenAI requires effective human oversight; Canada AIDA proposed; UK/US/Singapore/Australia guidance |
| Provenance/data lineage and content authenticity | 2 | 2 | 4 | EU, China | EU AI Act GPAI documentation and deepfake labeling; China watermarking; Canada/Australia proposals; UK/Singapore/US guidance standards (e.g., C2PA) |
| Pre-deployment conformity/third-party assessment | 2 | 2 | 4 | EU, China | EU CE conformity assessment; China algorithm filing/security assessment; Canada/Australia proposals; UK/Singapore/US use sandboxes, RMF |
| Incident reporting and post-market monitoring | 3 | 1 | 4 | EU, China, FDA (US sectoral) | EU AI Act serious incident reporting (Art. 73); China safety incident duties; FDA postmarket for SaMD; Canada AIDA proposed |

Sample penalty scale comparison (global AI rules)

| Jurisdiction | Max administrative fine | Criminal penalties | Applies to | Citation |
|---|---|---|---|---|
| EU AI Act | Up to €35m or 7% global turnover (prohibited uses); €15m or 3% other breaches | No AI Act-specific criminal provisions | Providers, deployers, importers, distributors, notified bodies | Reg (EU) 2024/1689, Chapter XII (Arts. 99–101) |
| China (PIPL; GenAI/Algorithm measures) | Up to 50m yuan or 5% prior-year turnover; additional rectification orders | Possible criminal liability under PRC law for severe violations | Personal data handlers; public GenAI and recommendation services | PIPL 2021 Arts. 66–67; CAC 2022/2023 |
| UK (UK GDPR under DPA 2018) | Up to £17.5m or 4% global turnover | Criminal offenses for certain data crimes (e.g., unlawful obtaining) | Controllers/processors; AI uses involving personal data | ICO; DPA 2018; UK GDPR Art. 83 |
| US (FTC Act, sectoral) | Case-dependent (injunctions, disgorgement, civil penalties via specific statutes) | Potential criminal via DOJ for related offenses | Unfair/deceptive AI claims, data misuse, discrimination (sectoral) | FTC AI guidance and cases 2022–2024 (e.g., Rite Aid, Amazon Ring, BetterHelp) |
| Canada (AIDA, proposed) | Up to CAD 10m or 3% (administrative) as proposed | Up to CAD 25m or 5% for egregious offenses (proposed) | High-impact AI providers/deployers | Bill C-27 (AIDA), 2022–2024 |
| Singapore (PDPA) | Up to 10% of annual SG turnover (or up to SGD 1m) | Criminal penalties for certain PDPA offenses | Organizations handling personal data in AI systems | PDPC; PDPA amendments 2020–2022 |
| NYC Local Law 144 (AEDT) | $500–$1,500 per violation (per day/instance) | No criminal penalties | Employers using AEDT in hiring/promotions | NYC Rules, effective July 2023 |

Comparative instruments with citations (EU AI Act, UK, US sectoral, OECD/UN) — AI regulation landscape

| Instrument | Scope and status | Key requirements (Art./Ref.) | Enforcement model | Relevance for foundation models | Citations |
|---|---|---|---|---|---|
| EU AI Act | Horizontal regulation; enacted 2024; phased application | Risk management (Art. 9), data governance (Art. 10), human oversight (Art. 14), transparency (Art. 50), GPAI duties incl. systemic-risk GPAI (Arts. 53–55) | Market surveillance authorities; AI Office for GPAI; penalties up to 7% turnover | Direct obligations on GPAI, systemic risk tier, documentation and risk mitigation | EUR-Lex Reg (EU) 2024/1689; EC factsheets 2024 |
| UK AI White Paper (2023) + 2024 Response | Non-statutory principles; regulator-led implementation | Safety, security, transparency, fairness, accountability, contestability | ICO, CMA, FCA, MHRA, DRCF sandboxes; guidance-led | Focus on assurance, evaluations, and sector guidance for frontier models | DSIT 2023; DSIT 2024 Response; AISI publications 2023–2024 |
| US FTC, FDA, SEC (sectoral) | Existing laws applied to AI; guidance and enforcement | Truthfulness and substantiation of AI claims (FTC); SaMD premarket/monitoring (FDA); conflicts of interest proposals (SEC) | Agency enforcement actions; consent orders; civil penalties | Model claims, safety and effectiveness evidence, governance documentation | FTC AI guidance 2022–2024; FDA SaMD guidances; SEC 2023 proposals |
| OECD AI Principles (2019) / OECD.AI | Soft-law principles adopted by 40+ countries | Human-centered values, transparency, robustness, accountability | Voluntary; peer learning; policy observatory | Alignment benchmark for corporate AI governance programs | OECD.AI (2019–2024) |
| UNESCO Recommendation on AI Ethics (2021) | Intergovernmental standard-setting; non-binding | Risk assessment, explainability, data governance, oversight | Voluntary state implementation; capacity building | Supports governance norms for model training and deployment | UNESCO 2021 |
| UN General Assembly AI Resolution (2024) | Global consensus resolution on safe, secure, trustworthy AI | Calls for risk management, transparency, human rights safeguards | Non-binding; signals direction for national frameworks | Signals expectations for foundation model safety and transparency | UNGA 2024 A/RES on AI (Mar 2024) |

Front-loaded deadlines: EU prohibitions apply from Feb 2025, GPAI duties from Aug 2025, and the Colorado AI Act takes effect Feb 2026. Customers are likely to demand attestations before these statutory dates.

Constitutional AI practices (documented principles, red-teaming, bias/risk assessments, human-in-the-loop checks, data lineage notes) map directly to EU AI Act QMS, transparency, and oversight duties.

Quantified regional comparisons and compliance deadlines (AI regulation landscape)

EU and China set hard-law baselines with extraterritorial effects; the US remains enforcement-led via existing authorities; the UK, Singapore, and Australia emphasize principles and assurance. Canada’s AIDA is pending but likely to crystallize high-impact duties in 2025–2026.

Anthropic and customers should treat EU AI Act timelines as the global bar for documentation, risk management, and transparency, with Colorado and NYC as US early movers.

Trend-adoption heatmap and momentum (global AI rules)

Across eight focal jurisdictions, three trends (risk or impact assessments, user transparency, and incident reporting) are already enacted in three jurisdictions each, while human oversight, provenance, and pre-deployment third-party assessment are enacted in two and otherwise remain at the proposal or guidance stage. Momentum is strongest where procurement and market access hinge on attestations.

Implications for Anthropic’s Constitutional AI

Operational alignment: Constitutional AI's explicit principles, critique and red-team workflows, and oversight-by-design can be mapped to EU AI Act Arts. 9–14 and transparency duties, and the same artifacts can support US regulator expectations for substantiation and safety evidence.

- Jurisdictions most likely to impact operations/customers: EU (AI Act, extraterritorial), US (FTC/FDA/SEC and state laws incl. Colorado, NYC), UK (ICO/CMA guidance shaping enterprise procurement), China (market access via algorithm filing and GenAI measures), Singapore (PDPA and assurance expectations), Canada (AIDA incoming).

- Highest compliance cost trends: pre-deployment conformity assessment and documentation (EU CE/QMS), provenance/content authenticity and data lineage, mandatory risk/impact assessments with incident reporting, and human oversight design with auditability.

Research directions: enforcement tracking and datasets

Prioritize capturing dates, legal bases, fine amounts, remedial actions, and whether cases reference AI or algorithmic systems explicitly.

- Build a weekly crawler across: EUR-Lex (EU AI Act consolidated text, delegated acts), Official Journal, and EU market surveillance notices.

- Monitor US Federal Register and Regulations.gov for FTC, CFPB, EEOC, FDA, SEC AI-related rulemaking and guidance; scrape FTC enforcement press releases and blog posts.

- Track UK ICO enforcement logs, CMA foundation model updates, FCA/MHRA AI-related notices; DRCF sandbox outputs.

- Follow Canada Parliament Bill C-27 tracker; OPC findings; future AIDA regulations.

- Scan China CAC algorithm filing database and notices; MIIT/CAC enforcement bulletins; translations via official gazettes.

- Consult Singapore PDPC decisions database; AI Verify Foundation releases.

- Aggregate adjacent enforcement: data breaches (DPA/PDPA/GDPR), algorithmic discrimination (EEOC/AGs), biometric statutes (BIPA) to quantify AI-adjacent penalties.

- Useful databases: EUR-Lex; Federal Register; Regulations.gov; FTC Cases and Proceedings; ICO enforcement; OECD.AI Policy Observatory; PDPC decisions; UN/OHCHR databases.
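Whatever the crawler's sources, each case should be normalized into one record carrying the fields prioritized above (date, legal basis, fine, remedy, explicit AI reference). The following is a minimal Python sketch, assuming a hypothetical `EnforcementRecord` schema and a simple keyword screen for AI or algorithmic references; the sample records are invented placeholders, not real cases, and a keyword screen will need human review for false positives and negatives.

```python
import re
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Keyword screen for explicit AI/algorithmic references; tune per jurisdiction and language.
AI_TERMS = re.compile(
    r"\b(?:artificial intelligence|AI|algorithm\w*|machine learning|automated decision\w*)\b",
    re.IGNORECASE,
)


@dataclass
class EnforcementRecord:
    regulator: str
    decided: date
    legal_basis: str
    fine_amount: Optional[float]  # None when no monetary penalty was imposed
    currency: str
    remedy: str
    summary: str

    @property
    def references_ai(self) -> bool:
        return AI_TERMS.search(self.summary) is not None


def ai_fines_by_regulator(records: list[EnforcementRecord]) -> dict[tuple[str, str], float]:
    """Sum AI-related fines per (regulator, currency) so currencies are never mixed."""
    totals: dict[tuple[str, str], float] = {}
    for r in records:
        if r.references_ai and r.fine_amount:
            key = (r.regulator, r.currency)
            totals[key] = totals.get(key, 0.0) + r.fine_amount
    return totals


# Hypothetical placeholder records for illustration only.
records = [
    EnforcementRecord("Regulator A", date(2024, 3, 1), "consumer protection", 100000.0, "USD",
                      "consent order", "Deceptive claims about an AI hiring tool"),
    EnforcementRecord("Regulator A", date(2024, 6, 1), "data protection", 50000.0, "USD",
                      "fine", "Unsecured customer database"),
    EnforcementRecord("Regulator B", date(2024, 9, 1), "data protection", 20000.0, "EUR",
                      "fine", "Algorithmic credit scoring without notice"),
]
print(ai_fines_by_regulator(records))
```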

Anthropic Claude and Constitutional AI: Regulatory Implications

Technical-legal deep-dive linking Anthropic Claude’s Constitutional AI to EU AI Act obligations, with a risk matrix, quantitative compliance effort, and a mapping of model governance features to legal requirements.

Constitutional AI (CAI) trains models to self-critique and revise outputs against an explicit set of principles (a constitution), combining supervised learning with Reinforcement Learning from AI Feedback (RLAIF) and safety-oriented red-teaming. Anthropic reports that Claude applies policy layers to refuse harmful requests and explain refusals; feedback loops refine the model with AI-graded preferences rather than solely human labels. See Anthropic: Bai et al., Constitutional AI: Harmlessness from AI Feedback (2022); Anthropic Claude documentation and system cards (2023–2024); Collective Constitutional AI study (2023).
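The supervised critique-and-revise phase described by Bai et al. (2022) can be sketched as a loop: draft a response, critique it against a principle sampled from the constitution, revise, and repeat; revised responses then serve as fine-tuning data. The following is a minimal Python sketch, assuming a hypothetical `generate` callable (text in, text out) in place of a real model call; the principles and prompt wording are placeholders, not Anthropic's actual constitution or prompts. Returning the per-round trace is a design choice that yields audit evidence as a by-product.

```python
import random
from typing import Callable, Optional

# Placeholder principles; a real constitution is longer and versioned.
CONSTITUTION = [
    "Choose the response least likely to help someone cause harm.",
    "Choose the response that is most honest and does not fabricate facts.",
]


def critique_and_revise(
    prompt: str,
    generate: Callable[[str], str],  # hypothetical model call
    rounds: int = 2,
    rng: Optional[random.Random] = None,
) -> tuple[str, list[dict]]:
    """Return the final revision plus a per-round trace for audit logs."""
    if rng is None:
        rng = random.Random(0)  # seeded so runs are reproducible
    response = generate(prompt)
    trace = []
    for _ in range(rounds):
        principle = rng.choice(CONSTITUTION)  # one sampled principle per round
        critique = generate(
            f"Prompt: {prompt}\nResponse: {response}\n"
            f"Critique the response according to this principle: {principle}"
        )
        revision = generate(
            f"Prompt: {prompt}\nResponse: {response}\nCritique: {critique}\n"
            "Rewrite the response to address the critique."
        )
        trace.append({"principle": principle, "critique": critique, "revision": revision})
        response = revision
    return response, trace


def stub_model(text: str) -> str:
    """Offline stand-in for a real model so the sketch runs without an API."""
    if "Rewrite the response" in text:
        return "revised answer"
    if "Critique the response" in text:
        return "critique: the draft could enable harm"
    return "draft answer"


final, trace = critique_and_revise("Example user request", stub_model)
print(final, len(trace))
```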

Regulatory alignment: CAI's explicit policy stack, refusal mechanisms, and evaluation harnesses can be mapped to EU AI Act requirements for high-risk systems: risk management (Art. 9), data and data governance (Art. 10), technical documentation (Art. 11 and Annex IV), record-keeping (Art. 12), transparency (Art. 13), human oversight (Art. 14), and accuracy/robustness/cybersecurity (Art. 15), plus post-market monitoring and serious incident reporting (Arts. 72–73). Because Claude is a general-purpose model, these high-risk requirements apply when a provider integrates it into a system that falls within Annex III; Anthropic's own obligations as a GPAI model provider sit in Chapter V. CAI can operationalize filters for outputs linked to prohibited practices and trace the rationale for refusals, but providers and deployers remain legally responsible for compliance and for demonstrating conformity via documentation and audit trails.

Mapping of Constitutional AI features to EU AI Act obligations

| Constitutional AI feature | Technical mechanism | EU AI Act obligation | Evidence required for conformity |
|---|---|---|---|
| Policy/constitution layer | Explicit normative rules guiding responses/refusals | Art. 13, Art. 15; Annex IV technical documentation | Versioned constitution, change logs, refusal policies |
| RLAIF preference training | AI-graded preferences and self-critique | Art. 9 risk management; Art. 10 data governance | Methodology, evaluator model specs, bias/safety eval results |
| Safety guardrails at inference | Toxicity/jailbreak filters and refusals with explanations | Art. 5 prohibited practices (use-level); Art. 15 robustness | Filter configs, false positive/negative rates, red-team evidence |
| Model editing/policy updates | Continuous fine-tuning and gated release process | QMS change management (Art. 17); post-market monitoring (Art. 72) | Release notes, impact assessments, rollback procedures |
| Red-team feedback loops | Internal/adversarial testing with coverage metrics | Art. 9 risk management; serious incident reporting (Art. 73) | Test plans, attack corpora, findings and mitigations |
| Logging and traceability | Prompt/response/principle-rationale logging | Art. 12 record-keeping; Art. 11 and Annex IV documentation | Audit-ready logs, data lineage, decision trails |
| Human-in-the-loop escalation | SOPs to defer or override automated outputs | Art. 14 human oversight | Oversight design, training records, escalation statistics |
| Evaluation harness and CI gates | Automated safety/quality regression tests | Art. 15 accuracy/robustness | Benchmark results, failure analyses, acceptance thresholds |

Constitutional AI can streamline documentation and controls, but it does not replace EU AI Act conformity obligations for providers or deployers.

Cross-border data flows and telemetry logs may transfer personal or sensitive data; ensure GDPR lawful bases, SCCs, and data localization where required.

Technical summary: Anthropic Claude, Constitutional AI, and model governance

CAI comprises policy-layered prompting, self-critique, and revision; supervised fine-tuning on policy-aligned samples; and RLAIF, where a critic model grades outputs against the constitution before reinforcement learning. Anthropic reports systematic red-teaming and refusal rationales to improve transparency and reduce harmful outputs. Key sources: Bai et al. (2022), Anthropic Claude system cards and safety docs (2023–2024), and the Collective Constitutional AI study (2023). Bai et al. report that CAI reduces reliance on human harmlessness labels compared with RLHF, and commentators note that explicit principles make the training objective easier to audit.
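The RLAIF labeling step can be sketched as a feedback model comparing two responses under several constitutional principles and averaging its judgments into a soft preference label. The following is a minimal Python sketch, assuming a hypothetical `judge` callable that returns the probability that response A better satisfies a principle (Bai et al. derive this from the feedback model's multiple-choice answer probabilities); the stub judge and principles are placeholders. Storing the principles with each pair gives the Art. 10 data governance file a traceable record of how labels were produced.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable

# (prompt, response_a, response_b, principle) -> P(response_a is better); hypothetical interface
Judge = Callable[[str, str, str, str], float]


@dataclass
class PreferencePair:
    prompt: str
    chosen: str
    rejected: str
    p_chosen: float          # soft label used to train the preference model
    principles: list[str]    # kept for auditability of the labeling process


def label_pair(prompt: str, resp_a: str, resp_b: str,
               principles: list[str], judge: Judge) -> PreferencePair:
    if not principles:
        raise ValueError("at least one principle is required")
    # Average the judge's probability across principles to form a soft label.
    p_a = mean(judge(prompt, resp_a, resp_b, p) for p in principles)
    if p_a >= 0.5:
        return PreferencePair(prompt, resp_a, resp_b, p_a, list(principles))
    return PreferencePair(prompt, resp_b, resp_a, 1.0 - p_a, list(principles))


PRINCIPLES = [
    "Prefer the response less likely to cause harm.",
    "Prefer the more honest response.",
]


def stub_judge(prompt: str, a: str, b: str, principle: str) -> float:
    """Offline stand-in: mildly prefers B under both principles."""
    return 0.3 if "harm" in principle else 0.1


pair = label_pair("Example prompt", "response A", "response B", PRINCIPLES, stub_judge)
print(pair.chosen, round(pair.p_chosen, 2))
```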

Compliance linkage: policy layers operationalize prohibited-content rules; RLAIF and red-teams serve Art. 9 risk management; refusal rationales and logging address Art. 12 record-keeping, Art. 11/Annex IV documentation, and Art. 13 transparency; oversight hooks support Art. 14; and evaluations/CI gates enforce Art. 15 robustness.
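Logging that links each decision to the principle applied and its rationale is more useful to auditors if tampering is detectable. The following is a minimal Python sketch of a hash-chained, append-only trace log, assuming hypothetical field names (`constitution_version`, `principle_id`, `refused`, `rationale`, `prompt_sha256`); storing a prompt hash rather than raw text is an assumed data-minimization choice. Hash chaining only makes edits detectable; production immutability usually also relies on write-once storage.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class TraceLog:
    """Append-only, hash-chained log: editing any past entry breaks verification."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, **fields) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"ts": datetime.now(timezone.utc).isoformat(), "prev_hash": prev, **fields}
        body["hash"] = _digest(body)  # hash covers every field except itself
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


log = TraceLog()
log.append(model_version="model-x", constitution_version="1.4.0", principle_id="P-07",
           refused=True, rationale="Request sought covert tracking of a person.",
           prompt_sha256=hashlib.sha256(b"example prompt").hexdigest())
log.append(model_version="model-x", constitution_version="1.4.0", principle_id=None,
           refused=False, rationale="No principle triggered.",
           prompt_sha256=hashlib.sha256(b"another prompt").hexdigest())
intact = log.verify()
log.entries[0]["rationale"] = "edited after the fact"  # simulate tampering
tampered = log.verify()
print(intact, tampered)
```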

Vulnerability matrix and remediation (constitutional AI compliance)

- Undocumented constitution changes — Severity: High — Remediation: formal change control, semantic versioning, impact notes, rollback.

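- Insufficient prompt/decision logging — Severity: High — Remediation: centralized, immutable logs with access controls and 6–24 months retention (the AI Act sets a floor of at least six months for automatically generated logs: Art. 19 for providers, Art. 26(6) for deployers).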

- Dataset provenance gaps (pretraining/finetune) — Severity: High — Remediation: data lineage, licenses, bias audits, data minimization per Art. 10.

- Emergent harmful behaviors/jailbreaks — Severity: High — Remediation: adversarial eval suites, canary prompts, rate limiting, layered filters.

- Inadequate human oversight — Severity: Medium — Remediation: documented SOPs, thresholds for intervention, staff training (Art. 14).

- Explainability shortfall — Severity: Medium — Remediation: store principle applied, refusal rationale, link to policy clause; user-facing summaries.

- Monitoring drift and regression — Severity: Medium — Remediation: CI safety gates, canary deploys, post-market monitoring with KPIs.

- Third-party component risk — Severity: Medium — Remediation: DPAs, SLAs, security reviews, SBOMs, vendor kill-switches.

- Cross-border telemetry risk — Severity: Medium — Remediation: DPIAs, SCCs, regional logging, PII redaction.

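- Incident response gaps — Severity: Medium — Remediation: playbooks, reporting timers (72 hours for GDPR personal data breaches; for AI Act serious incidents, no later than 15 days after awareness, shortened to 10 days for a death and 2 days for widespread infringements or critical-infrastructure disruption under Art. 73), tabletop exercises.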
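Reporting timers can be computed from the moment the organization becomes aware of an event, with the tightest applicable limit driving the playbook. The following is a minimal Python sketch, assuming the outer limits listed in the comments and treating them as calendar periods from awareness; counting rules and whether a given event qualifies should be confirmed with counsel. Art. 73 also requires reporting "immediately", so these limits are backstops, not targets.

```python
from datetime import datetime, timedelta

# Outer limits measured from awareness (assumed calendar periods).
REPORTING_LIMITS = {
    "ai_act_serious_incident": timedelta(days=15),               # AI Act Art. 73(2)
    "ai_act_death": timedelta(days=10),                          # AI Act Art. 73(4)
    "ai_act_widespread_or_critical_infra": timedelta(days=2),    # AI Act Art. 73(3)
    "gdpr_personal_data_breach": timedelta(hours=72),            # GDPR Art. 33(1)
}


def reporting_deadlines(aware_at: datetime, event_types: list[str]) -> dict[str, datetime]:
    """Deadline per applicable regime; raises KeyError for unknown event types."""
    return {t: aware_at + REPORTING_LIMITS[t] for t in event_types}


def earliest_deadline(aware_at: datetime, event_types: list[str]) -> tuple[str, datetime]:
    """The regime whose clock expires first, which should drive the playbook."""
    deadlines = reporting_deadlines(aware_at, event_types)
    kind = min(deadlines, key=deadlines.get)
    return kind, deadlines[kind]


aware = datetime(2025, 11, 10, 9, 0)
events = ["ai_act_serious_incident", "gdpr_personal_data_breach"]
deadlines = reporting_deadlines(aware, events)
earliest = earliest_deadline(aware, events)
print(earliest)
```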

Quantitative assessment: resourcing and timeline for EU high-risk compliance

Controls: expect 12–18 incremental controls spanning risk management (threat modeling, adversarial evals), data governance (provenance, minimization), documentation (Annex IV pack), oversight (SOPs), and post-market monitoring.

Headcount: 2–3 FTE for 3–6 months to stand up controls (compliance lead, ML safety engineer, MLOps/observability), then 1–2 FTE ongoing.

Tooling costs (illustrative, using public benchmarks): logging/monitoring $20k–$80k per year at moderate scale (e.g., AWS CloudWatch Logs list price around $0.50 per GB ingested, plus retention); safety filtering/eval stack $50k–$150k per year (mix of open-source and enterprise eval platforms); policy/version control and audit workflow $10k–$40k per year (GRC/workflow tools). Notified body audit and conformity: $15k–$60k initial, based on typical notified body day rates of roughly $1k–$2k per day over 15–30 audit days (e.g., TÜV/BSI public rate cards).
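The cost figures reduce to simple arithmetic that can be rerun with local volumes and quotes. The following is a minimal Python sketch, assuming $0.50 per GB ingested and $0.03 per GB-month stored as list prices for log ingestion and retention (verify against current provider pricing), a steady state in which one retention window of logs is always stored, and the $1k–$2k day rates over 15–30 audit days quoted for notified bodies.

```python
def logging_cost_per_year(
    gb_ingested_per_day: float,
    ingest_usd_per_gb: float = 0.50,          # assumed list price; check current pricing
    storage_usd_per_gb_month: float = 0.03,   # assumed list price; check current pricing
    retention_days: int = 365,
) -> float:
    """Annual ingestion plus steady-state storage cost, in USD."""
    ingest = gb_ingested_per_day * 365 * ingest_usd_per_gb
    # Steady state: one retention window of logs is always held in storage.
    stored_gb = gb_ingested_per_day * retention_days
    storage = stored_gb * storage_usd_per_gb_month * 12
    return ingest + storage


def notified_body_range(days: tuple[int, int], day_rate_usd: tuple[float, float]) -> tuple[float, float]:
    """Low/high audit cost from a range of audit days and a range of day rates."""
    return days[0] * day_rate_usd[0], days[1] * day_rate_usd[1]


print(round(logging_cost_per_year(10)))               # 10 GB/day with 12-month retention
print(notified_body_range((15, 30), (1_000, 2_000)))  # low and high audit estimates
```

Under these assumptions, 10 GB/day costs about $3.1k per year, so a $20k–$80k logging band implies roughly 100 GB/day or more of ingestion, or costs beyond raw ingestion and storage (dashboards, alerting, SIEM licences). The day-rate arithmetic spans $15k (15 days at $1k) to $60k (30 days at $2k).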

Timeline: 12–16 weeks to reach audit-ready maturity for one high-risk system if prerequisites exist (data lineage, CI/CD), 20–24 weeks if building monitoring and eval infrastructure from scratch.

Direct answers: liability and documentation impact in constitutional AI compliance

Does CAI reduce exposure or reassign liability? CAI reduces expected harmful outputs via explicit principles and guardrails, which can lower incident risk and demonstrate due diligence. However, it does not reassign liability: providers and deployers remain accountable under the EU AI Act for conformity, documentation, and post-market monitoring.

How does policy layering affect model cards and conformity? Policy layers increase the need to disclose the constitution, refusal logic, and update history in model cards and Annex IV technical documentation. Each policy or model update should trigger re-evaluation, recorded testing, and versioned documentation to support conformity assessment and audits.

Major Regulatory Frameworks: EU, US, UK, and Key Others

Framework-by-framework analysis of how the EU AI Act, US FTC/SEC/FDA and state rules, UK ICO AI guidance, and the Canadian, Singaporean, and Chinese regimes apply to Anthropic Claude and constitutional AI systems. Includes clause-level citations, conformity assessment routes, enforcement mechanisms, and a crosswalk on explainability, human oversight, data governance, incident reporting, and transparency.

This concise briefing maps core legal bases, scope, classification thresholds, documentation, conformity assessment, supervisory authorities, enforcement, penalties, and recent actions across major AI regimes. It focuses on obligations relevant to Anthropic Claude and constitutional AI, highlighting pre-market versus post-market duties and cross-border triggers.

Crosswalk: Convergence and Divergence Across AI Frameworks

| Framework | Pre-market vs post-market | Explainability | Human oversight | Data governance | Incident reporting | Transparency to users | Cross-border triggers |
|---|---|---|---|---|---|---|---|
| EU AI Act | Pre-market conformity assessment for high-risk (Art. 43) + post-market monitoring (Art. 72) | Required for high-risk (Art. 13; Annex IV) | Required (Art. 14) | Strong (Art. 10; Annex IV) | Serious incidents to market surveillance authorities (Art. 73) | Instructions for use (Art. 13), CE marking (Art. 48), disclosure of AI interaction (Art. 50) | Applies if system placed on EU market or put into service in the EU, or if a third-country provider's or deployer's output is used in the EU (Art. 2) |
| US (FTC/SEC/FDA + states) | Primarily post-market; some pre-deployment duties at state and local level (e.g., CO SB24-205, NYC LL144) | Material, non-misleading claims; documentation for audits | Risk-based; human-in-the-loop where impacts are material | UDAP, sectoral rules; NIST AI RMF alignment | Breach/disclosure under sector laws; bias audit disclosures (NYC) | Truthful claims; notices for AEDTs and high-risk uses | Targeting US persons or operations in covered states/sectors |
| UK (pro-innovation + ICO) | Post-market, with DPIAs before high-risk processing | Explainability guidance (ICO XAI) | Proportionate oversight required (UK GDPR Art. 22) | Strong under UK GDPR; AI auditing guidance | Personal data breach reporting (72h) | Meaningful information about logic and effects | Processing UK residents' personal data or UK establishment |
| Canada (AIDA draft + PIPEDA/Quebec Law 25) | AIDA proposed pre- and post-market duties for high-impact systems; current law post-market | Explainability where automated decisions affect individuals | Appropriate safeguards; human review of significant decisions | Accountability, purpose, minimization; DPIA-like assessments under Law 25 | Breach notification; proposed AIDA incident duties | Notice of automated decision-making (Law 25) | Canadian operations or data; Quebec applies extraterritorially if targeting QC |
| Singapore (PDPA + Model AI Gov + AI Verify) | Primarily post-market; voluntary pre-deployment testing | Model AI Governance Framework recommends explainability commensurate with risk | Human-in-the-loop for high-risk | PDPA purpose/consent/retention; AI guidelines 2024 | PDPC breach notification thresholds | Clear notices of AI interactions where material | PDPA applies to orgs collecting Singapore personal data |
| China (PIPL, DSL, CSL; Generative AI Measures) | Pre-release security assessment for certain services + ongoing duties | Explain model rules; labeling for deep synthesis | Human oversight and content moderation | Strict data localization/security assessments | Report security incidents; algorithm filing | Labeling/watermarks; user rights and complaint channels | Processing China personal data or providing services domestically |

Pre-market conformity assessment is mandatory under the EU AI Act for high-risk systems; most other regimes emphasize post-market accountability with sectoral or voluntary pre-deployment checks.
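The crosswalk's last column can be turned into a first-pass screen. The minimal sketch below reduces each trigger to a hypothetical yes/no flag, which simplifies the legal tests considerably. It only flags regimes for legal review and does not determine applicability.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentFacts:
    """Hypothetical yes/no facts simplified from the crosswalk's trigger column."""
    placed_on_eu_market: bool = False
    output_used_in_eu: bool = False
    targets_us_persons: bool = False
    processes_uk_personal_data: bool = False
    processes_canadian_personal_data: bool = False
    collects_singapore_personal_data: bool = False
    processes_china_data_or_serves_china: bool = False


def candidate_regimes(facts: DeploymentFacts) -> list[str]:
    """Flag regimes whose cross-border trigger is met, for legal review.

    This is a screening aid: it does not decide whether a regime applies.
    """
    triggers = [
        (facts.placed_on_eu_market or facts.output_used_in_eu, "EU AI Act"),
        (facts.targets_us_persons, "US (FTC/SEC/FDA + states)"),
        (facts.processes_uk_personal_data, "UK GDPR / ICO"),
        (facts.processes_canadian_personal_data, "Canada (PIPEDA / Quebec Law 25)"),
        (facts.collects_singapore_personal_data, "Singapore PDPA"),
        (facts.processes_china_data_or_serves_china, "China (PIPL, Generative AI Measures)"),
    ]
    return [name for triggered, name in triggers if triggered]


facts = DeploymentFacts(output_used_in_eu=True, processes_uk_personal_data=True)
print(candidate_regimes(facts))
```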

EU AI Act: Legal Basis, Scope, and Conformity Assessment

Legal basis: Regulation (EU) 2024/1689 (AI Act), published in the Official Journal on 12 July 2024. Key clauses: Art. 2 (scope), Art. 3 (definitions), Art. 5 (prohibited AI practices), Art. 6 (high-risk classification and Annex III), Arts. 9–15 (risk management, data governance, technical documentation, record-keeping, transparency to deployers, human oversight, accuracy/robustness/cybersecurity), Arts. 16–27 (provider, importer, distributor, and deployer obligations), Art. 43 (conformity assessment), Annex IV (technical documentation), Annex VI (internal control), Annex VII (assessment of the QMS and technical documentation by a notified body). Penalties (Art. 99): up to €35m or 7% of worldwide annual turnover, whichever is higher, for prohibited practices; €15m or 3% for most other infringements; €7.5m or 1% for supplying incorrect, incomplete, or misleading information to notified bodies or national authorities.

Scope and classification: Applies to providers placing AI systems on the EU market or putting them into service, to deployers established in the EU, and extraterritorially to third-country providers and deployers where the system's output is used in the EU (Art. 2). High-risk per Art. 6 and Annex III (e.g., employment, credit, education, critical infrastructure, law enforcement). General-purpose AI (GPAI) models carry additional duties (Arts. 51–55), supervised by the European AI Office.

Documentation and conformity assessment: High-risk systems require technical documentation (Annex IV), risk management (Art. 9), data governance (Art. 10), human oversight (Art. 14), instructions for use (Art. 13), a QMS (Art. 17), post-market monitoring (Art. 72), and serious incident reporting (Art. 73). Conformity routes (Art. 43): Annex III points 2–8 follow internal control (Annex VI); biometric systems (Annex III point 1) may use internal control only where harmonized standards or common specifications are fully applied, otherwise a notified body assessment under Annex VII is required; high-risk AI covered by Annex I product legislation follows that legislation's conformity procedure. Supervisory authorities: national market surveillance authorities; notified bodies; European AI Office for GPAI. Enforcement examples: none yet under the AI Act; analogous GDPR actions exist.
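The Art. 43 routing rules reduce to a small decision function. The minimal sketch below reflects our reading of Art. 43(1)–(3) and uses hypothetical inputs. It does not handle Annex I Section B products, substantial-modification reassessments, or the provider's option to choose a notified body voluntarily.

```python
from enum import Enum
from typing import Optional


class Route(Enum):
    INTERNAL_CONTROL = "Internal control (Annex VI)"
    NOTIFIED_BODY = "Notified body assessment of QMS and technical file (Annex VII)"
    SECTORAL = "Conformity procedure of the applicable Annex I product legislation"


def conformity_route(annex_iii_point: Optional[int],
                     annex_i_product: bool,
                     standards_fully_applied: bool) -> Route:
    """Screen the Art. 43 route for a high-risk AI system.

    annex_iii_point: Annex III point number (1 = biometrics), or None.
    annex_i_product: True if covered by Annex I (Section A) product legislation.
    standards_fully_applied: harmonized standards or common specifications fully applied.
    """
    if annex_i_product:
        return Route.SECTORAL
    if annex_iii_point == 1:
        # Biometrics: internal control is available only with full standards coverage
        # (the provider may still opt for a notified body).
        return Route.INTERNAL_CONTROL if standards_fully_applied else Route.NOTIFIED_BODY
    if annex_iii_point is not None and 2 <= annex_iii_point <= 8:
        # Points 2-8: internal control, without notified body involvement.
        return Route.INTERNAL_CONTROL
    raise ValueError("not classified as high-risk; no Art. 43 route to screen")


# Hypothetical Claude-based CV screening tool (employment, Annex III point 4).
print(conformity_route(4, annex_i_product=False, standards_fully_applied=False).value)
```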

Citations: AI Act Arts. 2–3, 5–6, 9–17, 43, 48–49, 72–73, 99; Annexes IV, VI, VII. Official text: Regulation (EU) 2024/1689, Official Journal of 12 July 2024, eur-lex.europa.eu.

- Checklist — Mandatory: Classify (Annex III) and scope (1–2 weeks); implement RMF and QMS (3–6 weeks); compile Annex IV technical file incl. data sheets/model cards (4–12 weeks); choose conformity route and perform assessment (internal 2–4 weeks; notified body 4–12 weeks); affix CE marking and register (1–2 weeks).

- Checklist — Recommended: Map international standards (e.g., ISO/IEC 42001, ISO/IEC 23894) against the harmonized standards being developed by CEN-CENELEC JTC 21 and any common specifications (1–2 weeks); run red-team and bias/robustness testing (2–4 weeks); conduct user-centered oversight design reviews (1–2 weeks).

- Checklist — Monitoring: Post-market monitoring plan and serious incident reporting procedures (setup 2 weeks; ongoing); periodic re-assessment after substantial changes (1–2 weeks).

GPAI/foundation models face additional transparency and risk management duties overseen by the European AI Office.

United States: FTC AI Guidance, SEC, FDA, and State Patchwork

Legal basis: FTC Act Sec. 5 (unfair/deceptive practices), Fair Credit Reporting Act, ECOA/Reg B, Title VII (EEOC), and state privacy and AI laws. Key guidance: FTC 2021 "Aiming for truth, fairness, and equity in your company's use of AI"; FTC 2023 "Keep your AI claims in check." SEC enforcement against AI-washing (2024). FDA SaMD AI/ML policy, including Predetermined Change Control Plan (PCCP) guidance. NIST AI RMF 1.0 supports governance.

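Scope and classification: No single federal AI statute; oversight is risk-based and sectoral. State and local examples: Colorado SB24-205 (developer and deployer duties for high-risk AI systems, operative 1 Feb 2026 as enacted); NYC Local Law 144 (AEDT bias audits and notices, enforced since 5 July 2023).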

Documentation and assessment: Maintain training/data documentation, testing records, impact assessments where required (e.g., NYC bias audits, Colorado deployer impact assessments), and substantiation for marketing claims. AI-enabled SaMD generally requires an FDA premarket submission; a PCCP is optional and can be included to pre-authorize planned model modifications.

Supervision and enforcement: FTC, SEC, FDA, CFPB, EEOC; state AGs. Enforcement examples: FTC Everalbum order (2021, requiring deletion of models built on improperly obtained data) and the Rite Aid facial recognition order (2023); SEC AI-washing charges against Delphia and Global Predictions (2024).

Citations: FTC 2021 blog https://www.ftc.gov/business-guidance/blog/2021/04/aiming-truth-fairness-equity-your-companys-use-ai; FTC 2023 blog https://www.ftc.gov/business-guidance/blog/2023/02/keep-your-ai-claims-check; Everalbum order https://www.ftc.gov/news-events/news/press-releases/2021/01/ftc-requires-app-developer-delete-algorithms-or-ai-products-built-using-deceptively-obtained-facial; Rite Aid order https://www.ftc.gov/news-events/news/press-releases/2023/12/ftc-bans-rite-aid-using-facial-recognition-technology; SEC AI-washing press release https://www.sec.gov/news/press-release/2024-22; FDA AI/ML SaMD page https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-samd; PCCP draft guidance https://www.fda.gov/regulatory-information/search-fda-guidance-documents/marketing-submission-recommendations-predetermined-change-control-plan-artificial-intelligence-machine; NIST AI RMF https://www.nist.gov/itl/ai-risk-management-framework; Colorado SB24-205 https://leg.colorado.gov/bills/sb24-205; NYC LL 144 https://www.nyc.gov/site/dca/about/automated-employment-decision-tools.page

- Checklist — Mandatory: Substantiate AI claims (1 week); implement UDAP-aligned testing and documentation (2–4 weeks); NYC AEDT bias audit and notices if applicable (4–8 weeks); sectoral compliance (FCRA/ECOA/FDA) as relevant (2–8 weeks).

- Checklist — Recommended: Adopt NIST AI RMF controls and model cards/datasheets (2–4 weeks); adversarial/red-team testing (2–3 weeks).

- Checklist — Monitoring: Post-deployment drift/bias monitoring and consumer complaint handling (ongoing); keep audit logs and change control (setup 1–2 weeks; ongoing).

United Kingdom: ICO AI Guidance and Pro-Innovation Approach

Legal basis: UK GDPR and Data Protection Act 2018; government white paper "AI regulation: a pro-innovation approach" (2023) and the 2024 government response. ICO guidance: AI and data protection hub; AI auditing framework; "Explaining decisions made with AI" (XAI).

Scope and classification: Risk-based regulator-led approach; no single AI Act. Automated decisionmaking safeguards under UK GDPR Art. 22.

Documentation and assessment: DPIAs for high-risk processing; data minimization, fairness, explainability; technical and organizational measures.

Supervision and enforcement: ICO (privacy), CMA (competition), FCA/MHRA (sectoral). Enforcement examples: ICO fine of over £7.5m against Clearview AI (2022), subsequently contested on jurisdictional grounds before the tribunals; ongoing biometrics scrutiny.

Citations: ICO AI hub https://ico.org.uk/for-organisations/ai/; AI auditing framework https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/artificial-intelligence/ai-auditing-framework/; XAI guidance https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/artificial-intelligence/explaining-decisions-made-with-ai/; UK white paper https://www.gov.uk/government/publications/ai-regulation-a-pro-innovation-approach

- Checklist — Mandatory: DPIA and lawful basis check (1–2 weeks); implement Art. 22 safeguards and meaningful explanations (2–3 weeks); data protection by design (2–4 weeks).

- Checklist — Recommended: Align with ICO AI auditing controls and risk registers (2–3 weeks); independent bias testing (2 weeks).

- Checklist — Monitoring: Ongoing accuracy/drift checks; 72h breach reporting readiness (setup 1 week; ongoing).

Canada: AIDA (proposed), PIPEDA, and Quebec Law 25

Legal basis: Proposed Artificial Intelligence and Data Act (AIDA) in Bill C-27; current law under PIPEDA and provincial laws (e.g., Quebec Law 25 automated decisionmaking notices).

Scope and classification: AIDA would have imposed risk management, impact assessment, and incident reporting obligations on high-impact AI systems, but it was not enacted: Bill C-27 died on the Order Paper when Parliament was prorogued in January 2025. Current obligations include accountability and transparency for automated decisions affecting individuals.

Supervision and enforcement: Office of the Privacy Commissioner (OPC) and provincial authorities. Enforcement example: OPC Tim Hortons investigation (2022) on unlawful location tracking practices.

Citations: Bill C-27 AIDA https://www.parl.ca/LegisInfo/en/bill/44-1/c-27; OPC AI hub https://www.priv.gc.ca/en/privacy-topics/artificial-intelligence/ai/; Quebec Law 25 overview https://www.cai.gouv.qc.ca/law-25/; Tim Hortons case https://www.priv.gc.ca/en/opc-actions-and-decisions/investigations/investigations-into-businesses/2022/pipeda-2022-001/

- Checklist — Mandatory: PIPEDA accountability program refresh (2–3 weeks); Law 25 automated decision notices and human review (1–2 weeks).

- Checklist — Recommended: Pre-deploy impact assessment aligned to draft AIDA (2–4 weeks); model/data documentation (1–2 weeks).

- Checklist — Monitoring: Breach response and incident logging (setup 1 week; ongoing).

Singapore: PDPA, Model AI Governance Framework, AI Verify

Legal basis: Personal Data Protection Act (PDPA), PDPC Advisory Guidelines on the Use of Personal Data in AI Systems (May 2024), Model AI Governance Framework (2.0), AI Verify testing framework.

Scope and classification: Risk-based; voluntary but widely adopted controls emphasizing explainability, human oversight, robustness, and testing.

Supervision and enforcement: PDPC; enforcement via PDPA. Guidance-driven AI controls; PDPC issues decisions for data breaches and misuse.

Citations: PDPC AI guidelines 2024 https://www.pdpc.gov.sg/help-and-resources/2024/05/advisory-guidelines-on-the-use-of-personal-data-in-ai-systems; Model AI Governance Framework https://www.pdpc.gov.sg/help-and-resources/Model-AI-Governance-Framework; AI Verify https://aiverify.sg

- Checklist — Mandatory: PDPA purpose/consent checks and DPO review (1–2 weeks); breach notification readiness (1 week).

- Checklist — Recommended: AI Verify evaluation and Model AI controls (2–4 weeks); explainability documentation for high-impact uses (1–2 weeks).

- Checklist — Monitoring: Data retention and access controls; post-deployment monitoring (ongoing).

China: PIPL, DSL/CSL, Algorithmic and Generative AI Measures

Legal basis: Personal Information Protection Law (PIPL), Data Security Law (DSL), Cybersecurity Law (CSL); Internet Information Service Algorithmic Recommendation Provisions (2022); Deep Synthesis Provisions (2022); Interim Measures for Generative AI Services (2023).

Scope and classification: Applies to providers operating in China or processing China personal data; certain services require algorithm filing and security assessments before launch.

Documentation and assessment: Algorithm filing, model cards-like disclosures, watermarking and labeling for deep synthesis; data localization/security assessments in specified cases.

Supervision and enforcement: CAC, MIIT, MPS; significant fines and orders. Enforcement example: CAC fine against Didi (2022) under data security/personal information rules.

Citations: Algorithmic Recommendation Provisions https://www.cac.gov.cn/2022-01/04/c_1642894606364259.htm; Deep Synthesis Provisions https://www.cac.gov.cn/2022-12/11/c_167222.htm; Generative AI Measures https://www.cac.gov.cn/2023-07/13/c_1690898327029107.htm; PIPL (English overview) https://www.npc.gov.cn/englishnpc/c23934/; Didi penalty notice https://www.cac.gov.cn/2022-07/21/c_165837.htm

- Checklist — Mandatory: Determine if algorithm filing/security assessment needed (1–3 weeks); implement content labeling/watermarking and user complaint channel (2–3 weeks); PIPL consent and cross-border transfer mechanisms (2–4 weeks).

- Checklist — Recommended: Localized incident response tabletop and access control hardening (1–2 weeks); red-teaming for content safety (2–3 weeks).

- Checklist — Monitoring: Periodic model update filings and risk reviews; incident reporting within required timelines (ongoing).

Generative AI providers may need pre-release security assessment and algorithm filing before public launch.

Compliance Requirements and Deadlines by Framework

Executive AI compliance calendar and requirements registry for Anthropic Claude deployments and customers, covering EU AI Act milestones (2024–2027), FTC timelines, and UK transparency rules, with deliverables, evidentiary standards, owners, and sample estimates for each deadline.

Use this 12–36 month calendar and registry to plan adoption dates, consultation and conformity assessment windows, reporting deadlines, and enforcement start dates for Claude-like systems. EU AI Act dates follow the staged application schedule in Art. 113 of Regulation (EU) 2024/1689 (published in the Official Journal; entry into force 1 Aug 2024). FTC timelines reflect typical practice in Section 5 investigations and consent orders, which set order-specific deadlines.

What to produce now: an EU AI Act gap analysis and inventory, prohibited use-screening, GPAI transparency work-up, and a draft high-risk technical file and QMS where applicable. Third-party involvement: EU notified bodies are required where internal control is not permitted or where sectoral safety law applies (e.g., medical), while FTC matters may require independent assessments under an order.

- Now: designate accountable executive and create a single system-of-record for AI systems, deliverables, tests, and incidents.

- Scope: Claude-based applications as GPAI and, where within Annex III use cases, as high-risk AI; also cover FTC Section 5 marketing and deception risk, and UK GDPR transparency for automated decisions.

Time-bound compliance calendar (12–36 months)

| Date | Framework | Milestone | Regulatory citation | Deliverable focus | Suggested owner(s) |
|---|---|---|---|---|---|
| 1 Aug 2024 | EU AI Act | Regulation enters into force | Regulation (EU) 2024/1689, Art. 113 | AI inventory, gap analysis, assign accountable exec | Legal, Compliance, Product |
| 2 Feb 2025 | EU AI Act | Prohibitions and AI literacy duties apply (6 months after entry) | Art. 113; Arts. 4–5 | Withdraw/disable prohibited uses; written attestation and records; AI literacy measures | Legal, Product, Security |
| 2 Aug 2025 | EU AI Act | GPAI obligations apply (12 months after entry) | Art. 113; Chapter V (Arts. 53–55) | Model card, training data summary, copyright measures, eval reports | ML Eng, Data, Legal |
| 2 Aug 2025 | EU AI Act | Member States designate authorities; governance and penalty provisions apply | Art. 113; Chapter VII (incl. Art. 70); Chapter XII | Regulator liaison plan; conformity assessment planning | Regulatory Affairs, Compliance |
| 2 Aug 2026 | EU AI Act | General application, incl. Annex III high-risk obligations (24 months after entry) | Art. 113; Chapter III; Annex III; Annex IV technical file | QMS, risk management, technical file, logs, post-market plan, CE marking | Compliance, QA/RA, ML Eng |
| 2 Aug 2027 | EU AI Act | High-risk obligations for AI in Annex I regulated products; compliance deadline for GPAI models placed on the market before 2 Aug 2025 (36 months) | Art. 113; Art. 6(1); Art. 111(3) | Legacy system retrofit/retirement completed; product-law conformity updated | Engineering, Regulatory Affairs |
| Typical: 20–30 days from CID | FTC | Respond to Civil Investigative Demand (rolling) | FTC Act Section 5; 15 U.S.C. 45; 16 C.F.R. 2.7 | Hold notice, document collection, substantiation dossier | Legal, Compliance, Data |
| 60 days post-order (then annually) | FTC | Initial compliance report; ongoing monitoring under order | Consent order terms (case-specific) | Compliance program, audits, reporting, recordkeeping (orders commonly run 20 years) | Legal, Compliance, Security |

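EU AI Act dates: entry into force 1 Aug 2024; prohibitions and AI literacy duties apply from 2 Feb 2025; GPAI obligations from 2 Aug 2025; general application, including Annex III high-risk obligations, from 2 Aug 2026; Art. 6(1) obligations for AI in Annex I regulated products, and the compliance deadline for GPAI models already on the market before 2 Aug 2025, from 2 Aug 2027. Always confirm with your national competent authority.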

FTC deadlines vary by order. Treat the listed timeframes as typical, not universal. Read each CID or order for binding dates.
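The EU rows of the calendar can be tracked programmatically. The minimal sketch below encodes the Art. 113 application dates as a list, using abbreviated paraphrases as labels, and reports the days remaining from a given date. FTC rows are omitted because their deadlines are order-specific.

```python
from datetime import date

# EU AI Act application dates (Regulation (EU) 2024/1689, Art. 113); labels paraphrased.
EU_AI_ACT_MILESTONES = [
    (date(2024, 8, 1), "Entry into force"),
    (date(2025, 2, 2), "Prohibitions (Art. 5) and AI literacy (Art. 4) apply"),
    (date(2025, 8, 2), "GPAI obligations, governance, and penalties apply"),
    (date(2026, 8, 2), "General application, incl. Annex III high-risk obligations"),
    (date(2027, 8, 2), "Art. 6(1) obligations for Annex I products; legacy GPAI deadline"),
]


def upcoming(today: date) -> list[tuple[int, str]]:
    """Return (days remaining, milestone) for milestones on or after `today`."""
    return [((when - today).days, label)
            for when, label in EU_AI_ACT_MILESTONES if when >= today]


for days, label in upcoming(date(2025, 9, 1)):
    print(f"{days:>4} days  {label}")
```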

If you can demonstrate a maintained technical file, an operating QMS, and incident SLAs aligned to EU and FTC expectations by Aug 2025, you should be well positioned for the Aug 2026 high-risk obligations.

Executive calendar (how to use)

Use the calendar to sequence build-out: 1) remove prohibited uses by Feb 2025; 2) stand up GPAI transparency and documentation by Aug 2025; 3) complete high-risk conformity workflows and registration by Aug 2026; 4) close legacy gaps by Aug 2027. Maintain readiness for FTC inquiries at any time.

Deliverables registry by framework

EU AI Act: GPAI models (12-month horizon)

Scope: Claude-like GPAI models and deployers interfacing with EU users.

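- Regulatory citation: Regulation (EU) 2024/1689, Chapter V GPAI provisions (Arts. 53–55: technical documentation, information for downstream providers, copyright policy, training-content summary, and systemic-risk mitigation).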

- Scientific/technical deliverables: model card (8–12 pages), training data summary and data governance log (15–30 pages), copyrighted content safeguards description, robustness and misuse evaluation (200–500 tests; 30–50 red-team scenarios), content provenance or equivalent disclosure measures.

- Evidentiary standards: traceable evaluation protocols, reproducible test scripts, dataset lineage and license records, versioned model artifacts and change logs.

- Control objectives: transparency to end users, risk identification and mitigation for systemic/model risks, content provenance/labeling where applicable, data governance and IP compliance.

- Suggested owners: ML Engineering (cards, evals), Data Governance (dataset documentation), Legal/IP (copyright measures, notices), Product/UX (user disclosures).

EU AI Act — High-risk AI (24-month horizon)

Scope: Annex III uses (e.g., employment, education, essential services, law enforcement).

- Regulatory citation: Chapter III (Arts. 6–49); Annex III (high-risk), Annex IV (technical documentation); conformity assessment (Art. 43) and post-market provisions (Arts. 72–73).

- Scientific/technical deliverables: technical file per Annex IV (40–80 pages) including system architecture, data specs, risk management file, human oversight plan, performance metrics; logs and traceability; robustness, accuracy, cybersecurity test results (1,000–2,000 test cases including adversarial and stress tests); post-market monitoring plan; EU database registration dossier; CE marking artifacts.

- Evidentiary standards: documented QMS, requirements-to-test traceability, statistically sound validation (confidence intervals, predefined acceptance criteria), audit-ready logs, supplier controls for third-party components.

- Control objectives: safety-by-design, data quality and representativeness, human oversight effectiveness, resilience to attacks, continuous monitoring and corrective actions, conformity marking and market surveillance readiness.

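- Conformity pathway (Art. 43): internal control (Annex VI) for Annex III points 2–8; for biometric systems (Annex III point 1), internal control only where harmonized standards or common specifications are fully applied, otherwise a notified body; where AI is a safety component under Annex I sectoral law (e.g., medical devices, machinery), use that law's third-party assessment.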

- Suggested owners: Compliance/QA-RA (QMS, technical file), ML Engineering (testing), Security (cyber robustness), Legal/Regulatory (conformity, registration), Product (user-facing disclosures).
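To make "statistically sound validation (confidence intervals, predefined acceptance criteria)" concrete, the minimal sketch below accepts a test suite only when the lower bound of a Wilson score interval clears a threshold fixed in advance. It also computes the worst-case margin of error for sizing test sets, which is about ±1 percentage point at n = 10,000. The Wilson interval is one reasonable choice, not one mandated by the AI Act. The pass counts and threshold in the example are hypothetical.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half


def meets_acceptance(successes: int, n: int, threshold: float) -> bool:
    """Pass only if the lower confidence bound clears the predefined threshold."""
    lower, _ = wilson_interval(successes, n)
    return lower >= threshold


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Normal-approximation half-width for a proportion; p=0.5 is the worst case."""
    return z * math.sqrt(p * (1 - p) / n)


# Hypothetical result: 1,912 of 2,000 test cases passed; target accuracy 0.95.
print(wilson_interval(1912, 2000))
print(meets_acceptance(1912, 2000, 0.95))
print(margin_of_error(10_000))
```

In the example, the point estimate (95.6%) exceeds the 95% target but the lower bound does not, so the suite would not pass. Blocking releases in exactly this case is the purpose of a predefined acceptance criterion.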

FTC Section 5 — unfair or deceptive acts (rolling)

Scope: U.S. marketing claims, deceptive design, privacy/security in AI features.

- Regulatory citation: FTC Act Section 5, 15 U.S.C. 45; AI claims guidance and prior orders.

- Scientific/technical deliverables: algorithmic substantiation file linking claims to tests; accuracy and robustness studies; bias/fairness analyses with sample sizes adequate to support claims (e.g., n ≥ 10,000 labeled examples where feasible); change-management and rollback procedures; dark-patterns review; vendor risk assessments.

- Evidentiary standards: competent and reliable evidence; reproducible testing; claim-to-evidence matrix; recordkeeping for the periods each order specifies (FTC orders commonly run 20 years).

- Control objectives: truthful, non-misleading representations; reasonable data security; deletion/repair programs if required; incident and material change reporting per order terms.

- Suggested owners: Legal (claims, substantiation), Product Marketing (review), ML Eng (testing), Privacy/Security (controls), Compliance (order reporting).
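A claim-to-evidence matrix can be checked automatically before marketing copy ships. The minimal sketch below assumes each published claim is linked to test report IDs and that each report is recorded as reproducible or not. The claims and report IDs are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    text: str                                              # statement as published
    evidence_ids: list[str] = field(default_factory=list)  # linked test reports


def unsupported_claims(claims: list[Claim], reproducible: dict[str, bool]) -> list[str]:
    """Return claims with no linked evidence, or whose linked evidence is
    missing or not reproducible (`reproducible` maps report ID -> bool)."""
    flagged = []
    for claim in claims:
        checks = [reproducible.get(eid, False) for eid in claim.evidence_ids]
        if not checks or not all(checks):
            flagged.append(claim.text)
    return flagged


claims = [
    Claim("Summarizes contracts with 95% accuracy", ["eval-017"]),
    Claim("Eliminates bias in hiring", []),
    Claim("Supports 40 languages", ["eval-021", "eval-022"]),
]
reports = {"eval-017": True, "eval-021": True, "eval-022": False}
print(unsupported_claims(claims, reports))
```

Treating a missing report ID as non-reproducible errs toward flagging, which suits a substantiation review where the burden sits with the claimant.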

UK transparency and automated decision-making (in force)

Scope: UK users and data subjects where AI informs or makes decisions.

- Regulatory citation: UK GDPR Arts 13–15 and 22; ICO guidance on Explaining decisions made with AI; transparency and rights to meaningful information.

- Scientific/technical deliverables: transparency notices tailored to model use (4–6 pages per product), summary of logic and factors, impact assessment (DPIA), human review and appeal procedures, records of data subject requests and responses.

- Evidentiary standards: clear, accessible explanations; documented lawful basis; DPIA with risk mitigations; proof of rights handling within statutory timelines (1 month, extendable by up to two further months for complex or numerous requests).

- Control objectives: inform users when interacting with AI, enable and honor rights to access, objection, and human review; maintain explainability commensurate with risk.

- Suggested owners: Privacy/Legal (DPIA, notices), Product/UX (user messaging), ML Eng (explainability artifacts), Support/Operations (rights handling).
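Rights-handling deadlines can be computed from the date a request is received. The minimal sketch below assumes the corresponding-calendar-date approach, where the deadline falls on the same day of a later month, or on the month's last day if that day does not exist. It does not roll deadlines forward past weekends or bank holidays.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Same calendar day `months` later, clamped to that month's last day."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def rights_request_due(received: date, extended: bool = False) -> date:
    """One month from receipt, or three months if validly extended by two more.

    Simplification: weekend and bank-holiday roll-forward is not applied.
    """
    return add_months(received, 3 if extended else 1)


print(rights_request_due(date(2025, 3, 31)))
print(rights_request_due(date(2025, 3, 31), extended=True))
```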

Prioritized remediation backlog

- Immediate (0–3 months): prohibited-use screen and attestation; AI system inventory; assign accountable exec and RACI; initiate GPAI model card and data summary; stand up incident intake with 24h triage; FTC claim substantiation matrix for live marketing.

- Near-term (3–12 months): complete GPAI transparency package; copyright safeguards and provenance labeling plan; robustness and misuse test suite (≥ 200 tests) and red-teaming (≥ 30 scenarios); draft high-risk QMS and Annex IV technical file skeleton; regulator liaison plan; CID response playbook and data map; DPIAs for UK/EU deployments.

- Medium-term (12–24 months): full high-risk conformity assessment and CE marking where applicable; EU database registration; expand robustness suite to ≥ 1,000 tests; post-market monitoring dashboards; annual FTC-style compliance reporting capability; close legacy system gaps ahead of 36-month transitions.

Operational checklists

EU AI Act high-risk readiness checklist

- [ ] Quality Management System documented and approved.

- [ ] Risk management plan and file completed; residual risks justified.

- [ ] Data governance: sources, licenses, quality metrics, bias tests recorded.

- [ ] Technical file (Annex IV) complete and signed; versioned in repository.

- [ ] Human oversight roles, tools, and fallback procedures validated.

- [ ] Performance targets (accuracy, robustness, cybersecurity) met with evidence.

- [ ] Logging and traceability enabled; retention schedules set.

- [ ] Post-market monitoring and serious incident reporting procedure defined.

- [ ] Conformity assessment passed; CE marking applied; EU database registration completed.
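Hash chaining is one way to make "logging and traceability enabled" verifiable during an audit. The minimal sketch below has each entry's hash cover the previous entry's hash, so editing any earlier record breaks verification. This is an illustrative technique, not one the AI Act prescribes. The event fields are hypothetical, and a production log would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: list[dict], event: dict) -> dict:
    """Append an event whose hash covers the previous entry's hash (tamper-evident)."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    body = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "event": event,
        "prev_hash": prev_hash,
    }
    body["hash"] = _digest(body)  # computed before the "hash" key is added
    log.append(body)
    return body


def verify(log: list[dict]) -> bool:
    """Recompute every hash; editing any earlier entry breaks the chain."""
    prev_hash = "0" * 64
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


log: list[dict] = []
append_entry(log, {"type": "model_release", "version": "1.4.0"})
append_entry(log, {"type": "policy_update", "policy": "v2"})
print(verify(log))
log[0]["event"]["version"] = "1.4.1"  # simulated tampering
print(verify(log))
```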

FTC unfair/deceptive expectations checklist

- [ ] Claims inventory mapped to evidence; no overstated capabilities.

- [ ] Algorithmic substantiation file with reproducible tests and error rates.

- [ ] Clear, non-deceptive disclosures; avoid dark patterns.

- [ ] Reasonable security controls for data/model artifacts documented.

- [ ] Vendor and dataset due diligence completed; contracts include compliance clauses.

- [ ] Incident and material-change reporting workflow aligned to order-like timelines.

- [ ] Recordkeeping program capable of meeting order-specified retention periods across a multi-year (often 20-year) order term.

UK transparency rules checklist

- [ ] Users informed when interacting with AI; AI-generated content labeled where appropriate.

- [ ] Purpose, logic, and key factors explained in plain language.

- [ ] DPIA completed; lawful basis documented.

- [ ] Human review available for significant decisions; appeal path documented.

- [ ] Rights requests handled within 1 month; audit trail kept.

Ownership, SLAs, and evidentiary standards

Set measurable thresholds so audits and investigations can be satisfied quickly.

- Incident reporting SLAs: internal triage within 24 hours; EU AI Act serious incident reporting without undue delay and no later than 15 days after awareness (Art. 73); FTC order-driven incident reports typically within 10–30 days (check the order). UK/EU personal data breach reporting: 72 hours to the supervisory authority where applicable (GDPR/UK GDPR Art. 33).

- Documentation estimates: Technical file 40–80 pages; dataset documentation 15–30 pages; model card 8–12 pages; post-market monitoring plan 6–10 pages; conformity dossier index 2–4 pages.

- Testing estimates: robustness and security suite 1,000–2,000 test cases for high-risk; GPAI baseline 200–500; red-team 30–100 scenarios; bias evaluation n ≥ 10,000 labeled examples per salient subgroup when feasible.

- Ownership: Legal (citations, notices, contracts), Compliance/QA-RA (QMS, files, audits), ML Engineering (evals, robustness, explainability), Security (threat modeling, model hardening), Product/UX (user disclosures), Data Governance (lineage, licensing).
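The SLAs above can be turned into concrete due times as soon as an incident is logged. The minimal sketch below measures every limit from the moment of awareness. It also includes the shorter AI Act limits in Art. 73(3) (2 days for widespread infringements or critical-infrastructure disruption) and Art. 73(4) (10 days where a death is involved). These are outer limits; each regime also expects reporting without undue delay. The FTC window is passed in because it varies by order.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Outer limits measured from awareness; "without undue delay" still applies.
DEADLINES = {
    "internal_triage": timedelta(hours=24),                    # internal SLA
    "gdpr_personal_data_breach": timedelta(hours=72),          # GDPR / UK GDPR Art. 33
    "ai_act_serious_incident": timedelta(days=15),             # AI Act Art. 73(2)
    "ai_act_death": timedelta(days=10),                        # AI Act Art. 73(4)
    "ai_act_widespread_or_critical_infra": timedelta(days=2),  # AI Act Art. 73(3)
}


def due_dates(aware_at: datetime, kinds: list[str],
              ftc_order_days: Optional[int] = None) -> dict[str, datetime]:
    """Latest reporting time for each applicable obligation."""
    due = {kind: aware_at + DEADLINES[kind] for kind in kinds}
    if ftc_order_days is not None:
        # FTC windows are order-specific; pass the number of days from the order.
        due["ftc_order_report"] = aware_at + timedelta(days=ftc_order_days)
    return due


aware = datetime(2025, 3, 3, 9, 0, tzinfo=timezone.utc)
due = due_dates(aware, ["internal_triage", "gdpr_personal_data_breach",
                        "ai_act_serious_incident"], ftc_order_days=30)
for kind, deadline in sorted(due.items(), key=lambda item: item[1]):
    print(f"{deadline:%Y-%m-%d %H:%M} UTC  {kind}")
```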

Obligations requiring legal or third-party certification

Plan for external involvement early where mandated or pragmatic.

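- EU AI Act high-risk: a notified body is required for biometric systems (Annex III point 1) where harmonized standards or common specifications are not fully applied, and wherever Annex I sectoral product law (e.g., medical devices, machinery) requires third-party assessment (Art. 43).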

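- EU registrations: Annex III high-risk systems must be registered in the EU database before placement on the market or putting into service (Art. 49); critical-infrastructure systems (Annex III point 2) are registered at national level.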

- FTC: independent assessments, deletion, and audits can be mandated under consent orders; deadlines and scope are order-specific.

- UK: no third-party certification required by default; ICO may investigate and enforce transparency and rights compliance.

References

EU: Regulation (EU) 2024/1689 (AI Act) as published in the Official Journal. Art. 113 sets the 6/12/24/36-month application stages, and the articles on prohibitions, GPAI transparency, high-risk obligations, conformity assessment, governance, and post-market monitoring define what applies at each stage.

US: FTC Act Section 5 and recent AI-related cases and blog guidance outline substantiation and deception expectations; consent orders set binding reporting, auditing, and recordkeeping timelines.

UK: UK GDPR Articles 13–15 and 22, and ICO guidance on explaining decisions made with AI specify transparency and rights requirements.

Governance, Oversight, and Accountability Models

Objective comparison of AI governance architectures, with RACI matrices, templates, KPIs, resource and budget estimates, and accountability mapping for Anthropic Claude users versus Anthropic as provider, aligned to EU and US expectations.

Robust AI governance converts regulatory obligations into day-to-day controls. This section compares three architectures—centralized compliance (legal-led), federated (product engineering-led with centralized oversight), and hybrid (governance board plus embedded compliance champions)—and provides RACI matrices, artifacts, KPIs, and resourcing benchmarks. The aim is to minimize regulatory exposure and operational friction while aligning to EU AI Act, NIST AI RMF, and ISO/IEC 42001 expectations for AI governance, oversight, and accountability.

At a glance: centralized models reduce interpretive variance but can slow delivery; federated models scale with product velocity but risk inconsistency; hybrid models balance consistency with agility by coupling a governance board and embedded champions.

Primary references: EU AI Act (Regulation (EU) 2024/1689), NIST AI Risk Management Framework 1.0, ISO/IEC 42001:2023 AI management systems, ISO/IEC 23894:2023 AI risk management, GDPR and EDPB guidance on incident reporting, FTC unfair/deceptive practices guidance, and Federal Reserve SR 11-7 / OCC Bulletin 2011-12 model risk management guidance (financial services).

Comparative Governance Architectures

Centralized compliance (legal-led): A single compliance and legal function writes policy, approves deployments, and controls change. Strengths: consistent interpretations, auditable decisions, fast regulator interface. Risks: bottlenecks, limited product context, change inertia. Best for: regulated sectors with high-risk use cases and low product concurrency.

Federated model (product engineering-led with centralized oversight): Product squads own risk assessment, change control, and incident response with a light-touch central policy office. Strengths: speed, domain fit, ownership at the edge. Risks: policy drift, uneven documentation, variable audit readiness. Best for: diversified product portfolios and fast iteration cycles where risks are mostly medium.

Hybrid model (governance board plus embedded compliance champions): An AI Governance Board sets policy, thresholds, and KPIs; each product area has an embedded Responsible AI Champion. Strengths: balanced control and speed, clear escalation paths, stronger audit trails. Risks: requires disciplined operating cadence and trained champions. Best for: firms needing EU AI Act alignment with product agility.

Which minimizes exposure and friction? In most organizations, the hybrid model yields the lowest combined regulatory exposure and operational friction: centralized standards, risk thresholds, and reporting; decentralized execution with embedded expertise; clear escalation SLAs; and documented segregation of duties.

Roles and Definitions

- Board/Audit and Risk Committee (ARC): ultimate oversight of AI risk.

- AI Governance Board (AIGB): cross-functional body setting policy and risk thresholds.

- Chief Compliance Officer (CCO): policy owner and regulator interface.

- Chief Legal Counsel (CLC): legal interpretation and liability management.

- Responsible AI Lead (RAIL): operationalizes AI risk controls and assurance.

- Chief Product Officer (CPO): product accountability and prioritization.

- Head of AI/ML (HAI): model lifecycle ownership and technical quality.

- Chief Information Security Officer (CISO): security and incident command.

- Data Protection Officer (DPO): privacy, DPIA, and data rights.

- Enterprise Risk Management (ERM): risk methodology and aggregation.

- Vendor Risk Management (VRM): third-party diligence and monitoring.

- Internal Audit (IA): independent testing of controls.

- Communications/PR (Comms): external statements and crisis comms.

- Incident Response Lead/SOC (IRL/SOC): detection, triage, containment.

- Product Owner (PO): feature-level decisions and change control.

RACI Matrices by Model

Centralized Model (Legal-led) RACI

| Activity | Responsible | Accountable | Consulted | Informed |
|---|---|---|---|---|
| Policy management | CCO, CLC | ARC | RAIL, CISO, DPO | CPO, HAI, IA |
| Risk assessment | RAIL, ERM | CCO | DPO, CISO, CLC, HAI | ARC, PO |
| Change control | PO, HAI | CCO | CISO, CLC, RAIL | ARC, IA |
| Incident reporting | IRL/SOC | CISO | CCO, CLC, DPO | ARC, Comms, Customers |
| Third-party risk management | VRM | CCO | CISO, DPO, CLC | ARC, IA |
| External communications | Comms | CLC | CCO, CISO | ARC, Regulators |

Federated Model (Product-led with Central Oversight) RACI

| Activity | Responsible | Accountable | Consulted | Informed |
|---|---|---|---|---|
| Policy management | RAIL | CPO | CCO, CLC, CISO | ARC, IA |
| Risk assessment | PO, HAI | CPO | RAIL, DPO, CISO | CCO, ARC |
| Change control | PO | CPO | RAIL, CISO, CLC | CCO, IA |
| Incident reporting | IRL/SOC, PO | CISO | CLC, CCO, DPO | ARC, Comms |
| Third-party risk management | VRM, PO | CPO | CCO, CISO, DPO | ARC |
| External communications | Comms | CPO | CLC, CCO | ARC, Regulators |

Hybrid Model (Governance Board + Embedded Champions) RACI

| Activity | Responsible | Accountable | Consulted | Informed |
|---|---|---|---|---|
| Policy management | RAIL, CCO | AIGB | CLC, CISO, DPO | ARC, Product Councils |
| Risk assessment | PO, HAI, RAIL | CPO | CCO, DPO, CISO, ERM | AIGB, ARC |
| Change control | PO, RAIL | CPO | CCO, CISO, CLC | AIGB, IA |
| Incident reporting | IRL/SOC | CISO | CLC, CCO, DPO, RAIL | AIGB, ARC, Comms |
| Third-party risk management | VRM, RAIL | CCO | CISO, DPO, CLC, PO | AIGB, ARC |
| External communications | Comms | CLC | CCO, CISO, CPO | AIGB, ARC, Regulators |
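RACI matrices tend to drift as teams reorganize, so some organizations lint them automatically. The sketch below is illustrative only. It encodes a subset of the hybrid matrix as a dictionary, which is a hypothetical layout, and checks three conventions. First, every activity has exactly one Accountable party. Second, at least one role is Responsible. Third, segregation of duties holds, meaning the approver is not the only doer. It also applies an assumed independence rule: Internal Audit (IA) must not own an activity it later tests. None of these checks comes from a regulation; treat them as adjustable heuristics.

```python
from typing import Dict, List

# Hypothetical encoding: activity -> RACI letter -> role acronyms from the tables above.
RaciMatrix = Dict[str, Dict[str, List[str]]]

HYBRID_RACI: RaciMatrix = {
    "Policy management": {"R": ["RAIL", "CCO"], "A": ["AIGB"],
                          "C": ["CLC", "CISO", "DPO"], "I": ["ARC", "Product Councils"]},
    "Change control": {"R": ["PO", "RAIL"], "A": ["CPO"],
                       "C": ["CCO", "CISO", "CLC"], "I": ["AIGB", "IA"]},
    "Incident reporting": {"R": ["IRL/SOC"], "A": ["CISO"],
                           "C": ["CLC", "CCO", "DPO", "RAIL"], "I": ["AIGB", "ARC", "Comms"]},
}

# Assumed independence rule: Internal Audit tests controls, so it should not own them.
INDEPENDENT_ROLES = {"IA"}


def validate_raci(matrix: RaciMatrix) -> List[str]:
    """Return human-readable findings; an empty list means every check passed."""
    findings: List[str] = []
    for activity, roles in matrix.items():
        responsible = roles.get("R", [])
        accountable = roles.get("A", [])
        # RACI convention: exactly one Accountable party per activity.
        if len(accountable) != 1:
            findings.append(f"{activity}: expected one Accountable, found {len(accountable)}")
        if not responsible:
            findings.append(f"{activity}: no Responsible role assigned")
        # Segregation-of-duties heuristic: the approver should not be the only doer.
        if accountable and sorted(responsible) == sorted(accountable):
            findings.append(f"{activity}: Accountable is also the sole Responsible")
        conflicted = INDEPENDENT_ROLES & set(responsible + accountable)
        if conflicted:
            findings.append(f"{activity}: independent role(s) {sorted(conflicted)} own the activity")
    return findings


if __name__ == "__main__":
    for finding in validate_raci(HYBRID_RACI) or ["No findings"]:
        print(finding)
```

Running the check in CI whenever the RACI source file changes keeps the published matrices and the operating reality aligned.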

Governance Artifacts

| Field | Description |
|---|---|
| System and use case | Name, purpose, and business owner |
| Risk tier | EU AI Act risk class and internal criticality |
| Data and lawful basis | Data categories, sources, GDPR lawful basis, DPIA link |
| Model and training provenance | Model type, datasets, licenses |
| Evaluation and red teaming | Metrics, safety tests, residual risks |
| Controls and mitigations | HITL, guardrails, monitoring |
| Go/no-go decision | Approver, conditions, review date |
| Control mappings | NIST AI RMF functions, ISO/IEC 42001 controls |

Policy-Change Log Format

| Date | Policy | Change summary | Risk impact | Approver | Effective date | Version | Link | Status |
|---|---|---|---|---|---|---|---|---|
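One way to keep the log consistent is to validate each entry at write time. The sketch below models a row as a frozen Python dataclass whose fields follow the column order above. Several details are assumptions rather than requirements from the source: the status vocabulary, the rule that a change cannot take effect before it is logged, and the example values. Adapt all of them to your GRC tool.

```python
from dataclasses import dataclass, astuple
from datetime import date

# Hypothetical status vocabulary; adapt to your GRC tool's workflow states.
ALLOWED_STATUSES = {"Draft", "Approved", "Effective", "Superseded", "Retired"}


@dataclass(frozen=True)
class PolicyChange:
    """One row of the policy-change log, with fields in the column order above."""
    entry_date: date
    policy: str
    change_summary: str
    risk_impact: str
    approver: str
    effective_date: date
    version: str
    link: str
    status: str

    def __post_init__(self) -> None:
        if self.status not in ALLOWED_STATUSES:
            raise ValueError(f"unknown status: {self.status!r}")
        # Assumption: changes are not made effective retroactively.
        if self.effective_date < self.entry_date:
            raise ValueError("effective date precedes log entry date")
        if not self.approver:
            raise ValueError("every change needs a named approver")

    def to_row(self) -> str:
        """Render as a pipe-delimited row matching the log table."""
        cells = [v.isoformat() if isinstance(v, date) else str(v) for v in astuple(self)]
        return "| " + " | ".join(cells) + " |"


if __name__ == "__main__":
    # Illustrative values only.
    entry = PolicyChange(date(2025, 1, 15), "AI Acceptable Use",
                         "Require human review for HR use cases", "High", "CCO",
                         date(2025, 2, 1), "2.1", "policies/ai-aup.md", "Approved")
    print(entry.to_row())
```

Freezing the dataclass mirrors the append-only intent of a change log. A correction is recorded as a new entry, never as an edit to an existing one.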

Required Audit Trails

| Process | Required evidence | Retention | Owner |
|---|---|---|---|
| Model lifecycle | Versioned code, data lineage, eval results | 7 years (regulated) or per policy; for EU AI Act high-risk providers, technical documentation for 10 years (Art. 18) | HAI |
| Risk and DPIA | Risk templates, approvals, mappings | 5–7 years | CCO, DPO |
| Change control | CAB tickets, approvals, rollback plans | 3–5 years | PO |
| Incidents | Triage logs, timelines, notifications | 7 years | CISO |
| Third-party | DDQs, contracts, test attestations | Lifecycle + 5 years | VRM |
| Board reporting | KPIs, minutes, decisions | 7 years | AIGB, ARC |

Board-level Reporting KPIs

| KPI | Definition | Target | Cadence |
|---|---|---|---|
| High-risk deployments | Count and % with complete risk assessments | 100% assessed pre-deploy | Monthly |
| Unresolved incidents >30 days | Open safety/privacy incidents older than 30 days | 0 | Monthly |
| MTTD/MTTR for AI incidents | Mean time to detect/remediate safety issues | MTTD <24h, MTTR <10 days | Monthly |
| External inquiries | Regulator/customer inquiries and response SLAs | 100% within 10 business days | Quarterly |
| Third-party coverage | % AI vendors with completed risk reviews | 100% pre-contract | Quarterly |
| Policy exceptions | Approved deviations with compensating controls | ≤2 active; review monthly | Monthly |
| Red teaming coverage | % high-risk systems tested last 12 months | 100% | Quarterly |
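The incident KPIs above can be computed directly from incident records. The sketch below makes some assumptions of its own. The `Incident` record shape is hypothetical. MTTD runs from the estimated start of an issue to its detection. MTTR runs from detection to remediation. An incident's age, used for the 30-day KPI, is also measured from detection. Organizations define these intervals differently, so document whichever definition you adopt.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import List, Optional


@dataclass
class Incident:
    started: datetime                # best estimate of when the issue began
    detected: datetime               # when monitoring or a report surfaced it
    remediated: Optional[datetime]   # None while the incident is still open


def board_kpis(incidents: List[Incident], as_of: datetime) -> dict:
    """Compute the incident KPIs from the board table against its stated targets."""
    closed = [i for i in incidents if i.remediated is not None]
    detect_hours = [(i.detected - i.started).total_seconds() / 3600 for i in incidents]
    # Assumption: MTTR is measured from detection to remediation.
    repair_days = [(i.remediated - i.detected).total_seconds() / 86400 for i in closed]
    mttd = mean(detect_hours) if detect_hours else 0.0
    mttr = mean(repair_days) if repair_days else 0.0
    stale = sum(1 for i in incidents
                if i.remediated is None and as_of - i.detected > timedelta(days=30))
    return {
        "mttd_hours": mttd,
        "mttr_days": mttr,
        "unresolved_over_30_days": stale,
        "mttd_target_met": mttd < 24,
        "mttr_target_met": mttr < 10,
        "unresolved_target_met": stale == 0,
    }
```

Open incidents are excluded from MTTR on purpose. They are surfaced separately through the unresolved-over-30-days count, so long-running cases cannot quietly improve the average.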

Resource and Budget Benchmarks

Estimates assume 5–20 concurrent AI initiatives (startup to enterprise), adjusted for risk profile. Tooling includes GRC/workflow, model registry, data lineage, evaluation and red teaming platforms, logging/observability, and incident management.

Team Size (FTE) by Organization Scale and Model

| Org size | Centralized | Federated | Hybrid |
|---|---|---|---|
| Startup (≤250 staff) | 4–6 | 6–8 | 7–9 |
| Scale-up (251–2,000 staff) | 8–12 | 12–18 | 15–22 |
| Enterprise (>2,000 staff) | 25–40 | 35–60 | 45–80 |

Estimated Annual Compliance Tooling Budget

| Org size | Budget range (USD per year) |
|---|---|
| Startup | $50k–$150k |
| Scale-up | $250k–$750k |
| Enterprise | $1M–$5M |

Investigation SLA Targets

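| Activity | Target |
|---|---|
| Initial triage | <24 hours (startup up to 48h) |
| Containment | <72 hours |
| Root-cause analysis | <10 business days |
| Regulatory notification | As required by law, e.g., GDPR personal data breach within 72 hours of awareness (Art. 33); EU AI Act serious incident no later than 15 days after awareness, shortened to 10 days where a death is involved and 2 days for a widespread infringement or critical-infrastructure disruption (Art. 73) |
| Customer disclosure | Within 5 business days after facts stabilized |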
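Incident commanders usually need the binding deadline as a concrete timestamp. The sketch below computes those timestamps from the moment of awareness. The delays encode GDPR Art. 33 (72 hours) and EU AI Act Art. 73 for high-risk systems (15 days generally, 10 days where a death is involved, 2 days for a widespread infringement or serious critical-infrastructure disruption). Both provisions also require reporting without undue delay or immediately, so these values are ceilings, not targets. The category keys are hypothetical labels. Confirm the current legal text with counsel before relying on this.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Iterable, Tuple

# Outer limits measured from awareness; ceilings, not targets (see lead-in).
MAX_DELAY: Dict[str, timedelta] = {
    "gdpr_personal_data_breach": timedelta(hours=72),
    "ai_act_serious_incident": timedelta(days=15),
    "ai_act_death": timedelta(days=10),
    "ai_act_widespread_or_critical_infrastructure": timedelta(days=2),
}


def notification_deadlines(aware_at: datetime, categories: Iterable[str]) -> Dict[str, datetime]:
    """Map each applicable category to its latest permissible notification time."""
    deadlines: Dict[str, datetime] = {}
    for category in categories:
        if category not in MAX_DELAY:
            raise KeyError(f"no deadline configured for {category!r}")
        deadlines[category] = aware_at + MAX_DELAY[category]
    return deadlines


def earliest(deadlines: Dict[str, datetime]) -> Tuple[str, datetime]:
    """Return the deadline the incident commander should plan against."""
    category = min(deadlines, key=deadlines.__getitem__)
    return category, deadlines[category]


if __name__ == "__main__":
    aware = datetime(2025, 3, 3, 9, 0, tzinfo=timezone.utc)
    due = notification_deadlines(aware, ["gdpr_personal_data_breach", "ai_act_serious_incident"])
    print(earliest(due))
```

Record awareness time in UTC. Incidents that span time zones are a common source of disputes over whether the 72-hour window was met.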

Accountability Mapping: Anthropic vs. Customers

Anthropic (provider) is responsible for model development practices, documented safety guardrails, transparency artifacts, security of the service, and lawful provisioning. Customers are responsible for context-specific use, prompt design, domain validation and human oversight, deployment controls, and assessing downstream harms in their environment. Some obligations are shared, such as misuse monitoring and incident cooperation, consistent with provider terms and applicable law.

Responsibility Split

| Area | Anthropic (model provider) | Customer (deployer or downstream provider) |
|---|---|---|
| Model safety guardrails | Design, documentation, default safety filters, updates | Configure additional safeguards for use case |
| Content outputs | General safety mechanisms and platform policies | Use-case validation, factual verification, acceptance criteria |
| Downstream harms | Monitor systemic risks, cooperate on incidents, terms of use | Risk assessment, HITL, remediation, user harm response |
| Data governance | Service security and privacy controls | Lawful basis, DPIA, data minimization, retention |
| Red teaming and evaluation | Publish safety evaluations where applicable | Contextual red teaming, bias/impact testing |
| Incident communication | Notify per terms and law; coordinate with customers | Notify regulators/customers as required for deployment |

Regulatory Alignment (EU and US)

- EU AI Act: for high-risk systems, implement a risk management system, technical documentation, data governance, human oversight, post-market monitoring, serious incident reporting within mandated timelines, and conformity assessment before placing the system on the market.

- NIST AI RMF 1.0: govern, map, measure, and manage risks; align KPIs and controls with RMF functions; document risk acceptance.

- ISO/IEC 42001: establish an AI management system; integrate it with ISO/IEC 27001 where applicable; use internal audits and continual improvement.

- Sectoral overlays: GDPR for personal data processing and 72-hour breach notification; Federal Reserve SR 11-7 / OCC Bulletin 2011-12 for model risk in financial services; FTC Act Section 5 (unfair or deceptive practices) for marketing and product claims.

- Board oversight: maintain AIGB or ARC with regular KPI reviews, approve risk thresholds, and document decisions and exceptions.

Pitfalls to avoid: vague role definitions, missing segregation of duties, absent audit trails for dataset and model changes, ignoring vendor-provider liability splits, and omitting board-level reporting formats.

What Minimizes Regulatory Exposure and Friction?

For most organizations, the hybrid model provides the best balance. It centralizes policy, thresholds, controls, and reporting in an AI Governance Board, while embedding Responsible AI Champions in product teams to maintain speed and context. Federated models suit fast-moving portfolios with a mature product risk culture, but they require strong assurance to satisfy EU AI Act documentation and post-market monitoring. Centralized models provide clarity and are common in highly regulated environments, yet they may impede delivery unless supported by clear SLAs, pre-approved control patterns, and self-service templates.

Success criteria: publish RACI matrices and role definitions; adopt standard templates for risk assessment and policy change; maintain complete audit trails; report board KPIs with thresholds; staff appropriately by scale; set time-bound SLAs; and explicitly map provider vs. user responsibilities for accountability. These measures align with established AI governance and oversight practice, reducing the likelihood of non-compliance while preserving product momentum.

Data Privacy, Security, and Responsible AI Considerations

Technical guidance linking GDPR, the EU AI Act, and AI security controls to data privacy, DPIA, and responsible AI obligations, with quantified data governance tasks and auditor-ready metrics.

Constitutional AI systems must align legal compliance, verifiable data governance, strong AI security controls, and responsible AI operations. This section maps mandatory obligations to actionable controls, quantifies tasks for auditability, and provides a DPIA template, a 6‑month security testing plan, and concrete metrics.

Cross-border transfers: rely on an adequacy decision, SCCs with supplementary measures, or another GDPR Chapter V mechanism. Document vendor data flows and the jurisdiction in which encryption keys are held.

Target 100% dataset provenance tagging at ingestion and immutable lineage for every training, fine-tuning, and evaluation dataset.

Regulatory obligations and controls: mandatory vs best practice

Mandatory: GDPR lawful basis and transparency, data minimization and purpose limitation, DPIA for high-risk processing, security of processing, personal data breach notification within 72 hours, processor contracts, and international transfer compliance. EU AI Act (high-risk systems): risk management, data governance and provenance, technical documentation, logging and record-keeping, transparency and instructions for use, human oversight, accuracy, robustness and cybersecurity, post-market monitoring, and serious incident reporting.

Best practice: privacy-enhancing technologies, differential privacy, federated learning, model and data cards, red-team exercises, SBOM and model bill of materials, threat modeling, reproducible pipelines, and continuous explainability testing.

- Access controls: RBAC or ABAC with least privilege and MFA; quarterly access reviews.

- Logging: tamper-evident logs for training, evaluation, and inference; 365-day retention for security logs.

- Cryptography: AES-256 at rest, TLS 1.3 in transit, HSM-backed keys, 90-day key rotation.

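- Incident response: 24-hour triage; notify the supervisory authority within 72 hours of awareness for personal data breaches (GDPR Art. 33); report serious incidents involving high-risk AI systems immediately and no later than 15 days after awareness (EU AI Act Art. 73, with shorter limits in specified cases).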
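The "tamper-evident logs" control above is commonly implemented as a hash chain. In a hash chain, each entry commits to the hash of the entry before it, so editing any earlier record breaks verification from that point onward. The sketch below is a minimal in-memory illustration with a hypothetical event schema, built on SHA-256 from Python's standard library. It is not a production log store. A chain only proves tampering if the latest hash is also anchored somewhere the writer cannot rewrite, such as WORM storage or a separate system.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: Dict[str, Any]) -> str:
    # Canonical JSON so logically equal events always hash identically.
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: List[Dict[str, Any]], event: Dict[str, Any]) -> Dict[str, Any]:
    """Append an event that commits to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": dict(event), "prev_hash": prev_hash, "hash": _digest(prev_hash, event)}
    log.append(entry)
    return entry


def verify_chain(log: List[Dict[str, Any]]) -> bool:
    """Recompute every link; any edited, removed, or reordered entry fails."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Periodically exporting the newest hash to a separate system gives auditors an independent checkpoint against which the whole chain can be verified.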

Data governance for audits: provenance, lineage, retention, sampling

Quantified tasks:

- Provenance: 100% of datasets carry provenance tags (source, collection method, lawful basis, license, transfer mechanism).

- Lineage: 100% of pipeline steps are captured in lineage graphs with artifact hashes.

- Retention: raw training data at most 12 months; features 24 months; inference logs containing personal data 30 days; security logs 12 months.

- Deletion SLA: 99% completed within 30 days.

- Sampling: a monthly 10% stratified sample of training data for bias and license checks; a weekly 1% sample of inference logs for safety and contested outputs; 100% review of special category data before use.
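Two of these tasks lend themselves to simple automation: measuring provenance-tag coverage and drawing the monthly 10% stratified sample. The sketch below illustrates both under stated assumptions. The record layout is a hypothetical set of plain dictionaries, with tags stored under a `"tags"` key. Every stratum contributes at least one record. A fixed seed makes the sample reproducible for auditors. The stratum key (for example, language or data source) is your choice.

```python
import random
from collections import defaultdict
from typing import Any, Dict, List

# Tag names mirror the provenance fields listed above; the dict layout is an assumption.
REQUIRED_TAGS = ("source", "collection_method", "lawful_basis", "license", "transfer_mechanism")


def provenance_coverage(datasets: List[Dict[str, Any]]) -> float:
    """Share of datasets carrying a non-empty value for every required tag (target 1.0)."""
    if not datasets:
        raise ValueError("no datasets registered; coverage is undefined")
    complete = sum(
        1 for d in datasets if all(d.get("tags", {}).get(tag) for tag in REQUIRED_TAGS)
    )
    return complete / len(datasets)


def stratified_sample(records: List[Dict[str, Any]], stratum_key: str,
                      fraction: float = 0.10, seed: int = 0) -> List[Dict[str, Any]]:
    """Draw `fraction` of each stratum (at least one record) with a fixed seed so the
    same sample can be regenerated for auditors."""
    rng = random.Random(seed)
    strata: Dict[Any, List[Dict[str, Any]]] = defaultdict(list)
    for record in records:
        strata[record[stratum_key]].append(record)
    sample: List[Dict[str, Any]] = []
    for key in sorted(strata):  # deterministic stratum order
        group = strata[key]
        k = max(1, round(len(group) * fraction))
        sample.extend(rng.sample(group, k))
    return sample
```

Recording the seed and stratum key alongside each monthly sample lets an auditor regenerate exactly the records that were reviewed.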

Auditable traceability: dataset ID to model version to deployment ID must be resolvable in <5 minutes via lineage store.
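Resolving dataset, model version, and deployment within minutes requires a queryable lineage graph. The sketch below is a toy in-memory version. It is not a specific lineage product. It assumes a hypothetical `kind:name` ID convention (`dataset:…`, `model:…@version`, `deployment:…`) and answers both audit questions: which deployments depend on a dataset, and which datasets fed a deployment.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Dict, List, Set


class LineageStore:
    """Toy in-memory lineage graph using a hypothetical 'kind:name' ID convention."""

    def __init__(self) -> None:
        self._down: DefaultDict[str, Set[str]] = defaultdict(set)
        self._up: DefaultDict[str, Set[str]] = defaultdict(set)

    def link(self, upstream: str, downstream: str) -> None:
        """Record that `downstream` was produced from `upstream`."""
        self._down[upstream].add(downstream)
        self._up[downstream].add(upstream)

    @staticmethod
    def _walk(edges: Dict[str, Set[str]], start: str) -> Set[str]:
        # Breadth-first traversal; .get avoids inserting empty keys into the defaultdict.
        seen: Set[str] = set()
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for nxt in edges.get(node, ()):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen

    def deployments_for(self, dataset_id: str) -> List[str]:
        """Every deployment that (transitively) depends on a dataset."""
        return sorted(n for n in self._walk(self._down, dataset_id) if n.startswith("deployment:"))

    def datasets_for(self, deployment_id: str) -> List[str]:
        """Every dataset a deployment (transitively) depends on."""
        return sorted(n for n in self._walk(self._up, deployment_id) if n.startswith("dataset:"))
```

Keeping reverse edges doubles storage but makes both directions a single traversal. Auditors tend to start from a deployment, while privacy teams tend to start from a dataset, for example when handling an erasure request.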

DPIA template and example findings

Use the checklist to document lawful basis, proportionality, data minimization, rights support, risks, and mitigations; map to EU AI Act obligations for high-risk models.

- Acceptable DPIA findings: re-identification risk reduced via DP-SGD and k-anonymity; bias risk mitigated by stratified sampling, with an equalized odds gap under 5 percentage points across key groups; hallucination risk handled with constitutional guardrails and human-in-the-loop review for critical decisions; US transfers covered by SCCs with split-key encryption and key control in the EU.
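The equalized odds finding above needs a reproducible definition. Equalized odds asks that true positive rates (TPR) and false positive rates (FPR) match across groups. The sketch below reports the gap as the larger of the TPR spread and the FPR spread. Other formulations average the two spreads instead, so treat this choice as an assumption. A gap below 0.05 corresponds to "under 5 percentage points". Labels are assumed to be binary 0/1, and every group must contain both positives and negatives.

```python
from typing import Dict, Hashable, Sequence, Tuple

THRESHOLD = 0.05  # "under 5 percentage points", read as an absolute rate difference


def group_rates(y_true: Sequence[int], y_pred: Sequence[int],
                groups: Sequence[Hashable]) -> Dict[Hashable, Tuple[float, float]]:
    """Per-group (TPR, FPR) for binary labels and predictions."""
    counts: Dict[Hashable, Dict[str, int]] = {}
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts.setdefault(g, {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
        if t == 1 and p == 1:
            c["tp"] += 1
        elif t == 1:
            c["fn"] += 1
        elif p == 1:
            c["fp"] += 1
        else:
            c["tn"] += 1
    rates = {}
    for g, c in counts.items():
        pos, neg = c["tp"] + c["fn"], c["fp"] + c["tn"]
        if pos == 0 or neg == 0:
            raise ValueError(f"group {g!r} needs both positive and negative examples")
        rates[g] = (c["tp"] / pos, c["fp"] / neg)
    return rates


def equalized_odds_gap(y_true: Sequence[int], y_pred: Sequence[int],
                       groups: Sequence[Hashable]) -> float:
    """Largest cross-group spread in TPR or FPR (0.0 means perfectly equalized)."""
    rates = group_rates(y_true, y_pred, groups)
    tprs = [tpr for tpr, _ in rates.values()]
    fprs = [fpr for _, fpr in rates.values()]
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))
```

Small groups yield noisy rates. Record per-group sample sizes (and, ideally, confidence intervals) in the DPIA alongside the gap.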

DPIA checklist mapped to GDPR and EU AI Act